Version 7.5, July 2022

Table of contents

Introduction

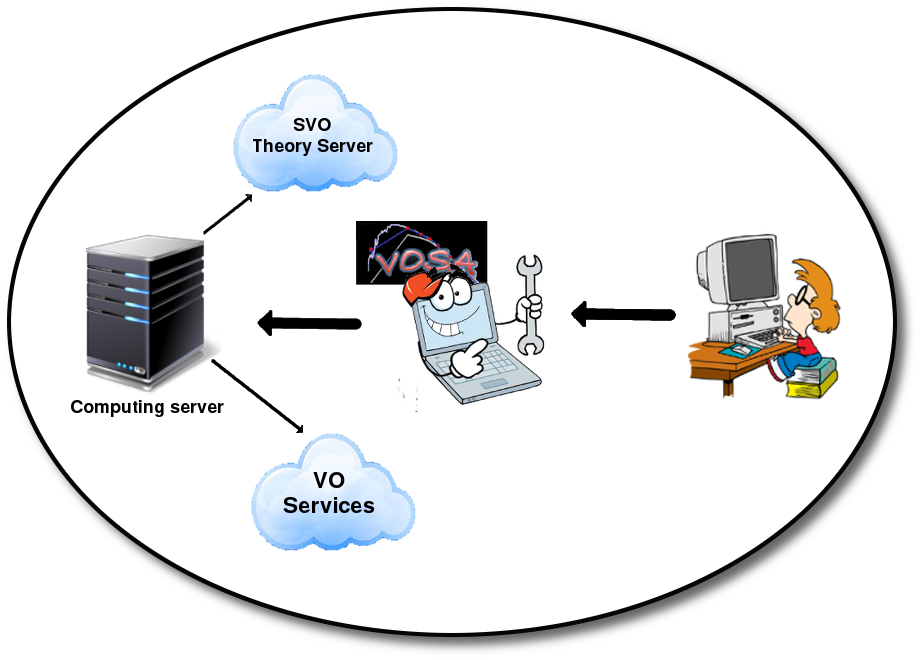

VOSA (VO Sed Analyzer) is a tool designed to perform the following tasks in an automatic manner:

- Read user photometry-tables.

- Query several photometrical catalogs accessible through VO services (increases the wavelength coverage of the data to be analyzed).

- Query VO-compliant theoretical models (spectra) and calculate their synthetic photometry.

- Perform a statistical test to determine which model reproduces best the observed data (optionally fitting at the same time the optimal interstellar extinction).

- Provide the likelihood of the model parameters (and the interestellar extinction).

- Use the best-fit model as the source of a bolometric correction.

- Provide the estimated bolometric luminosity for each source.

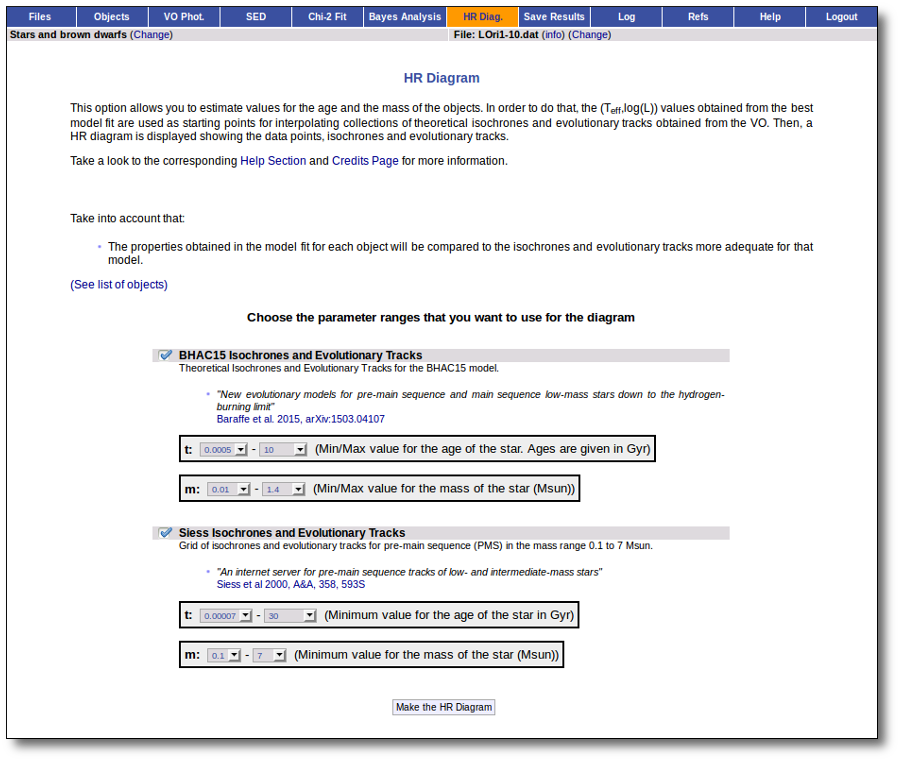

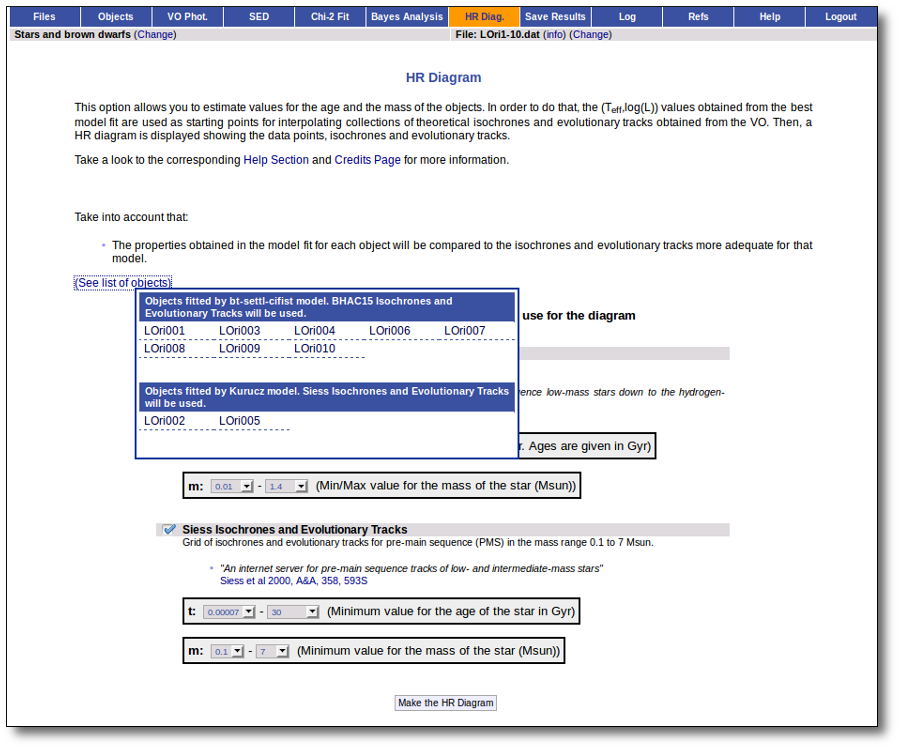

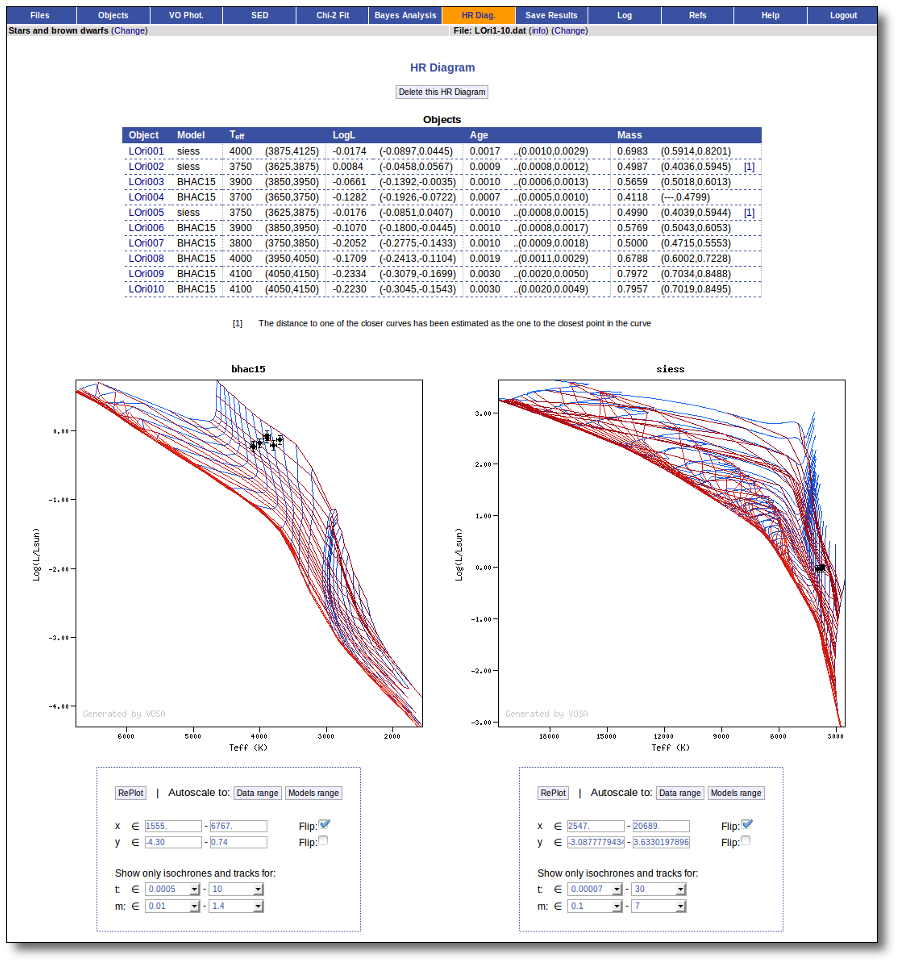

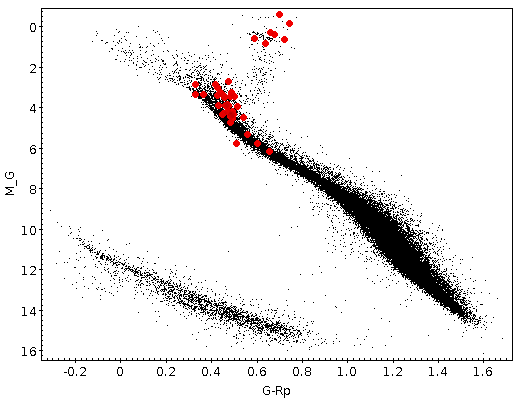

- Generate a Hertzsprung-Russel diagram with the estimated parameters.

- Provide an estimation of the mass and age of each source.

See this documentation in a single page.

This can be useful to print or to search text, but take into account that it is a large page and can be heavy to load for your browser.

Input files

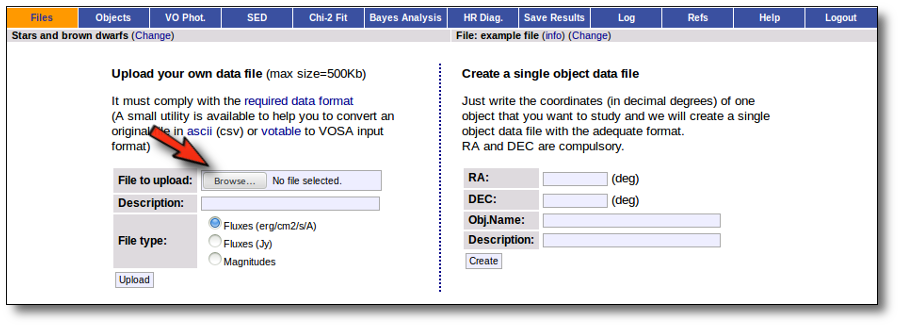

There are two main ways to start working with VOSA:

- Uploading a VOSA-format input file with your data (or selecting a previously uploaded one).

VOSA is mainly designed to work with several objects at the same time so that the same or equivalent operations are performed on all the objects. The information about these objects (and optionally, user photometry data for them) must be uploaded by the user in an input ascii file with an special format.

Please, read carefully how to write an input file in VOSA-format.

- Making a simple search for a single object giving its coordinates.

Using these coordinates VOSA builds an input file and uploads it automatically to the application.

And, at any time, you can select a previously uploaded file and continue with it in the same point where you left the work. Below you can see details about these three options.

Upload data files

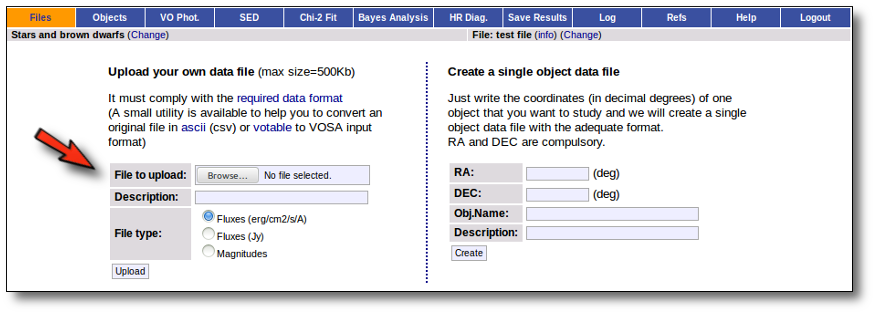

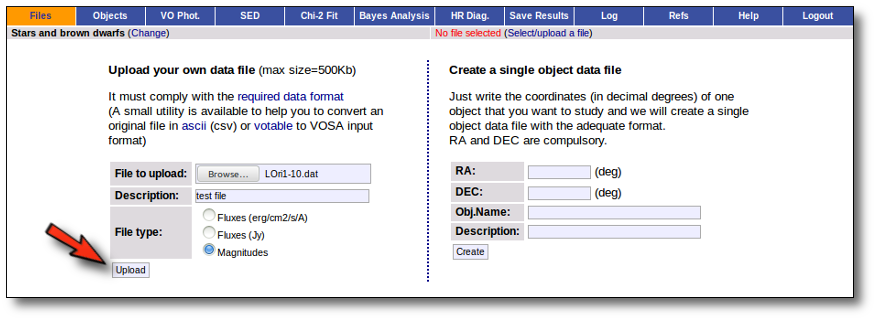

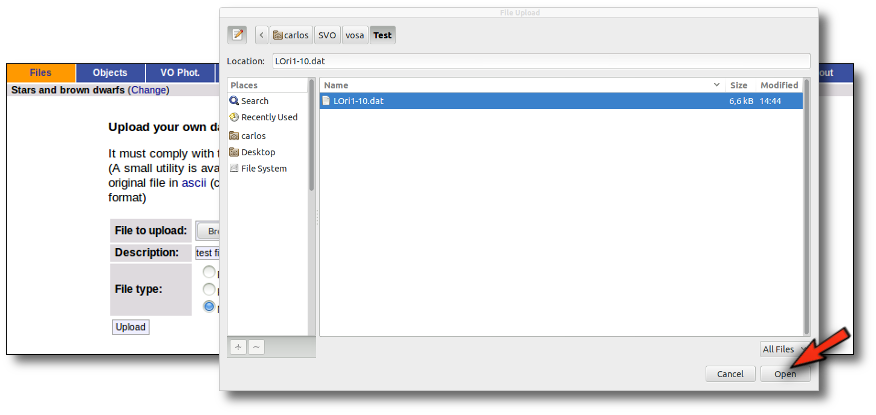

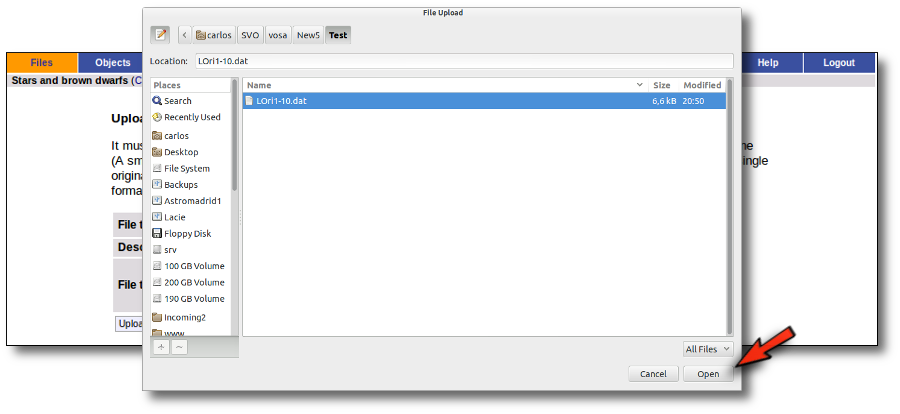

Whenever you click on the "Files" tab, you have the option of uploading a new file.

In order to do that you have to:

- Give a description for the file.

- Specify if the photometric points in your file are expressed as magnitudes or fluxes (in erg/cm2/s/A o Jy).

- Select a file.

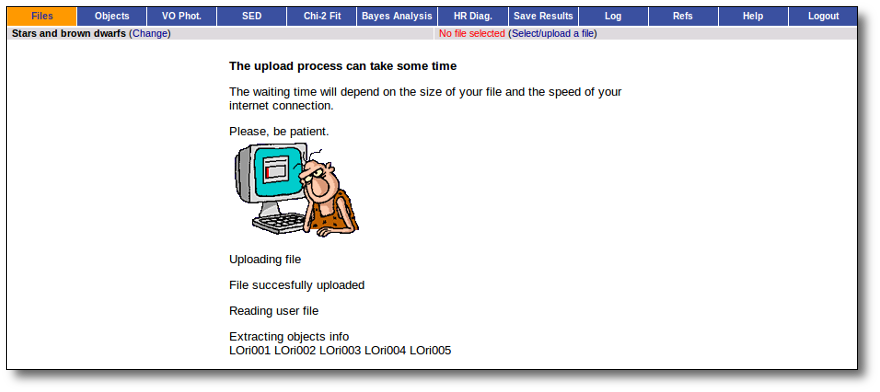

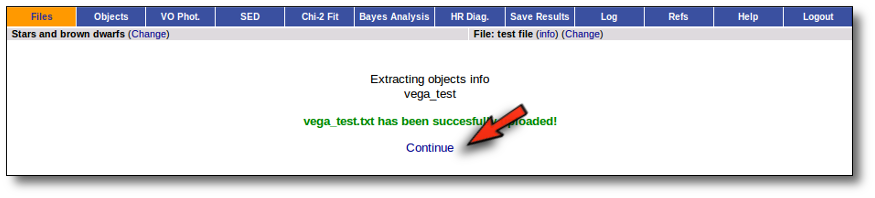

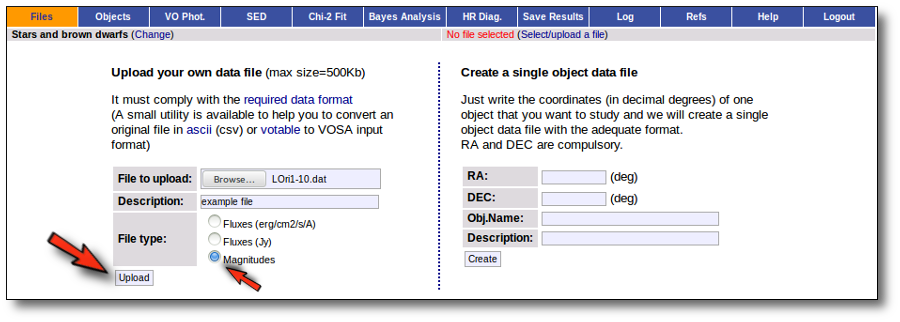

When you click the "Upload" button, your file is transfered to the VOSA server and then it starts been analyzed. This can take a while if the file is large.

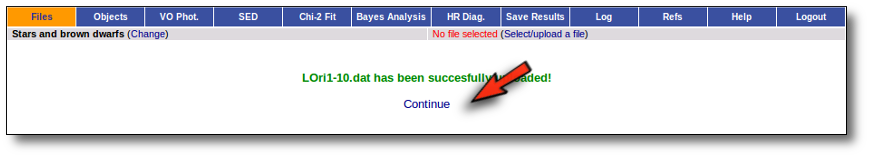

If everything is OK, you will get a message saying so. Please, click in "Continue" to go ahead.

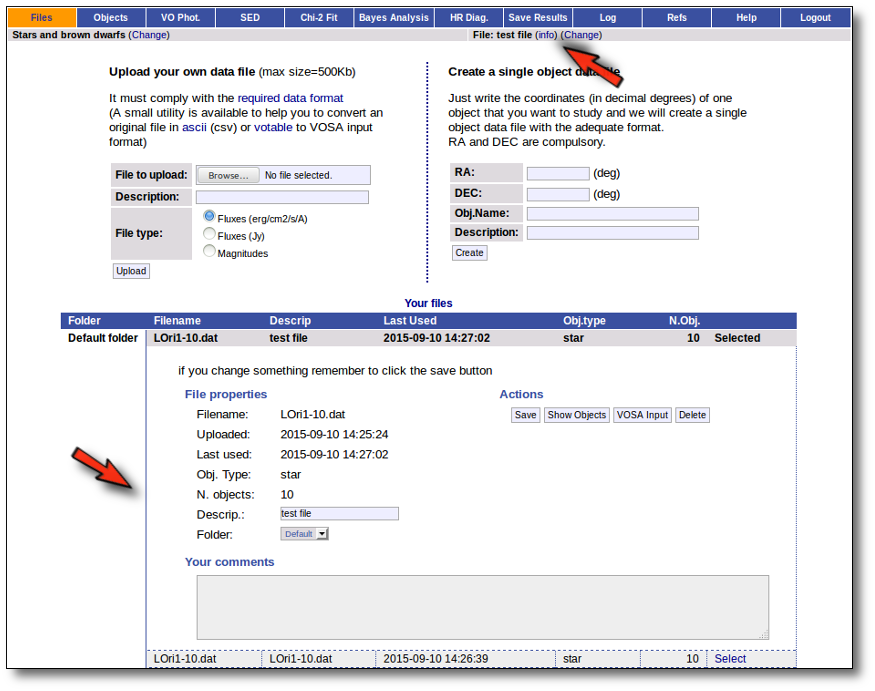

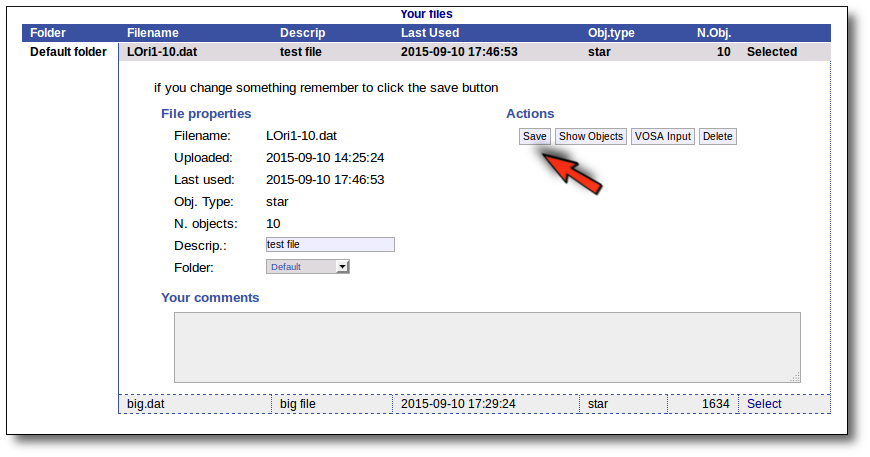

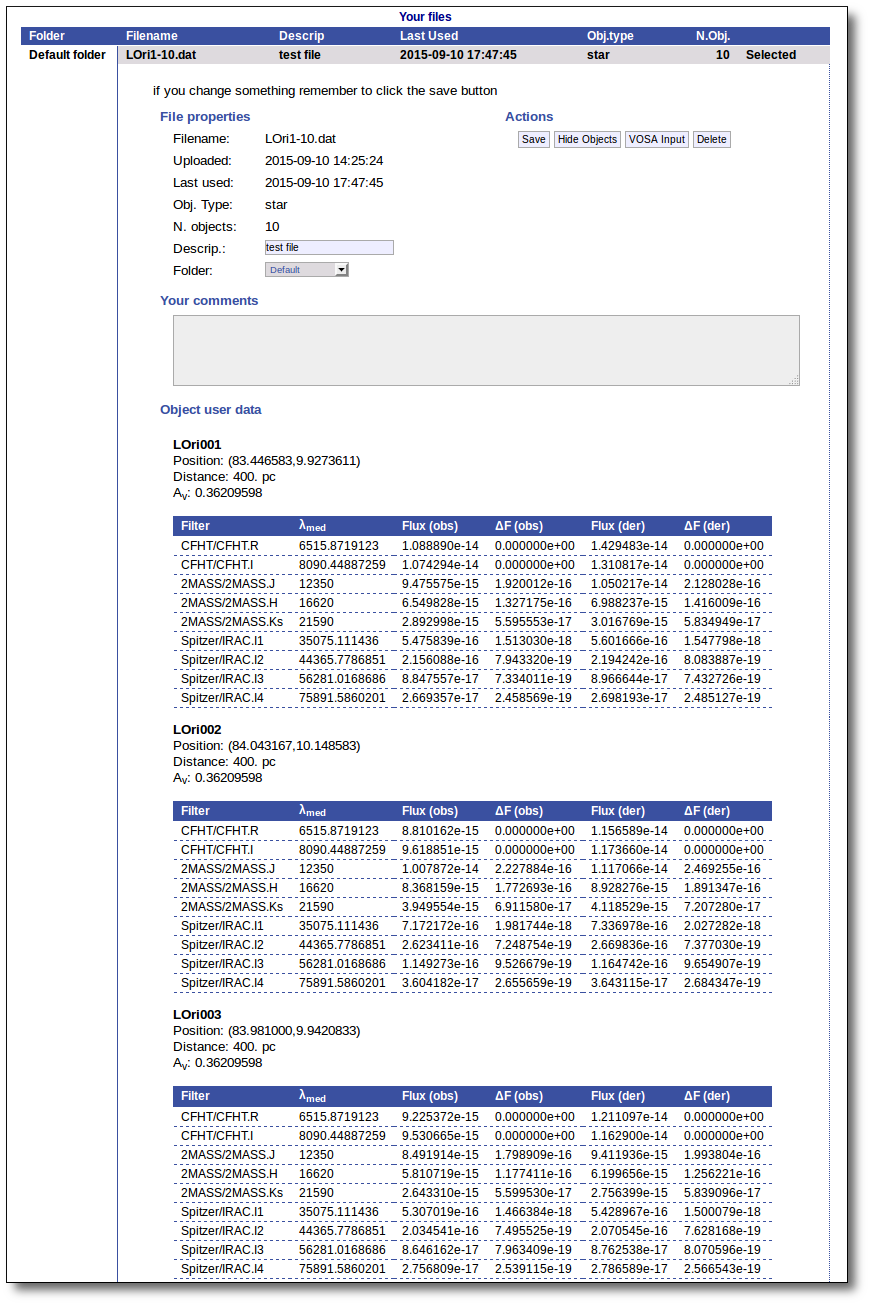

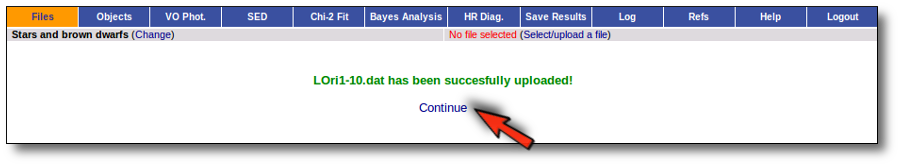

You will go back to the "Files" page. Now you can see the details about the just uploaded file, that will be already available to work with it.

Even if there were not errors detected by VOSA, it is a very good idea to check if the format of your file has been correctly understood. So, please, whenever you upload a new file, click in the "Show Objects" button to see the information that VOSA has save for each object.

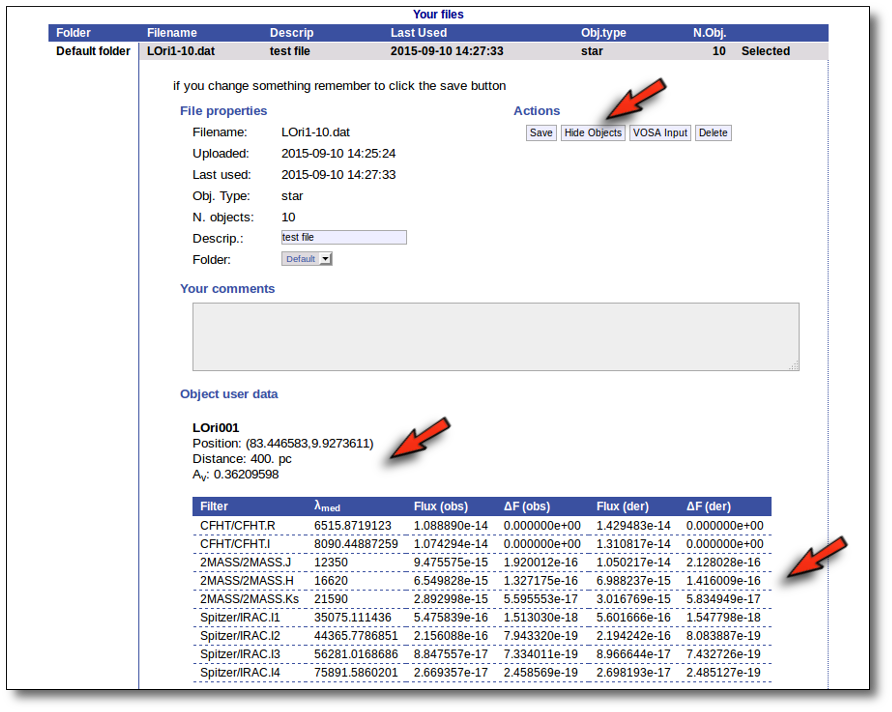

For each object in the file you should see its properties (name, position, extinction, distance...) and its photometric points. See if this is what you expected. If not, delete this file, check your input file and upload it again.

(while you are seeing the objects details, the "Show Objects" button is changed to "Hide Objects": you can use this one to hide the details)

Once the file is uploaded and you have checked that everything is ok, you can go to any of the other tabs in the index above and start working.

VOSA file format

VOSA is mainly designed to work with several objects at the same time so that the same or equivalent operations are performed on all the objects. In order to do this, we have defined a format so that the user can upload the info about these objects together with user photometric data.

Thus, the main way to use vosa is to upload a VOSA input file with this format (or selecting a previously uploaded one).

Nevertheless, we have added the Single Object Search so that you can directly search for a single object using its coordinates. See more information below.

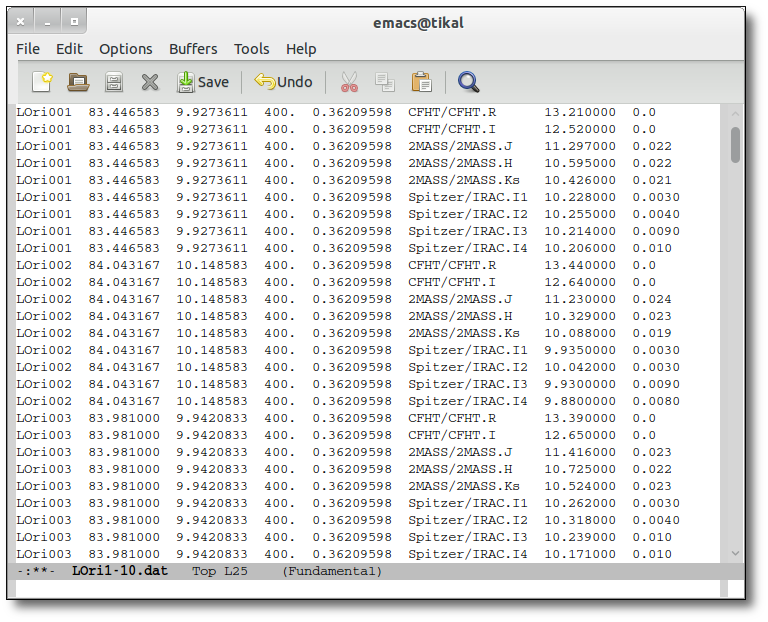

Required input file format

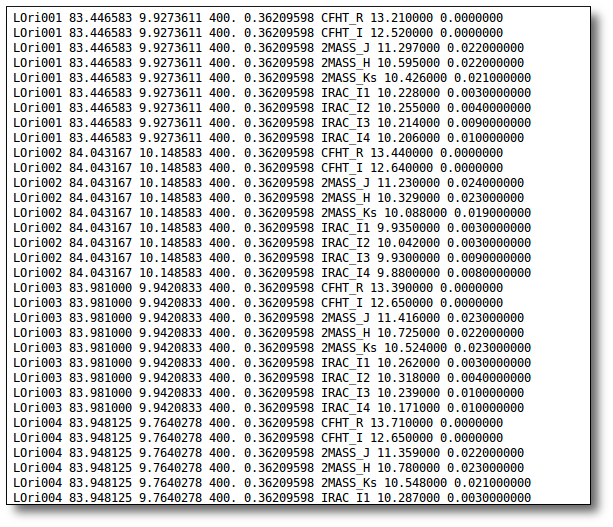

The uploaded file must be an ascii document with a line for each photometric point.

Each line should contain 10 columns:

---------------------------------------------------------------------------- | object | RA | DEC | dis | Av | filter | flux | error | pntopts | objopts | | --- | --- | --- | --- | ---| --- | --- | --- | --- | --- | | --- | --- | --- | --- | ---| --- | --- | --- | --- | --- |

- 1: a one word text label, without spaces or special characters, that corresponds to the object name. See (1).

- 2: the RA, in deg, corresponding to the object in J2000 equinox. See (2).

- 3: the DEC, in deg, corresponding to the object in J2000 equinox. See (2).

- 4: the distance to the object in parsec. See (4).

- 5: the AV parameter defining the extinction. See (5).

- 6: a label corresponding to the name of the filter. It must be in the list of available filters . See (6).

- 7: the flux in erg/cm2/s/A, Jy or magnitude. See (7).

- 8: the observed error in the flux (in erg/cm2/s/A) or magnitude. See (8).

- 9: options specific for this photometric point. See (9).

- 10: options specific for this object (they must be repeated in each line corresponding to the same object). See (10).

Take into account that:

- (1) The only mandatory value is the object name (you can use the real one or some label of your like). The other columns can be writen as '---' (please, don't let them blank nor write '...' instead of '---') if you don't know the right value or don't want to specify it.

- (1) Only alphanumeric characters (letters and numbers) should be used in object names. A very short list of special characters are allowed too, in particular the "_" (underscore) character. Two special "tricks" can be used if necessary:

- Asterisks are forbiden in object names. But if you include the special string "_AST_" in an object name it will be treated as ans asterisk (*) for object name resolution. For instance, if you use EM_AST_SR3 it will be submited to simbad as EM*SR3.

- If you include a "_" character in the object name (not as part of _AST), it will be treated as an space for object name resolution. This can be useful if you are using real object names that contain spaces (for instance, the variable star "R Aql") and you need to use the actual name so that it can be resolved by VOSA using VO services. In a case like that, write "R_Aql" as the object name in the file.

- (2) Coordinates (as accurate as possible) are necessary to obtain photometry from VO catalogues.

If unknown, you can write RA and DEC as '---'. In that case, if you have given the right object name in the first column, you can use the Objects:Coordinates section to find the coordinates for the object using Sesame.

- (4) The distance to the object is necessary to compute the Bolometric Luminosity.

If you don't know the distance, write it as '---' and an assumed distance of 10pc will be used in the calculations.

You can also provide a value for the error in the distance. In order to do that write D+-ΔD (for instance: 100+-20), without spaces (Remember to write both symbols, + and -, together, not a ± symbol or something else; otherwise vosa will not understand the value)

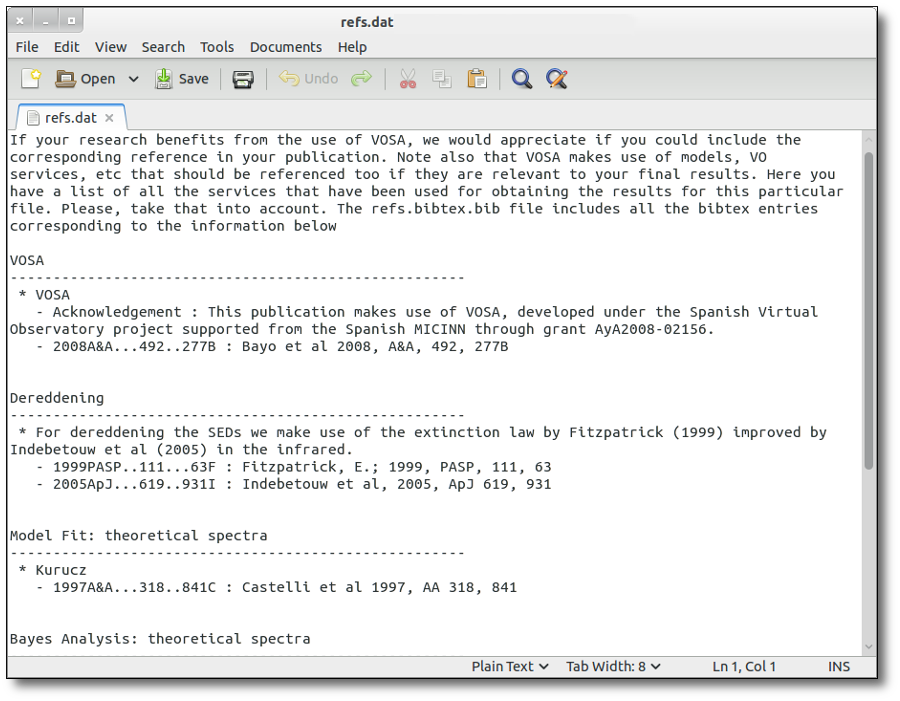

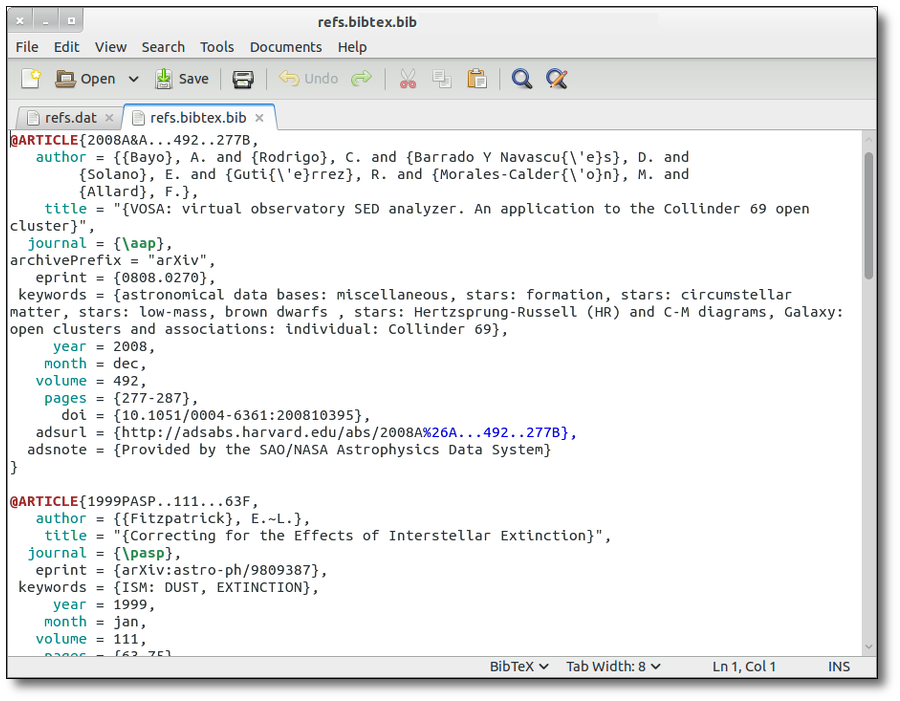

- (5) The value of visual extinction and the the extinction law by Fitzpatrick (1999), improved by Indebetouw et al (2005) in the infrared, are used to deredden the user and VO photometry in a standard way. No reddening correction is applied if Av is set to '---' or zero. Take a look to the corresponding Credits Page for more information.

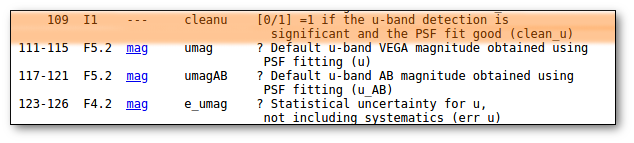

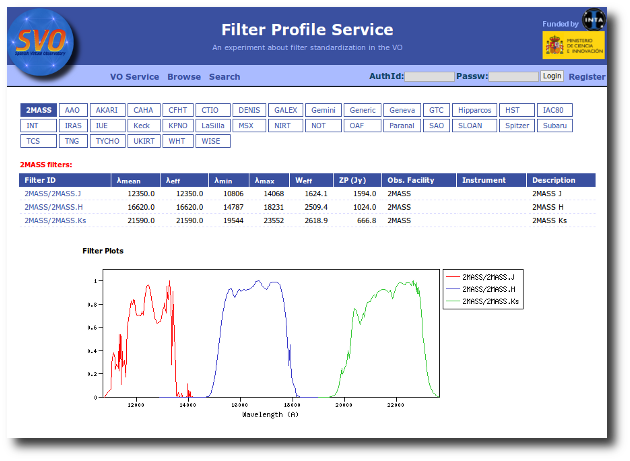

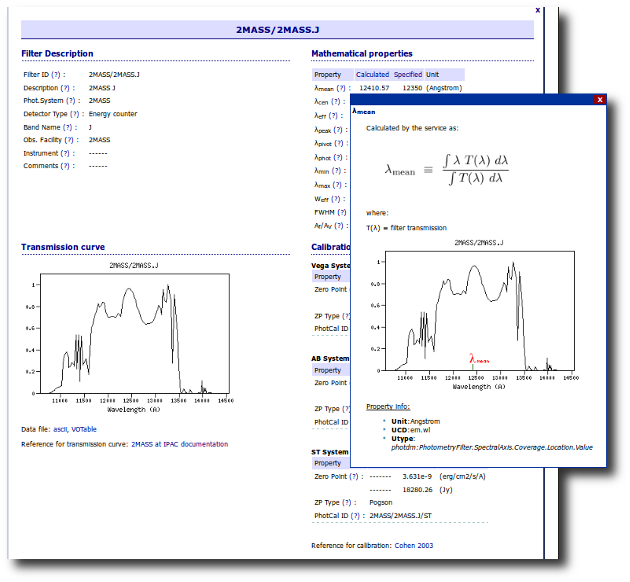

- (6) We use the SVO Filter Profile Service as the source for filter names and properties. Check it to see if the filter corresponding to your observed data is in the list (if not, contact us and we will try to include it).

- (7) If your input file containes magnitudes or fluxes in Jy, please, be careful to mark the corresponding checkbox when uploading the file. If not, we will understand the values as fluxes in erg/cm2/s/A).

- (7) If your data are given in Jy, we will transform them to erg/cm2/s/A using the λ value given by the SVO Filter Profile Service. If you prefer other λ value, please, transform the fluxes to erg/cm2/s/A before uploading your file.

- (7) If your data are given as magnitudes, we will transform them to erg/cm2/s/A using the properties (photometric system, zero point, etc) given by the SVO Filter Profile Service. If you prefer a different transformation, do it yourself and upload the file with fluxes in erg/cm2/s/A.

- (8) Errors must be specified in the same units as the fluxes (or magnitudes).

- (9) You can specify certain options for each photometric point including a special keyword in this column. By now these options are available:

- "---" : nothing special for this point.

- "nofit" : this point will be included in the SED and in all the plots. But it will NOT be used for the fit.

- "uplim" : this point will be considered an upper limit.

- "mag" : the flux and error included in columns 7 and 8 are given as magnitudes.

- "erg" : the flux and error included in columns 7 and 8 are expressed are given in erg/cm2/s/A.

- "jy" : the flux and error included in columns 7 and 8 are expressed are given in Jy.

These three last options can be mixed in the file. If "mag","erg" or "jy" is included for one point, this point will we handled accordingly even though the global "file type" that you choose to upload is different. If you don't specify one of these options for one point, the file type will be used as default.

- (10) You can specify certain options for each object including a special keyword in this column. By now these options are available:

- "---" : nothing special for this object.

- "Av:av_min/av_max" : Range of values for Av. If you give a range here, the visual extintion will be considered as an additional parameter in model fit, bayes analysis and template fit. See the corresponding section for details.

- "Veil:value" : value in Angstroms so that photometric points with smaller wavelength will be considered to present UV/blue excess.

- "excfil:FilterName" : name of the filter where infrared excess starts for this object.

In the future, other options could be implemented.

Please, check in advance that your file conforms to these requirements. Next, after uploading it, you can try to see the analyzed contents of the file in "Upload files → Show". If what you see does not correspond to what you expect it will probably mean that there is something wrong in your data file. Delete it from the system, try to correct the mistake and upload it again.

Examples of valid files

1.- A complete file

Obj1 19.5 23.2 80 1.2 DENIS/DENIS_I 5.374863e-16 4.950433e-19 --- Av:0.5/5.5 Obj1 19.5 23.2 80 1.2 CAHA/Omega2000_Ks 2.121015e-16 1.953527e-19 --- Av:0.5/5.5 Obj1 19.5 23.2 80 1.2 Spitzer/MIPS_M1 6.861148e-15 1.390352e-16 nofit Av:0.5/5.5 Obj2 18.1 -13.2 80 1.2 WHT/INGRID_H 1.082924e-14 2.194453e-16 --- --- Obj2 18.1 -13.2 80 1.2 2MASS/2MASS_J 2.483698e-17 2.287603e-19 --- ---

In this file we have two different objects, their positions (RA and DEC), the distance to the objects, the AV parameter and some values of the photometry (three for Obj1 and two for Obj2). For the first object, the MIPS_M1 will not be used for the fit, and Av will be considered as a fit parameters with values from 0.5 to 5.5

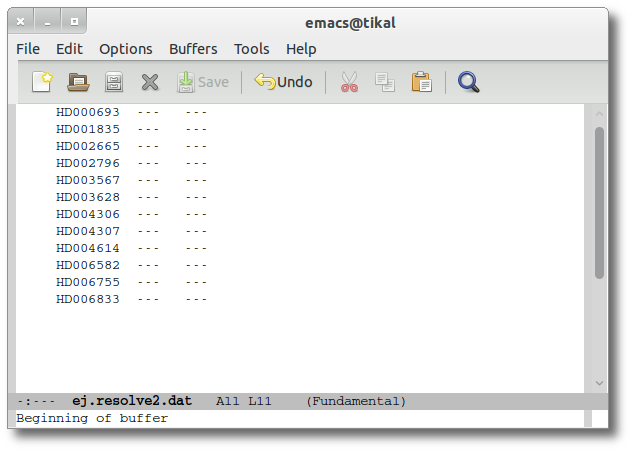

2.-Only object names

BD+292091 --- --- --- --- --- --- --- --- --- HD000693 --- --- --- --- --- --- --- --- --- HD001835 --- --- --- --- --- --- --- --- ---

This file is also correct, and although we have little information in it, VOSA can try to find some more data about these objects so that the analysis can be performed. Assuming that the names for the three objects are the real ones, we can try to find these objects coordinates. Then, using these coordinates, some observed photometry could be retrieved from VO catalogues, and so on.

2.-A mixed case

#objname RA DEC DIS Av Filter Flux Error PntOpts ObjOpts #======= === ======= === === =============== ================== ================= ======= ======= BD+292091 --- --- --- --- 2MASS/2MASS_J 7.14724167946E-14 5.14601400921E-16 --- --- BD+292091 --- --- --- --- 2MASS/2MASS_H 3.69142119547E-14 2.3625095651E-16 --- --- Obj2 18.1 -13.2 80 1.2 DENIS/DENIS_I 1.082924e-14 2.194453e-16 --- --- Obj2 18.1 -13.2 80 1.2 2MASS/2MASS_J 2.483698e-17 2.287603e-19 --- --- HD000693 2.81 -15.467 --- --- --- --- --- --- --- HD001835 --- --- --- 1.4 --- --- --- --- --- Obj3 19.5 23.2 80 1.2 Omega2000_Ks 2.121015e-16 1.953527e-19 --- --- Obj3 19.5 23.2 80 1.2 Spitzer/MIPS_M1 6.861148e-15 1.390352e-16 --- --- HD003567 --- --- --- --- --- --- --- --- ---

You can combine in the same file objects with different type of information. Just keep in mind that each line must have 10 columns and, when you want to leave a data blank, you must write it as '---'.

And remember that the different columns can be separated by blanks or tabs or any combination of them. For instance, this next example would be completely equivalent to the previous one:

BD+292091 --- --- --- --- 2MASS/2MASS_J 7.14724167946E-14 5.14601400921E-16 --- --- BD+292091 --- --- --- --- 2MASS/2MASS_H 3.69142119547E-14 2.3625095651E-16 --- --- Obj2 18.1 -13.2 80 1.2 DENIS/DENIS_I 1.082924e-14 2.194453e-16 --- --- Obj2 18.1 -13.2 80 1.2 2MASS/2MASS_J 2.483698e-17 2.287603e-19 --- --- HD000693 2.81 -15.467 --- --- --- --- --- --- --- HD001835 --- --- --- 1.4 --- --- --- --- --- Obj3 19.5 23.2 80 1.2 Omega2000_Ks 2.121015e-16 1.953527e-19 --- --- Obj3 19.5 23.2 80 1.2 Spitzer/MIPS_M1 6.861148e-15 1.390352e-16 --- --- HD003567 --- --- --- --- --- --- --- --- ---

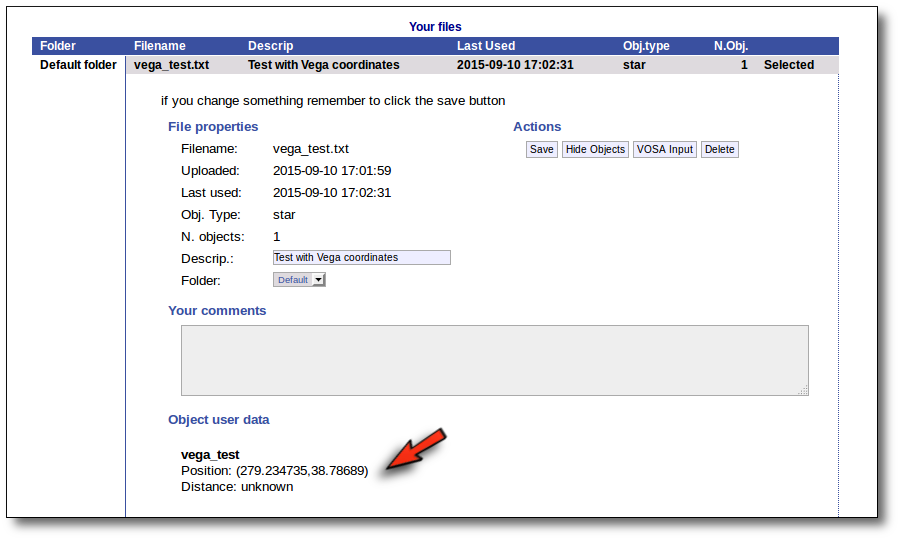

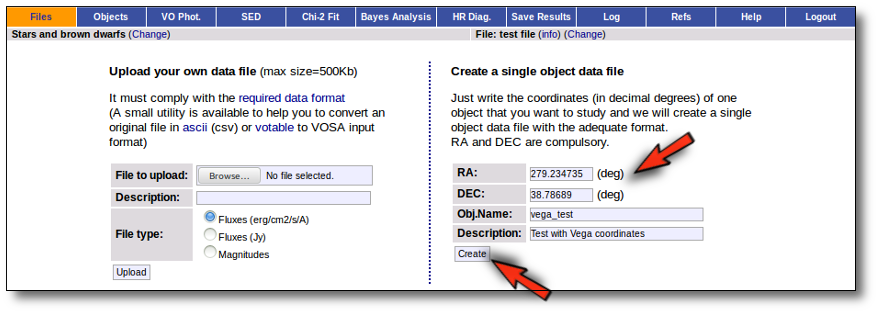

Single object search

In the case that you only want to work with a single object (or you just want to test how VOSA works) you don't need to build a input file.

You only need to specify the RA and DEC (in decimal degrees) of your objects. The object name and description are optional (if you leave any of them blank VOSA will fill them using the information in the other fields).

With those coordinates VOSA builds a very simple input file that is saved in your Default folder and you can then work with it, use VO catalogues to find out information or photometry for that object and then try to fit the observed SED with theoretical models.

Example

You only need to specify the RA and DEC (in decimal degrees) of your objects. The object name and description are optional (if you leave any of them blank VOSA will fill them using the information in the other fields).

With this information VOSA will make a very simple "VOSA input file" and it will be loaded automatically.

From then on, you will work with this file as with any other vosa file.

Just remember that the only information that we have for this object now is its coordinates. You will need, at least, to search for photometric data in VO catalogues using the "VO Phot." tab.

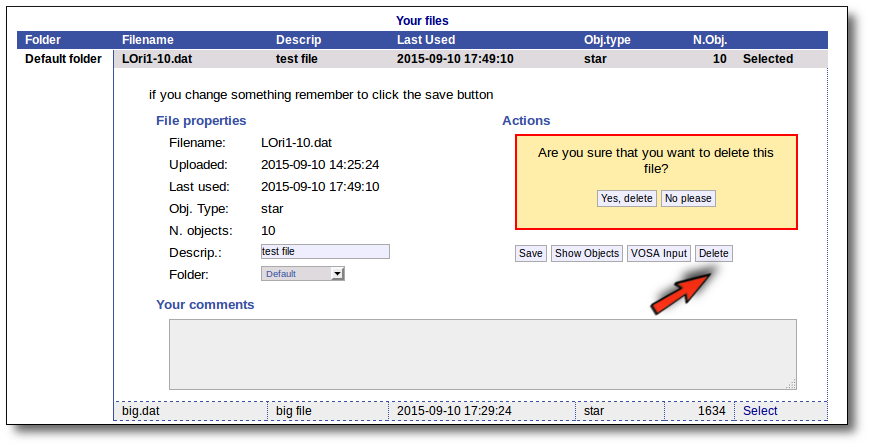

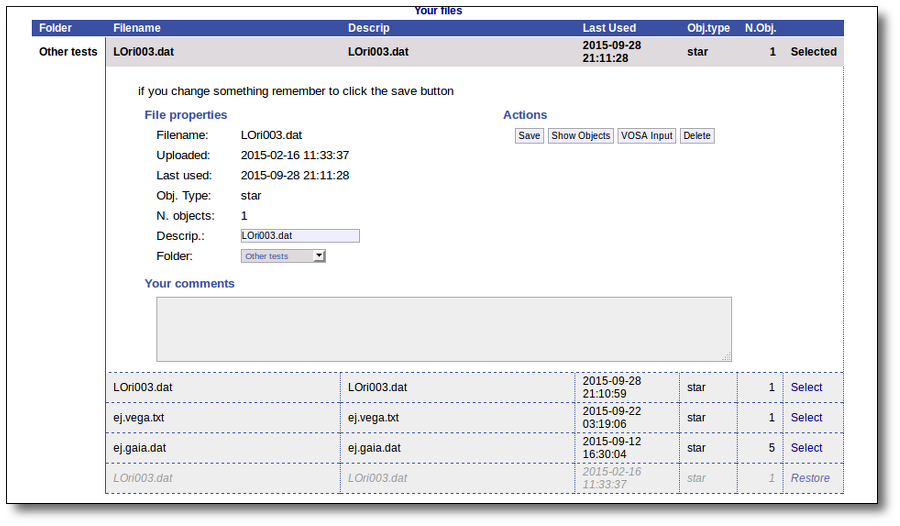

Managing your files

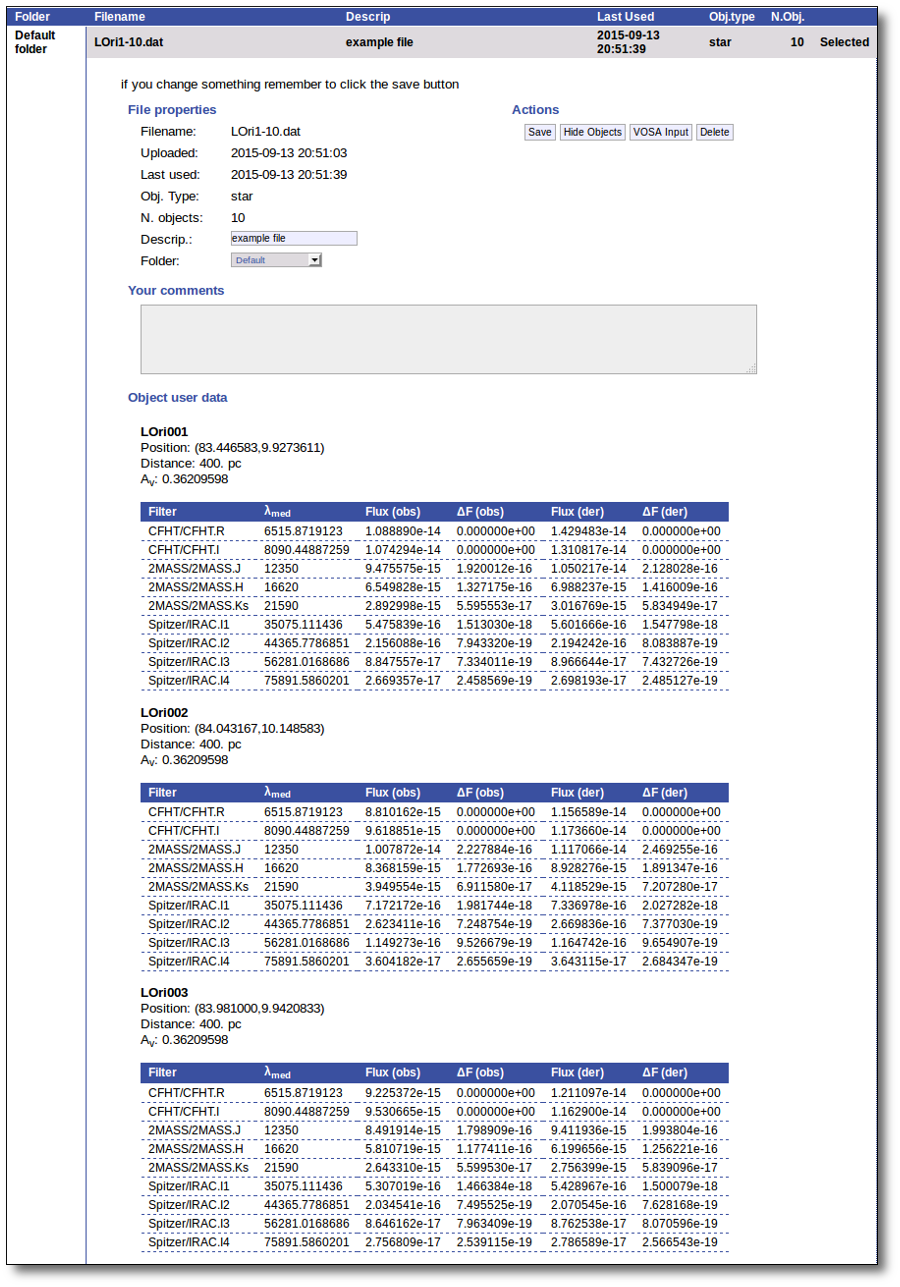

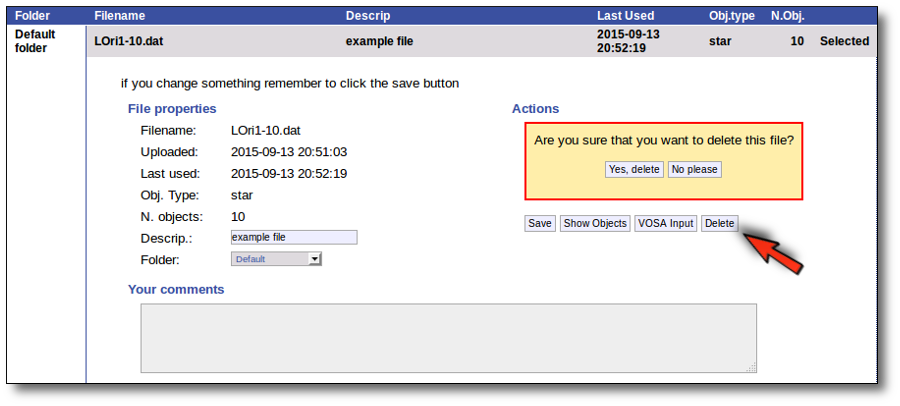

All the files that you upload to VOSA will be shown in the "Files" page.

You can organize them using folders. In the form in the bottom you can create folders as you like (or rename them).

To start working with VOSA You need to select one of the files.

For the selected file you can also:

- Edit the file description.

- Move it to another folder.

- Add comments (maybe to remember something about this file in the future).

In order to do that, you just need to edit that information in the form and click the "Save" button.

Click the "VOSA Input" button to recover the VOSA Input file that you first uploaded (you will get the same ascii file).

Click the "Show Objects" button to see the info about the objects in the file. Remember to do this after uploading the file to check that all the info has been understood by VOSA properly.

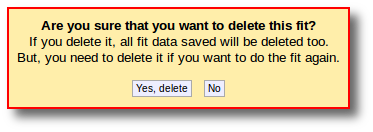

Click the "Delete" button to delete the file from VOSA (all the information about it will be lost). You will be asked for confirmation.

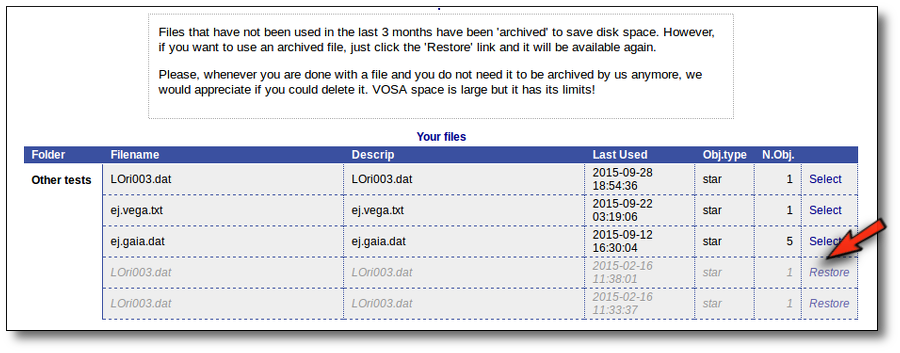

Archived files/Restore

Every file that you upload into VOSA is keeped in our server together with all the information related to every action that you do to the objects in that file (photometry, fit results, plots, etc.). You can come later and continue your work on any of your files at the point where you left it.

But if you haven't done any action on a file for 3 months we understand that you are not doing an active work on it and you do not really need it to be so easily accesible.

Thus, we archive files that have not been used in the last 3 months to save VOSA disk space and maintanaince.

Those files will be displayed in a different style in VOSA and you will not be able to select them directly.

But if you really want to use that file again, you can click in the "Restore" link. VOSA will recover all the content so that you can work with it again.

The process will be almost inmediate for small files but could take a while if your file is big.

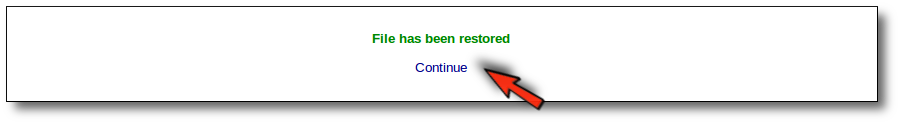

When everythink is ready you will see a message.

And when you click the "Continue" link, the content of your file will be available again.

In any case, please, whenever you are done with a file and you do not need it to be archived by us anymore, we would appreciate if you could delete it. VOSA space is large but it has its limits!

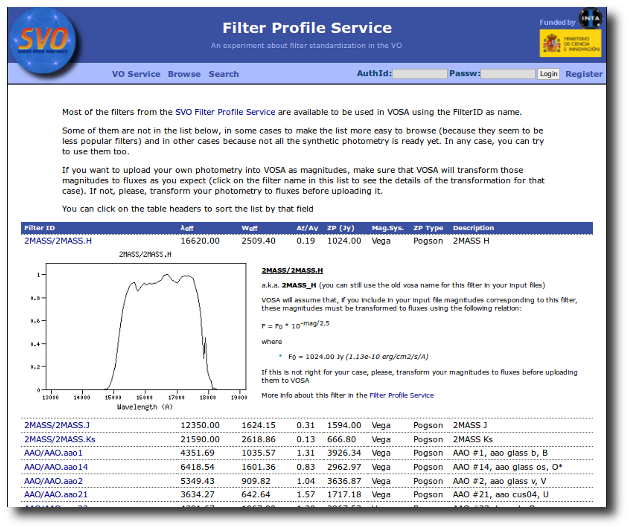

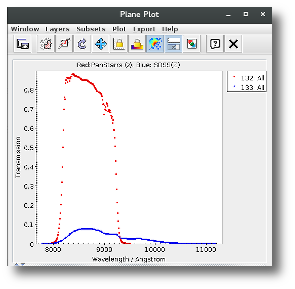

Available Filters

Most of the filters from the SVO Filter Profile Service are available to be used in VOSA using the FilterID as name.

Please, check the Filter Profile Service for details. The link will open in a different window.

The filter properties are used by VOSA in a number of ways.

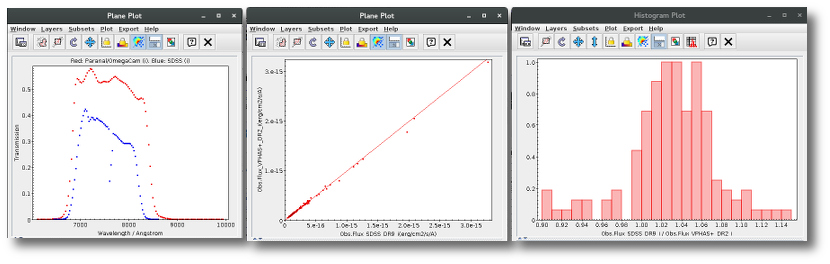

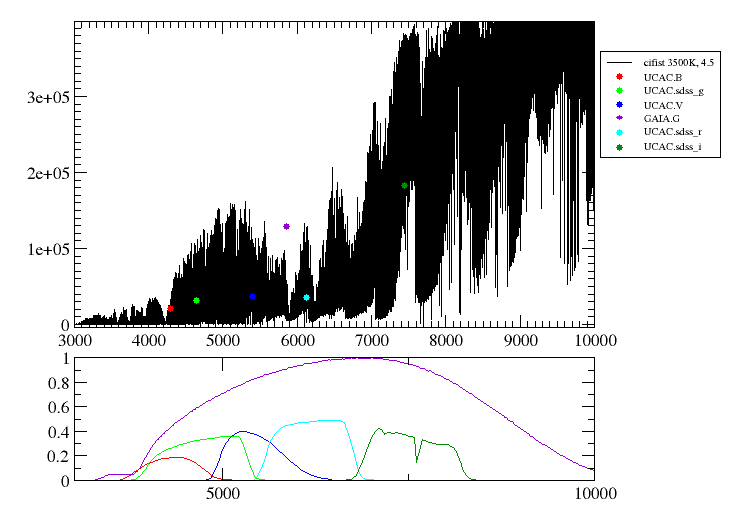

- The filter transmission curve is used to calculate the synthetic photometry for each of the available theoretical models or observational templates. This synthetic photometry is what will be compared to the observed photometry in the model fit or bayes analysis.

- The λeff will be used as the nominal value for the wavelength corresponding to the photometric point. This wavelength is used as follows:

- As the x coordinate for the different plots showing the object (or model) SED.

- To transform from Jy to erg/cm2/s/A if the original photometric values are given in Jy (either in your input file or in photometry coming from VO catalogs)

- Indirectly, the Af/AV value given by the FPS is calculated for this value of the filter wavelength. Thus, it has some effect in the deredenning.

- The zero point is used to transform magnitudes to fluxes if the original photometric values are given as magnitudes (either in your input file or in photometry coming from VO catalogs). In that case, the corresponding magnitud system will be taken also into account.

The link above shows a summary on how VOSA will use the filter properties. You can click on any filter name to see more details and you can also use the table column titles to sort the table using that field.

Besides that, you can access the full information in the Filter Profile Service using the "Browse" or "Search" links in the top menu. You can see a summary of all the filters in a given "family" (instrument, mission, survey, generic...) or click in any filter to see more details on the filter properties and how they are calculated by the service or where they were found in the literature.

Objects

There are some object properties that are important in order to be able to use all the potential of VOSA and get reliable results.

- Object coordinates are necessary to be able to make VO searches (for properties or photometry). Most operations in which we search for information in VO catalogs have to be made in terms of the object coordinates (and some search radius around them). If the coordinates are not available, you won't be able to search the VO.

- Object distance is necessary to be able to calculate bolumetric luminosities from the model fit results and then use these luminosities in the HR diagram to try to get estimation of the mass and age.

- The value of the visual extinction, Av, is necessary to deredden the observed photometry.

You can upload all this information in your Input file if you know it, but VOSA also can help to find values for these object properties searching in VO catalogs.

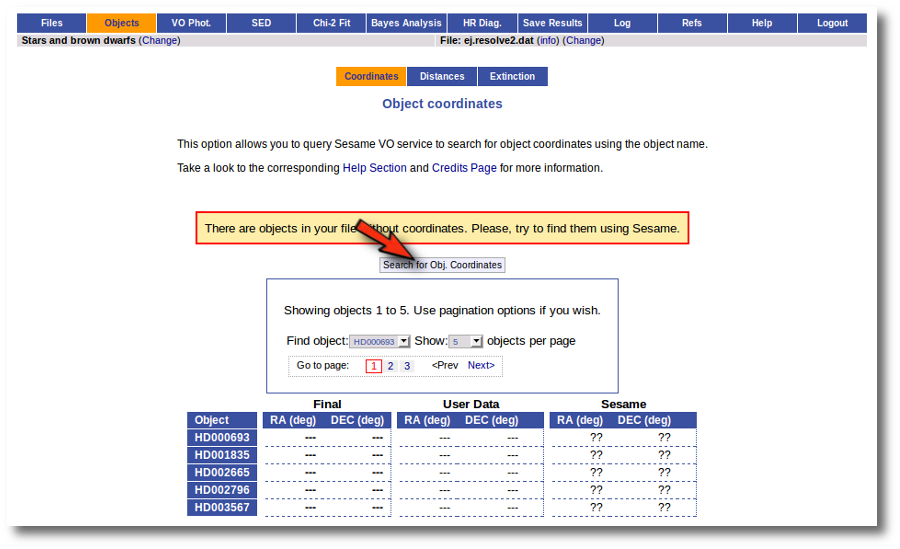

Object coordinates

VOSA offers the possibility of finding the coordinates of the objects in your user file.

Having the right coordinates for each object is necessary if you want to be able to search in VO services for object properties (distance, extinction) or photometry.

In order to do this, the object name is used to query the Sesame VO service.

Then you can choose to incorporate the found coordinates (if any) into your final data or not.

Take into account that this only will give proper results if the object name given in the user file is the real one. Otherwise either you will find nothing or the obtained coordinates will not have anything to do with the real ones and, if they are used to search for catalogue photometry, the obtained values (if any) will not really correspond to the object under consideration.

Two examples

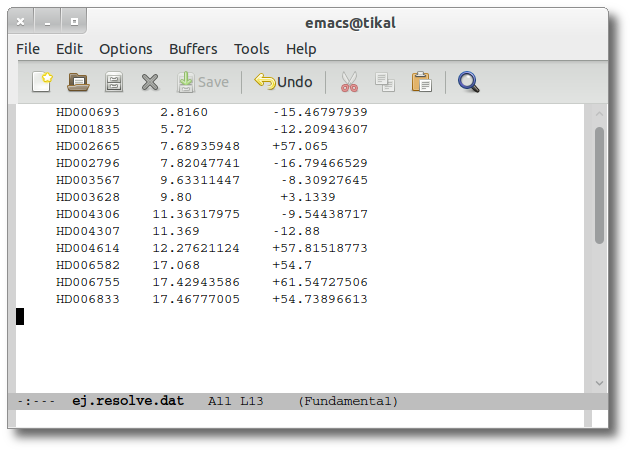

We upload a very simple file with some object names and no coordinates.

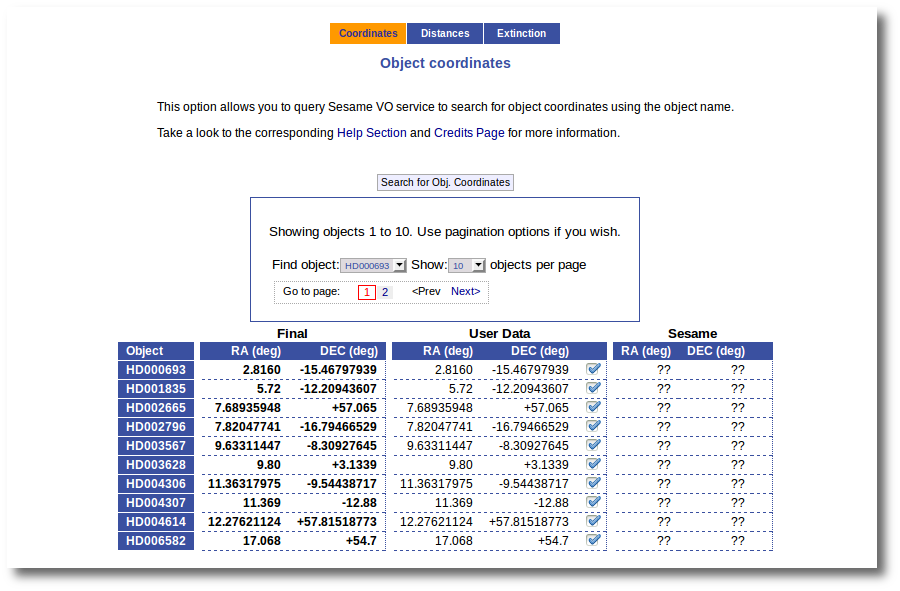

So the first thing that we do is clicking the button to "Search for Obj. Coordinates".

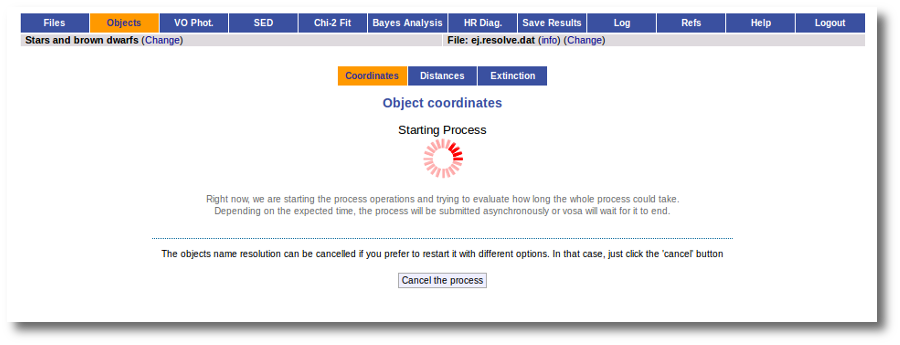

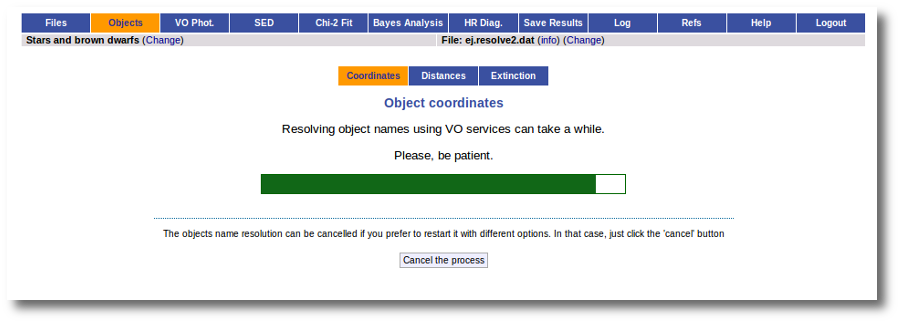

When you click the search button, VOSA starts the operation of querying Sesame for coordinates.

This search is performed asynchronously so that you don't need to stay in front of the computer waiting for the search results. You can close your browser and come back later. If the search is not finished, VOSA will give you some estimation of the status of the operation and the remaining time.

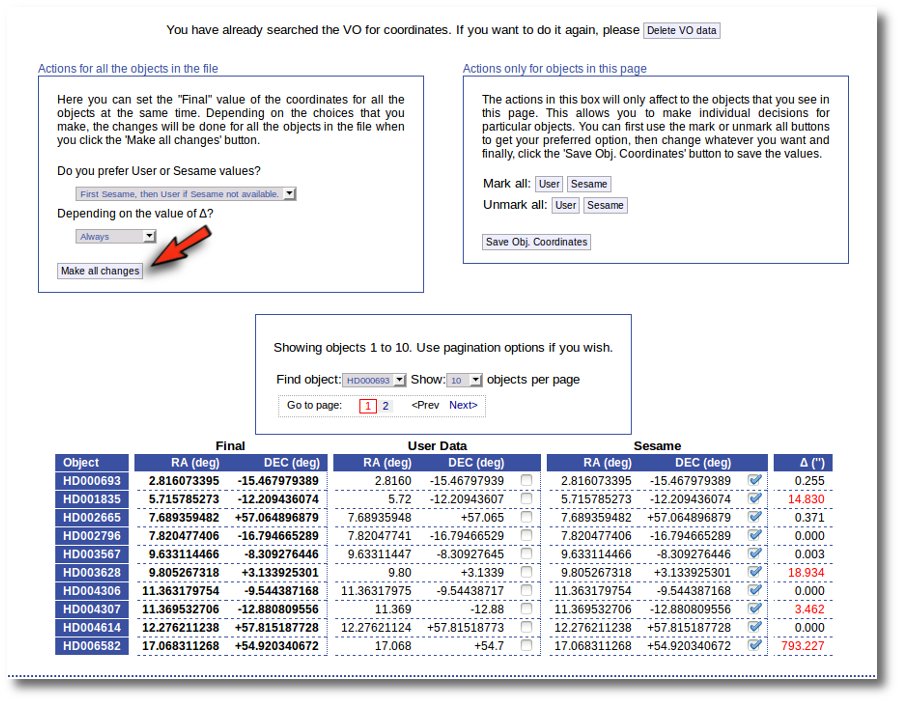

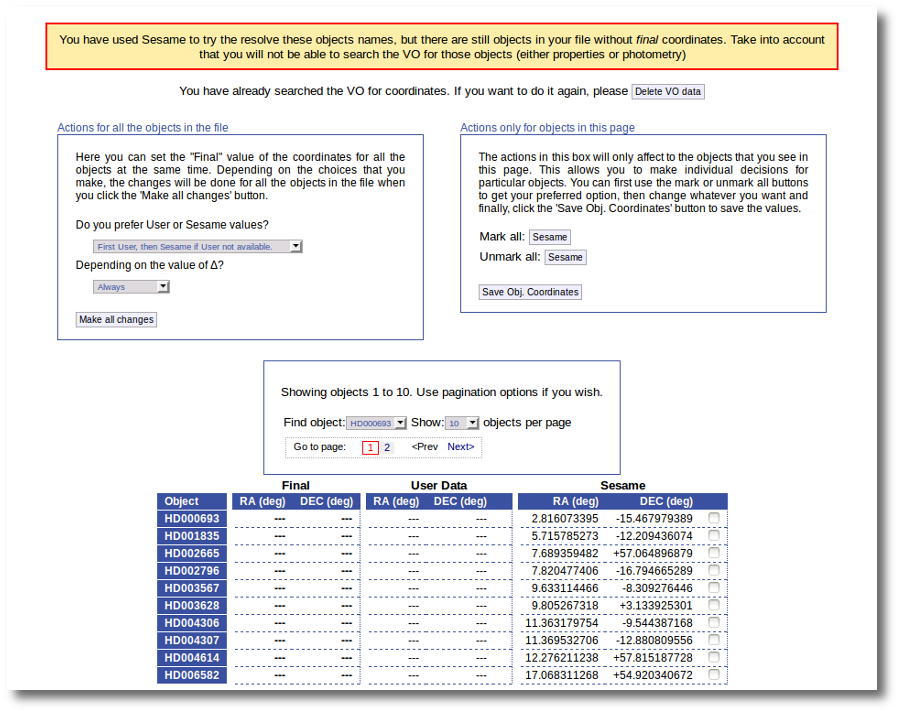

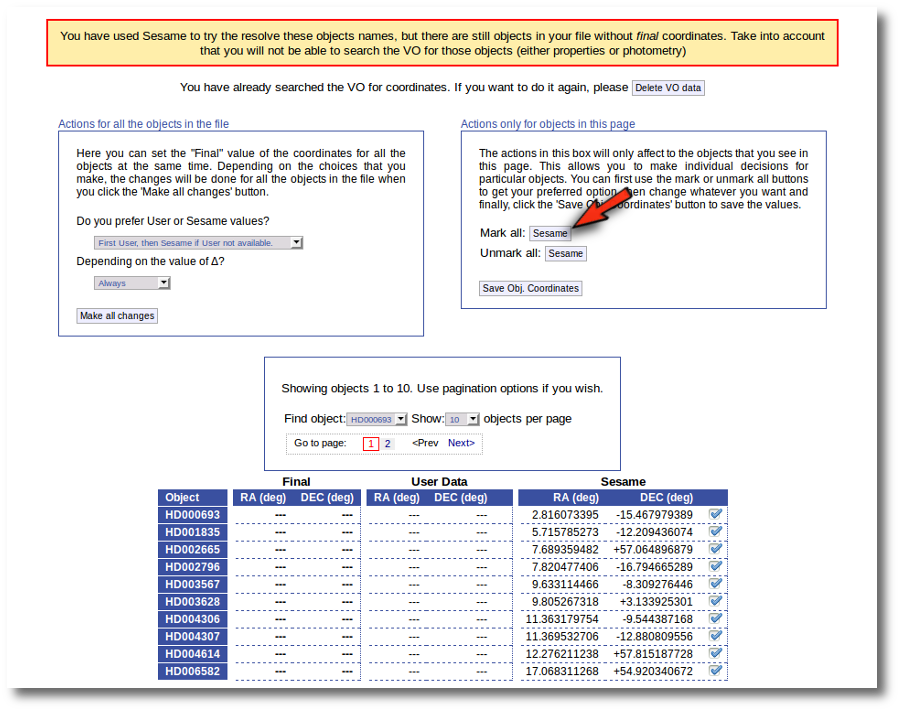

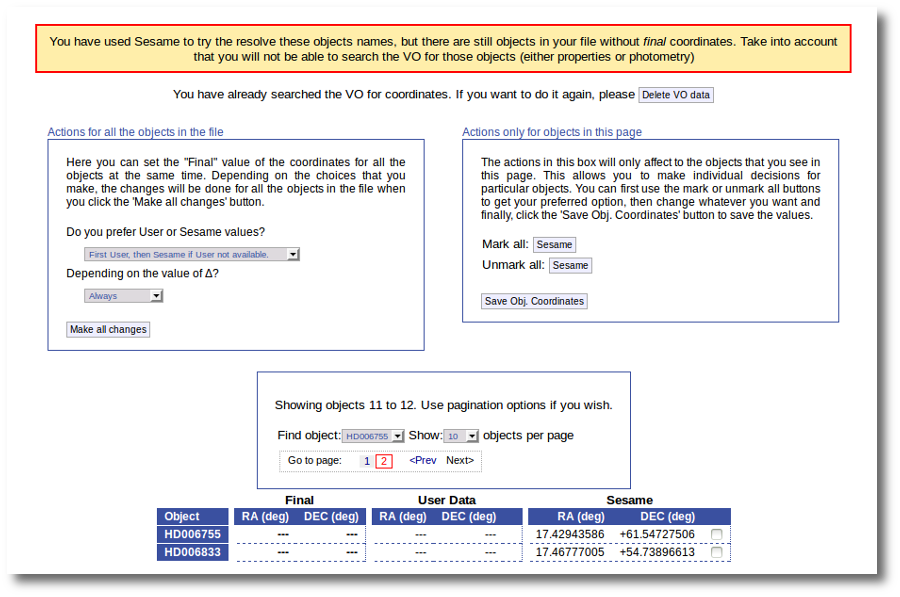

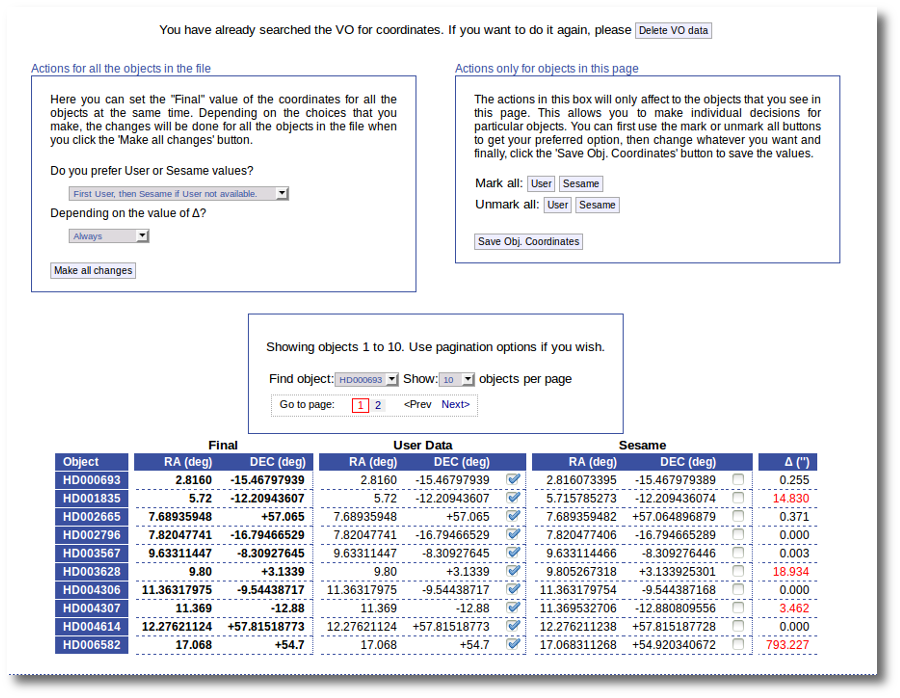

When the search is finished VOSA shows you the data obtained from Sesame, but these coordinates are not incorporated to the final data yet.

You have two different forms available. The one on the left allows to save data for all the objects in the file with a single click. The one on the right is useful to mark/save data corresponding ONLY to those objects that are displayed in the current page (not doing anything to objects in other pages, when there are many objects).

In this example we are going to use the form on the right.

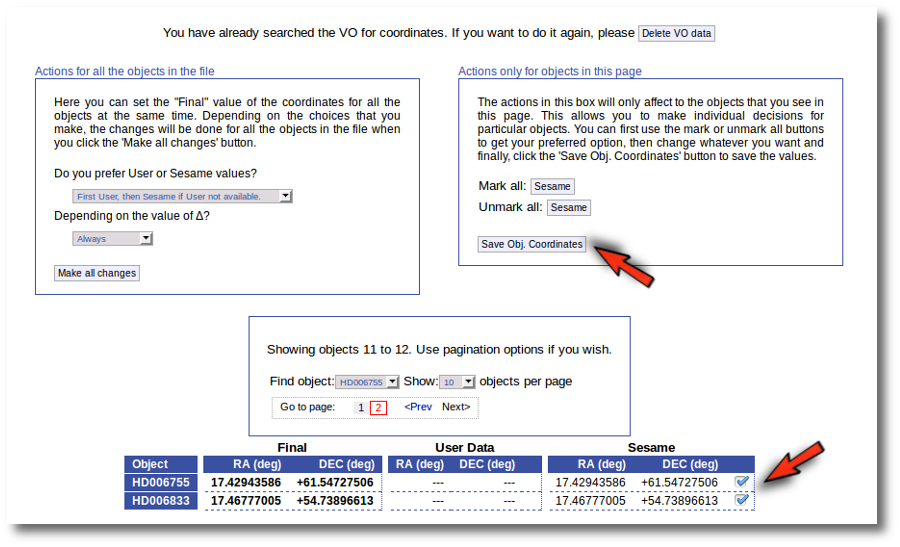

First we click the "Sesame" button so that all the values coming from Sesame are selected.

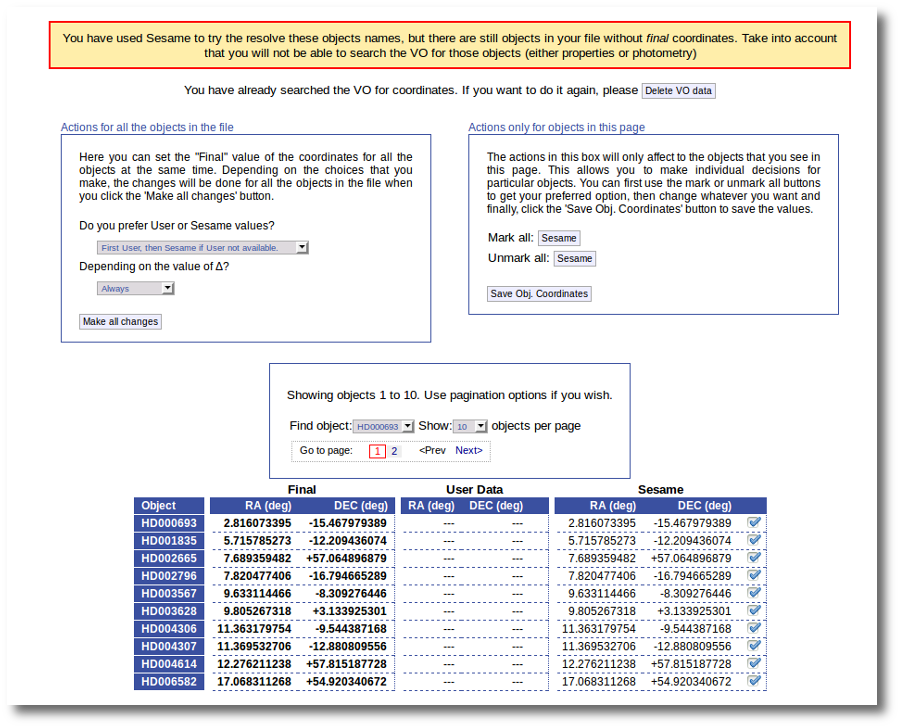

Then, we click the "Save Obj. Coordinates" so that the marked values get saved.

But we still see the warning saying that there are some objects without coordinates!

If we use the pagination form to go to that page we see that we haven't saved the distance for those objects yet.

In this case we just mark those two Sesame value by hand and click the "Save Obj. Coordinates" again.

Now he have the coordinates for all the objects in the file.

As a second example, we upload a file with the same objects but including RA and DEC values.

We can see the user values already selected and saved as final values.

We could stop here, but we want to check these coordinates comparing them with what we find in Sesame.

Thus, we click the "Search for Obj. Coordinates" button, we wait for the process to finish and we see, side by side, both user coordinates and Sesame values.

An extra column shows the difference, in arcsec, from the user coordinates and the sesame ones. This difference is shown in red when the difference is bigger than 1'' so that it is easier for you to discover suspicious cases.

In this example we use directly the form on the left. We select the option to use Sesame values values when available and to use them always. We click the "Make ail changes" button and Sesame values are directly saved as final for all the objects in the file.

Object distance

The distances to the objects are used by VOSA to transform the total fluxes given by the 'model fit' into bolumetric luminosities as:

Lbol = 4πD2 Ftot

If you don't give a value for the distance, VOSA will assume it to be 10pc to calculate the Luminosity.

If you don't care about the final luminosities and you don't intend to make an HR diagram, you can forget about distances and write them as "---" in your input file.

Distance errors

You can also provide a value for the error in the distance in your input file. In order to do that write D+-ΔD (for instance: 100+-20), without spaces, in the fourth column of your input file. See below for an example. (Remember to write both symbols, + and -, together, not a ± symbol or something else; otherwise vosa will not understand the value).

ΔD will be propagated as a component of ΔL as follows:

ΔLbol (from D) = Ftot * 2ΔD/D

If you don't give a value for ΔD or you don't find one in the VO, it will be zero. This will imply very small errors in ΔL as only errors coming from the observed fluxes will be considered.

VO search

VOSA offers the possibility of searching for the distance of the objects in VO catalogs.

In order to do this, the object coordinates are used to query some VO services (like Hipparcos catalog) to find observed parallaxes. Thus, object coordinates must be known (either provided in your input file or obtained in the Objects:Coordinates tab) if you want to search the VO for information about distances,

Take into account that the tool queries VO services using the object coordinates and returns the closer object to those coordinates, within the search radius, for each catalog. It could happen that the obtained information corresponds to a different object if the desired one is not in the catalog. In that case, the obtained distance could be erroneous because it corresponds to a different object. So, please, check the coordinates given by the catalog for each object to see if they seem to be the appropriate ones (within the catalog precision) before using the obtained values.

VOSA marks as "doubtful" those values found in catalogs so that the observed error is bigger than 10% of the parallax. It has been shown that for bigger errors the estimation of the distance from the parallax is biased (See Brown et al 1997). These values will be shown in red so that you are easily aware of large uncertainties.

The user can choose to incorporate the found distance (if any) into the final data or not. This decision can be taken in two different ways:

- Object by object. You can give user values for the distance (and error) for some particular objects, choose the value (user or VO catalogs) object by object, and just save those values.

- Specifying global criteria that will be applied to all the objects in the user file (which is specially useful for large files with many objects). Criteria are based on two main ideas: (1) what catalog you prefer to use and (2) the uncertainties for the distance values. See below for details.

Take a look to the corresponding Credits Page for more information about the VO catalogs used by VOSA.

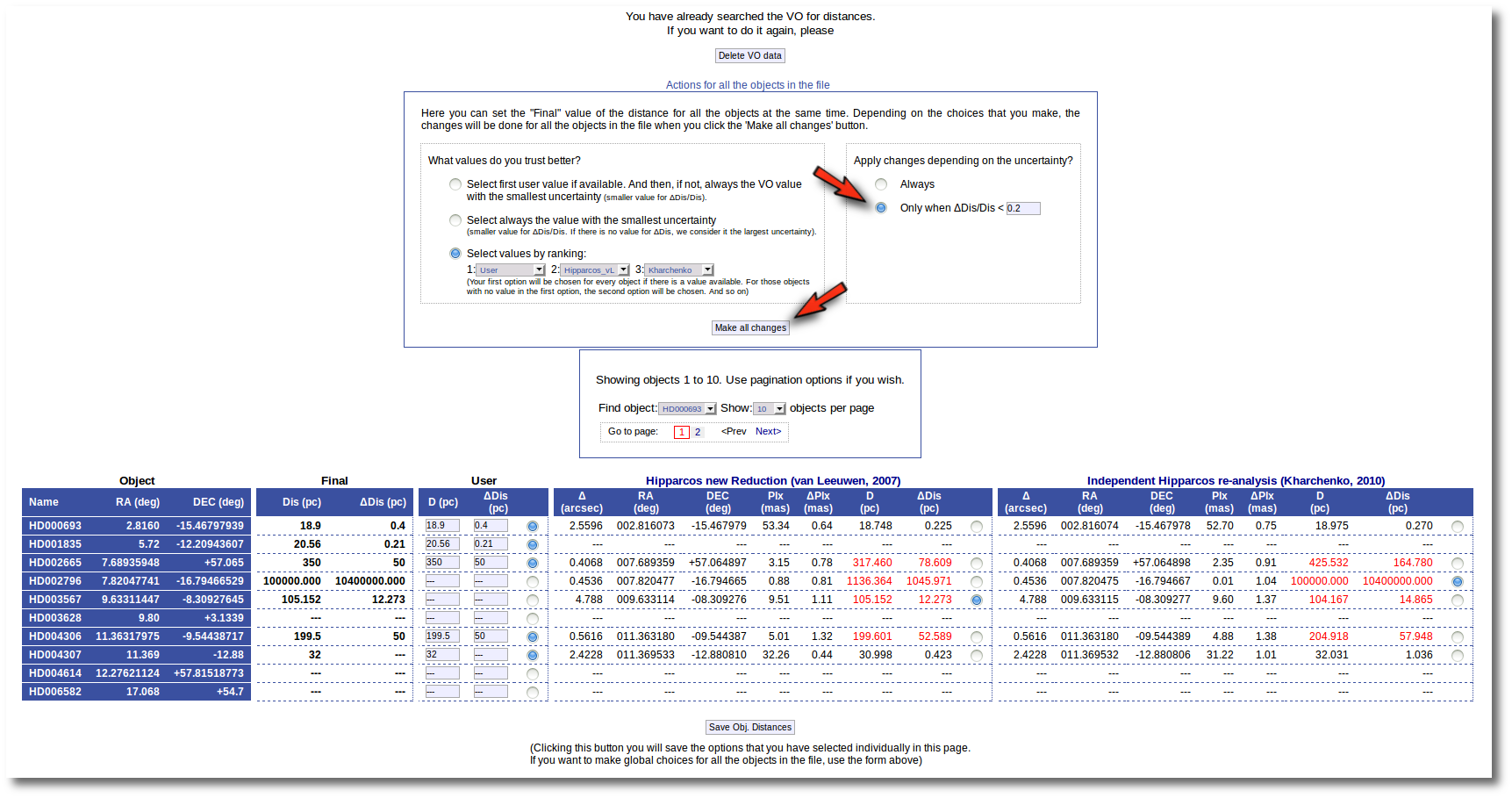

An example

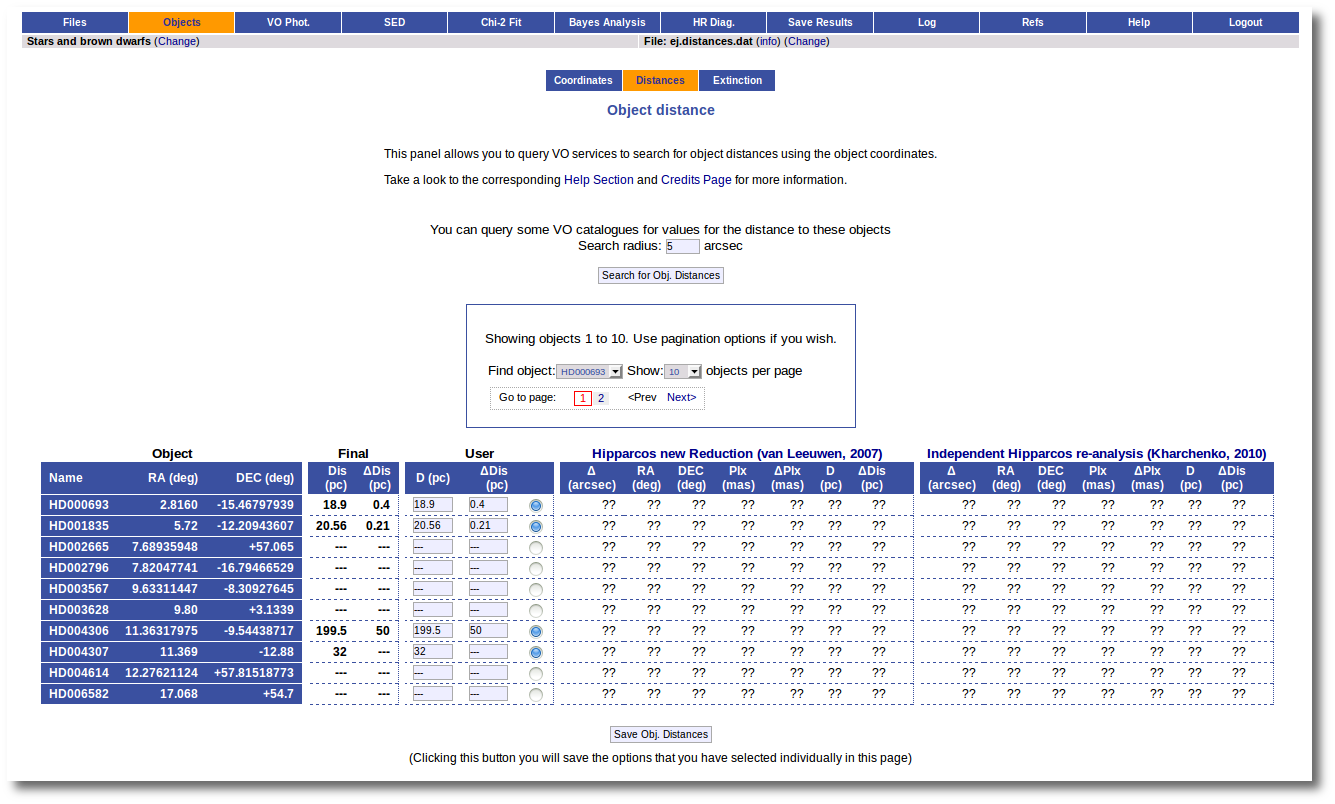

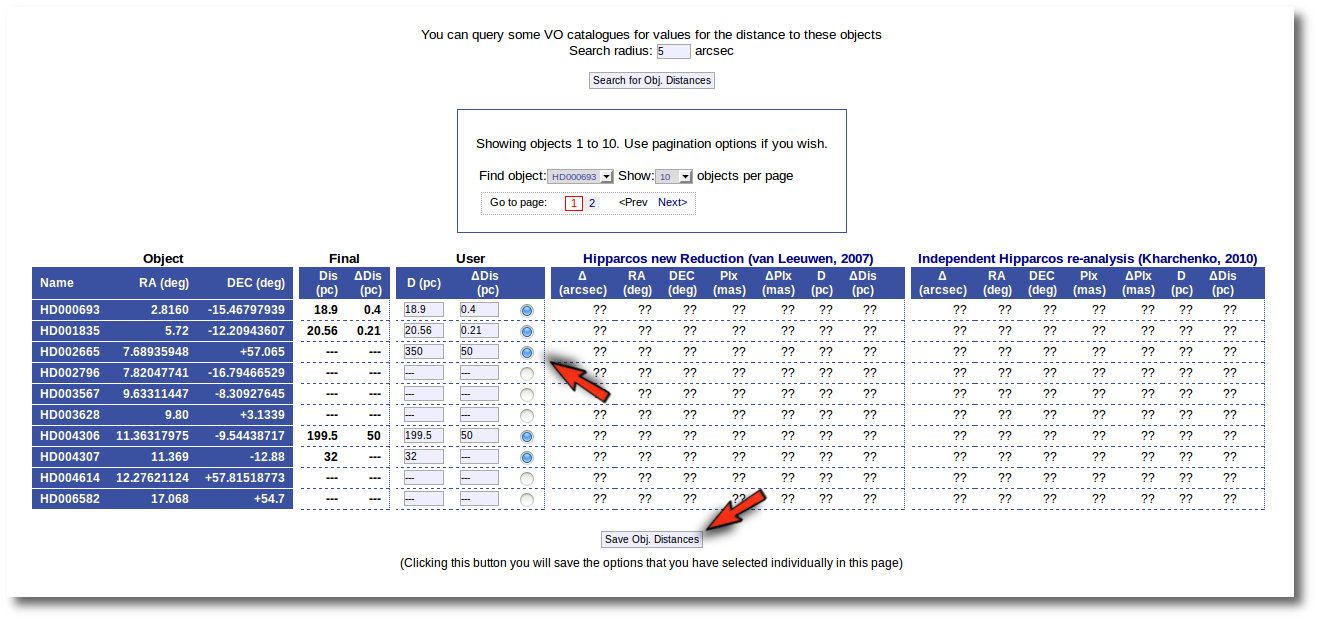

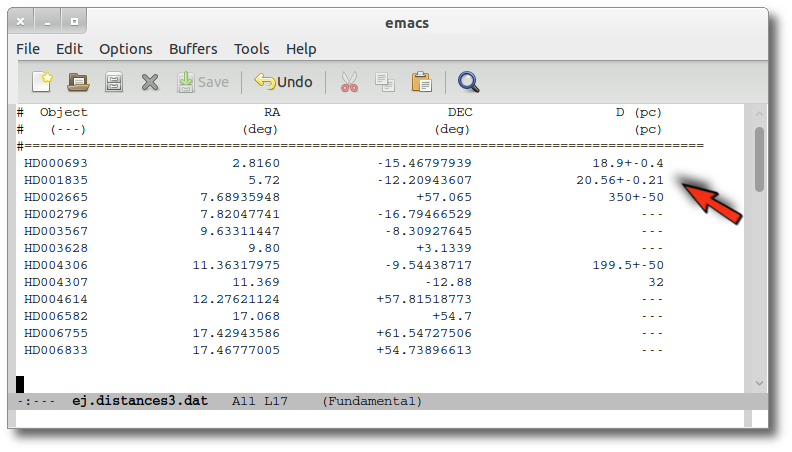

We have uploaded a file with information about the distance to some of the objects (in some cases we have included errors for the distance too). As you see we have values for distance and error for 4 objects, only the distance for HD004307 and no information about 7 objects.

We want to check the VO to search for more information, so we enter the Objects:Distances subtab to try to find something.

At this stage, we see three main functionalities:

- First, the possibility to search the VO.

- A pagination form (when there are more objects in the file than the number that you choose to visualize in each page).

- A form with the values of the distance for each object.

In this last form there are several groups of columns:

- Object information (object name, RA and DEC)

- The Final values. These are the values for the distance that will be used by VOSA outside this tab. In principle, the values here will be those values provided by you in your input file. But now you can change them.

- The User values. In principle, the values here will be those values provided by you in your input file. But now you can change them. And you can decide to used those new values as the final ones (or not).

- Information about the distance values found in VO catalogs for each object. At first this information is "unknown" because you haven't done a VO search yet.

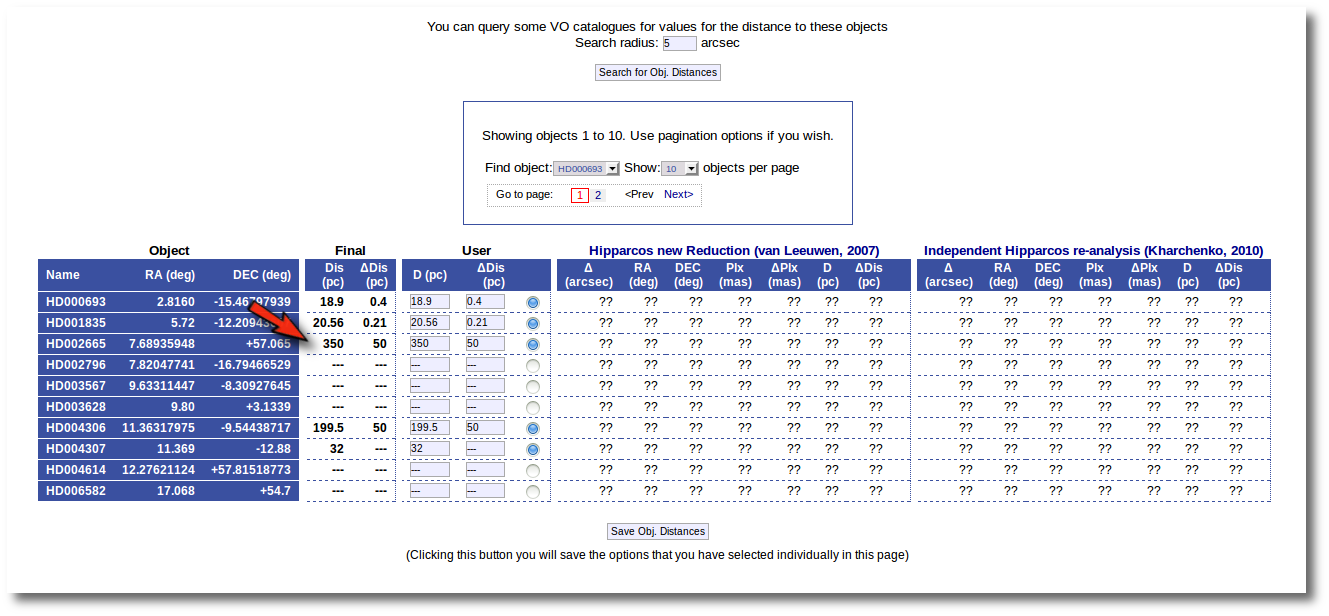

The first thing that you can do is editing the User values as you wish. For instance, you can give a value of 350±50 pc for the HD002665. You just need to write those values in the User column, mark the "tick" to its right and click the "Save Obj. Distances".

And you see that the final value for this object has been changed accordingly. If you leave this tab now, whenever a distance value is needed, VOSA will use a distance of 350±50 pc for this object.

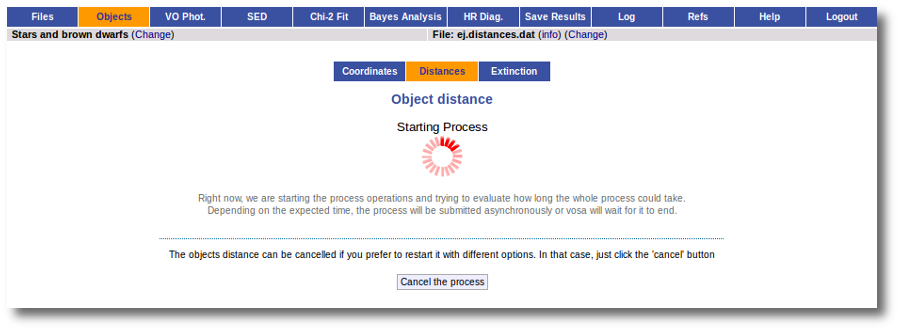

The next natural step is searching the VO for distance values. In order to to this you can just click the "Search for Obj. Distances".

When you click this button, VOSA starts the operation of querying VO catalogs.

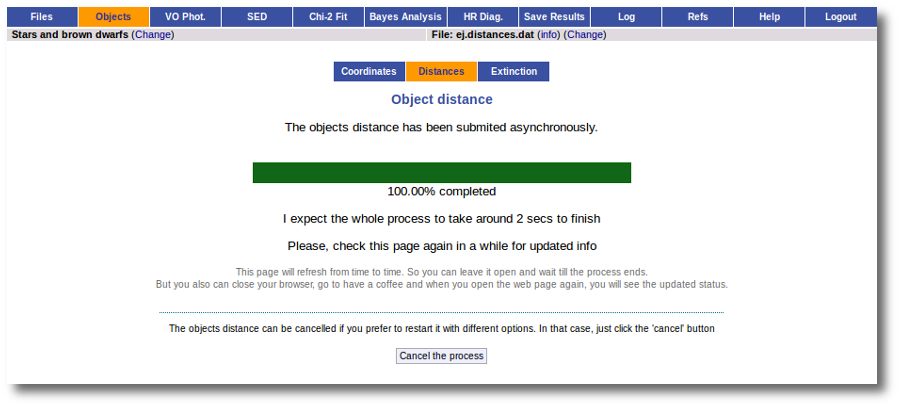

This search is performed asynchronously so that you don't need to stay in front of the computer waiting for the search results. You can close your browser and come back later. If the search is not finished, VOSA will give you some estimation of the status of the operation and the remaining time.

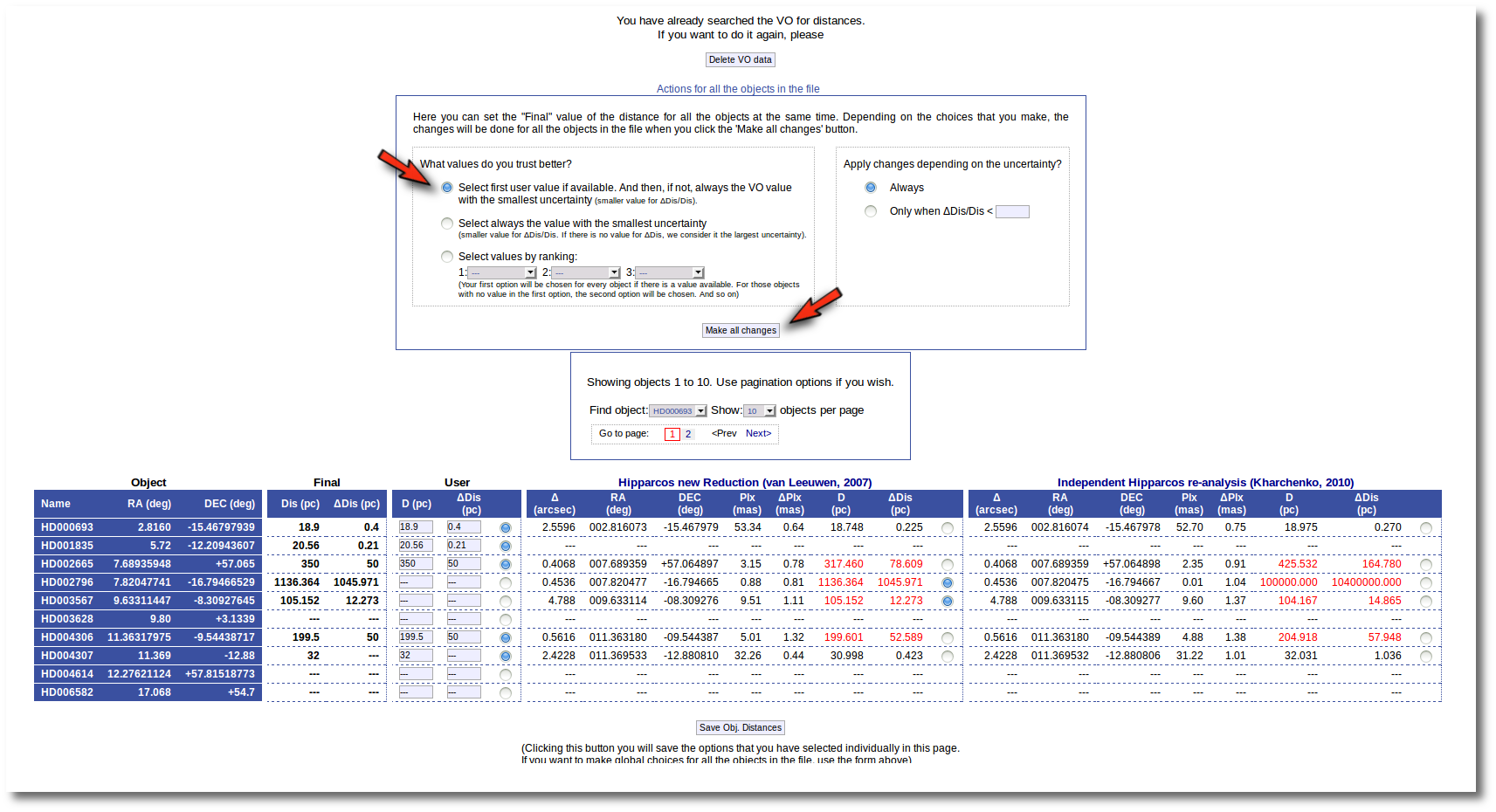

And, when everything is ready, you will see the values found in the VO catalogs for the distance to these objects.

Values with large relative errors are shown in red so that you are easily aware of large uncertainties.

At this point you still can choose to edit User values one by one and save them with the "Save Obj. Distances" button (as explained above). Or you also can decide to mark individually what value you prefer for each object among those available and click the "Save Obj. Distances" button to save those values as the final ones.

But you also can see a new form that offers you some options to choose the final values for all the objects in the file with just one click.

The form has two main parts:

- On the left, you have three different ways to prefer one catalog (or user values) over the other.

- Select first user value if available. And then, if not, always the VO value with the smallest uncertainty.

If you choose this, for those objects with user values, these will be chosen as the final ones. For those objects that don't have a user value for the distance, the one in VO catalogs with a smaller relative error will be saved as the final one. - Select always the value with the smallest uncertainty.

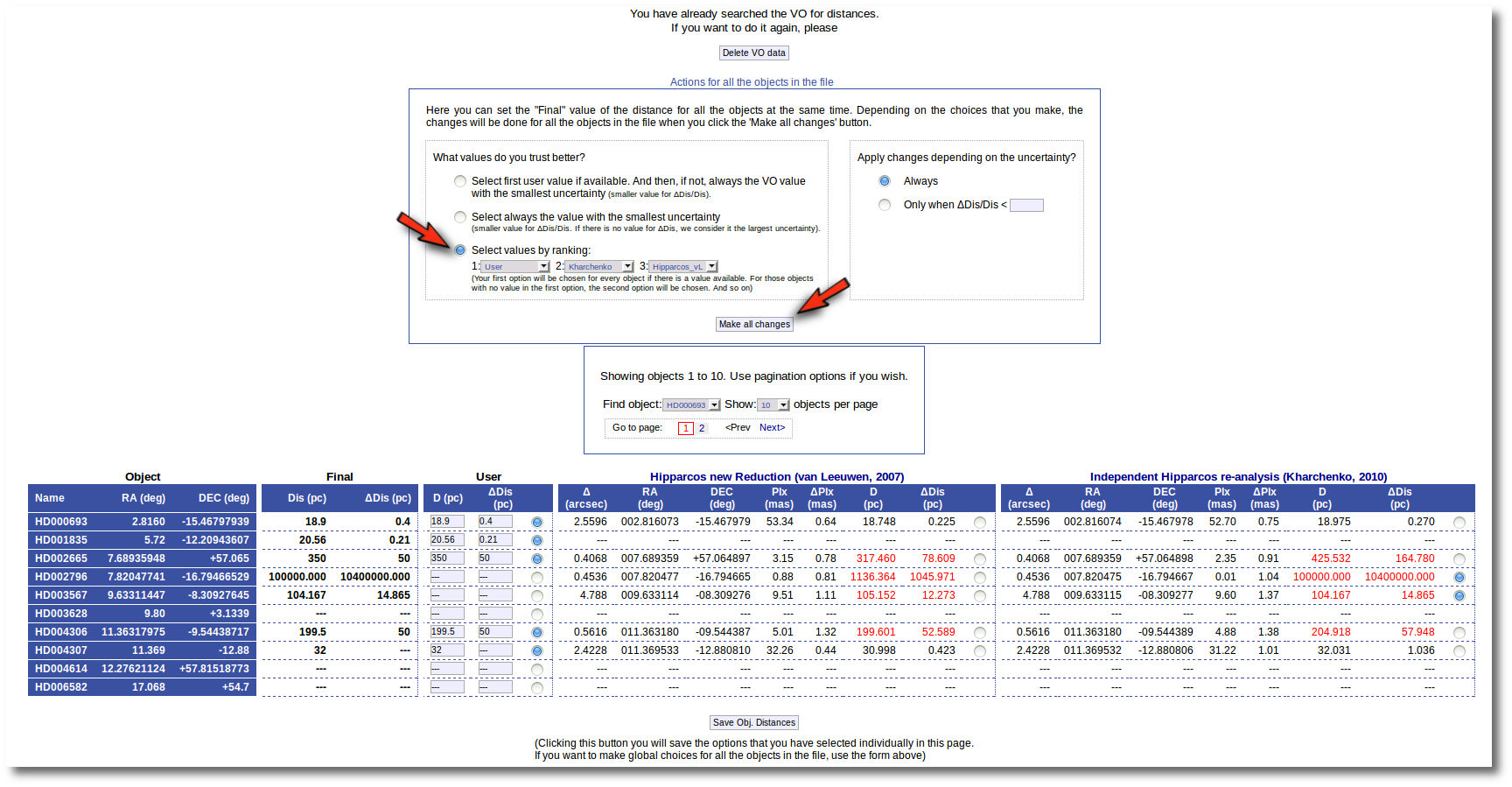

This means, quite obviously, that the value with a smaller relative error will be chosen. Take into account that if there is not a value for ΔDis, this will be considered as the largest uncertainty. - Select values by ranking.

Here you can specify the option that you prefer if there is a value available. If not, your second option will be used, and so on.

- Select first user value if available. And then, if not, always the VO value with the smallest uncertainty.

- On the right, you can decide if the above conditions are applied always or only when the relative error in the distance is below a certain limit. For instance, if you say that "Only when ΔDis/Dis < 0.5", if for one object, in one catalog, the relative error is larger, that will be considered as "there is not a value in this catalog".

When the "Make all changes" button is pressed, VOSA makes the selection adequate for your criteria and the corresponding values are saved as final.

For instance, if you mark the first option on the left, for those objects where is a value of the user distance, it will be the selected one, for the other objects, the van Leuween values are selected because they have smaller relative errors that the Kharcheko's ones.

Then, we select the third option on the left. And we set our preferences as: (1) user, (2) Kharchenko, (3) van Leuween. When we press the "Make all changes" button, Kharchenko's values for the distance is selected for HD002796 and HD003567, because there is not a user value for those objects.

Then, we change the order of preference to: (1) user, (2) van Leuween (3), Kharchenko. And we also set a limit ΔDis/Dis < 0.2 to make changes. In this case, for HD003567 there is not user value, then the van Leuween one is considered and ΔDis/Dis = 0.116 so it is selected and saved. But for HD002796 ΔDis/Dis = 0.92 in van Leuween and ΔDis/Dis = 10,4 in Kharchenko. So none of the values is selected and no change is made: the final value is kept as it previously was.

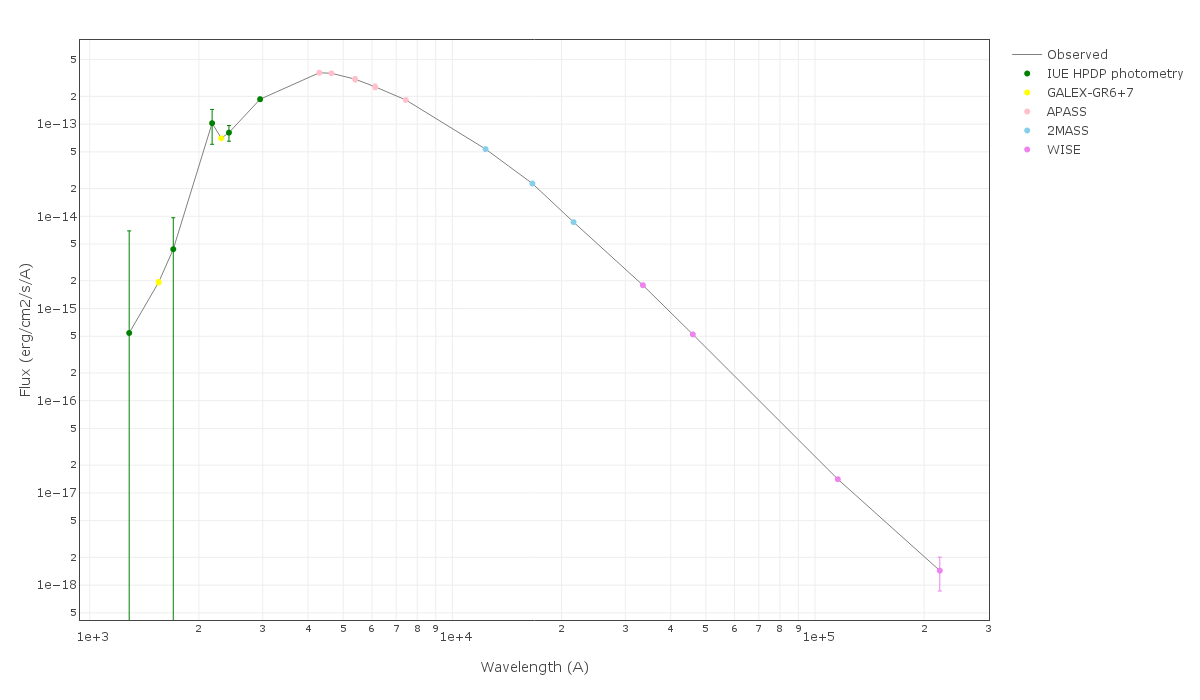

Extinction

The value of the interestellar extinction is necessary to deredden the observed photometry before analyzing it. If the extinction is not negligible, the shape of the real SED can be very different from the real one and any physical property estimated using the SED, if not properly unreddened, can be erroneous.

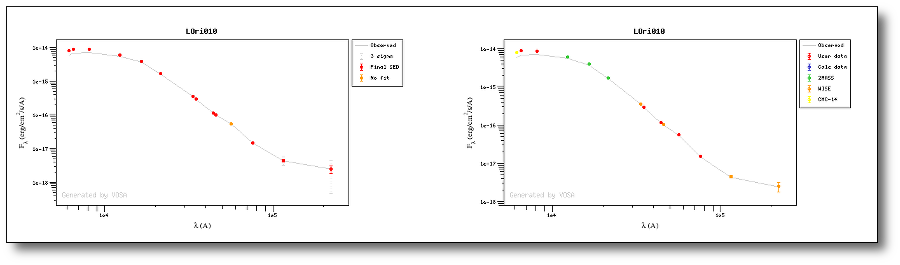

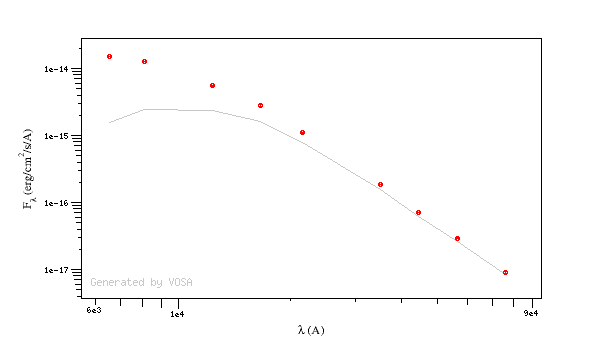

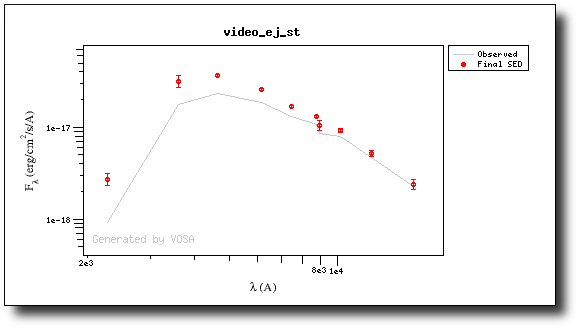

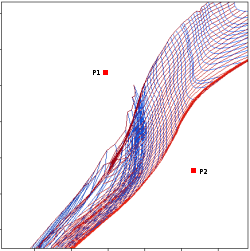

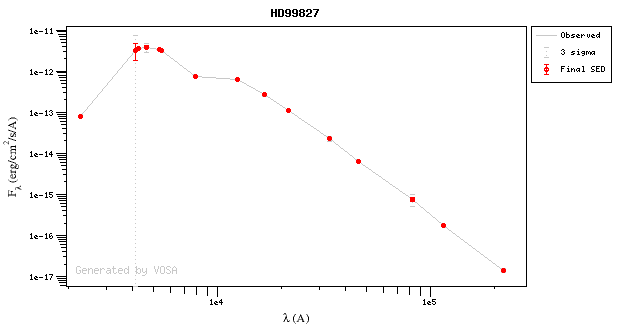

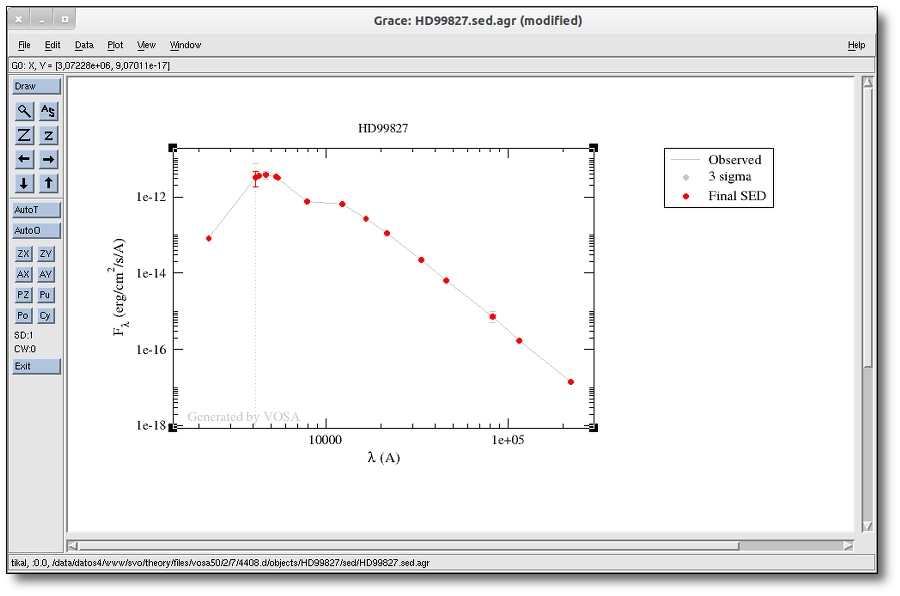

For instance, see the difference the observed SED (gray line) and the dereddened one (red points) for an object with Av=3.

You can provide a value for the visual extinction Av for each object in your Input file. But, if you don't have those values, VOSA also offers the posibility to search VO catalogs for extinction properties.

And, finally, you can also give a range of values for Av so that the model fits (chi2 and bayes) fits together the model physical parameters and the value for Av.

The extinction law.

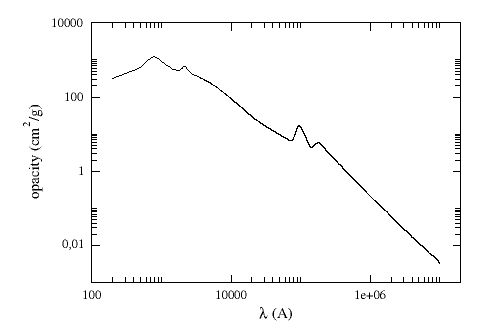

For dereddening the SEDs we make use of the extinction law by Fitzpatrick (1999) improved by Indebetouw et al (2005) in the infrared. Take a look to the corresponding Credits Page for more information.

(You can download the tabulated data for the extinction law).

The extinction at each wavelength is calculated as: Aλ = AV * kλ/kV, where kλ is the opacity for a given λ and kV=211.4

VO extinction properties.

The tool offers the possibility of finding extinction properties of the objects in the user file.

In order to do this, the object coordinates are used to query some VO services to find AV or RV and E(B-V) for each object.

Then you can choose to incorporate the found values (if any) into the final data or not. In fact, if it happens that diffferent catalogues give different information about the relevant quantities, you can choose which data to use to build the final AV value.

Remember that, if you decide to save new values for AV, the original data will have to be deredden again using the new values. This will change the final SED and, thus, if any other analysis has been done for the corresponding SED (for instance, a model fit) this analysis will have to be done again.

The first time that you enter this section for a given input file, the tool shows the AV values given in the input file (if any) and a button to search into VO services. When a search has been done, the tool will show the user values together with the found values for each relevant quantity so that you can choose which ones should be used (checking the corresponding box).

In fact, this form has several options that can be combined. Take into account that

- Some VO catalogues provide AV directly, but other catalogues give information about RV or E(B-V). Using RV and E(B-V), AV can be calculated as AV = RV * E(B-V).

- VOSA can combine the data given by you in your input file and the information found in VO catalogues.

- You can write default values for AV, RV, E(B-V) or the AV range to be used in the fits. If you do so, click the 'Add user values' button to fill the corresponding User columns with those values (only if there is not a previously saved value).

- Click the 'Search for extinction properties' button for seaching several VO catalogues.

- For each object, if you want to save some information, check the corresponding checkbox. If you mark values for RV and E(B-V) they will be used to calculate AV. If you want to do so, mark the ticks with the values that you want to save, and click the 'Save extinction properties button' and the AV values will be saved and shown in the 'Final' column (if there was enough information).

- When you have a large list of objects, it is difficult to do this object by object. You can also give some general criteria about what catalog information you trust better, and let VOSA try to build Av values for all the objects in the file using the available information. See below for a detailed example on how to use the different forms.

Take into account that the tool queries VO services using the object coordinates and returns the closer object to those coordinates for each catalogue in a given search radius. It could happen that the obtained information corresponds to a different object if the desired one is not in the catalogue. In that case, the obtained data could be erroneus, as it corresponds to a different object. So, please, check the coordinates given by the catalogue for each object to see if they seem to be the appropiate ones (within the catalogue precision) before using the obtained values.

Take a look to the corresponding Credits Page for more information about the VO catalogues used by VOSA.

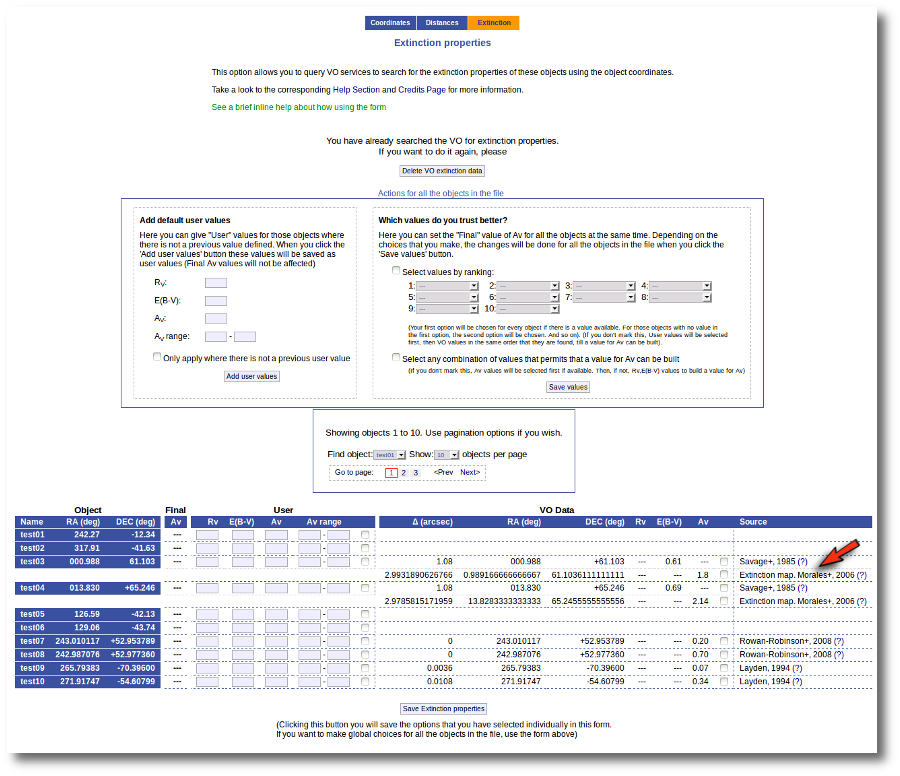

An example

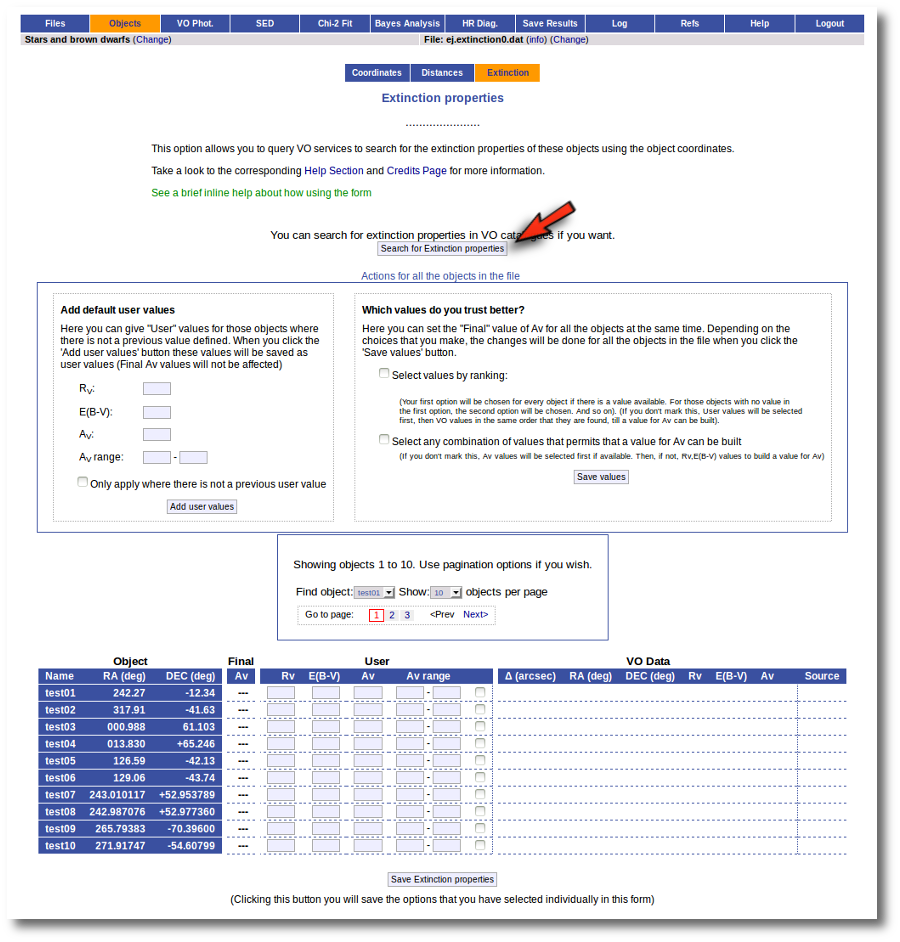

We have uploaded a file with some objects and their coordinates, but we don't have information about the extinction for each object.

Thus, when we enter the "Objects:Extinction" tab in VOSA we see the list of objects and no extinction properties. We also see some forms:

- A button to seach the VO for extinction properties.

- A box, on the left, where we can add default values for AV, RV, E(B-V) or the AV range to be used later in the fits.

- A box, on the right, where we can give some criteria about our preferences among catalogs (this is of no use yet because we still don't have any information).

- A pagination form to see all the objects page by page.

- A "Save extinction properties" button that would be useful to save particular values for only the objects that we can see in the page.

We will see all these options with some detail below.

But, given that we don't have any information, our first step is searching for these objects in VO catalogs. And, thus, we click the "Search for extinction properties" button.

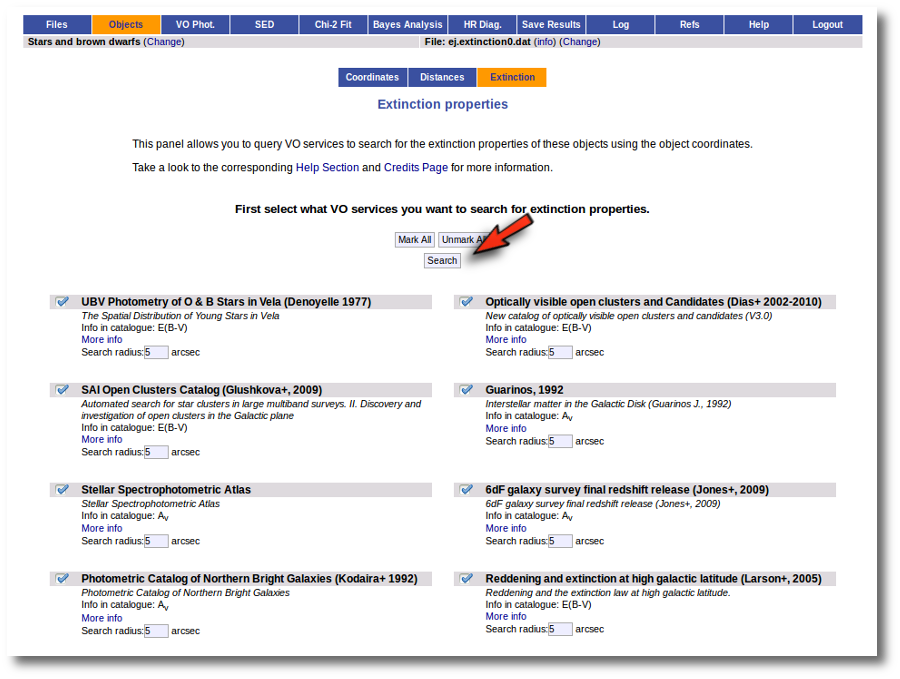

We get a list of all the catalogs that VOSA can use to search for VO properties. You can leave it as it is and just click the "Search" button. But you also could unmark some of them if you know, for some reason, that they are not going to be useful. You also can change the default Search Radius for some catalogs if you are aware that a differrent radius is more adequeate for your case.

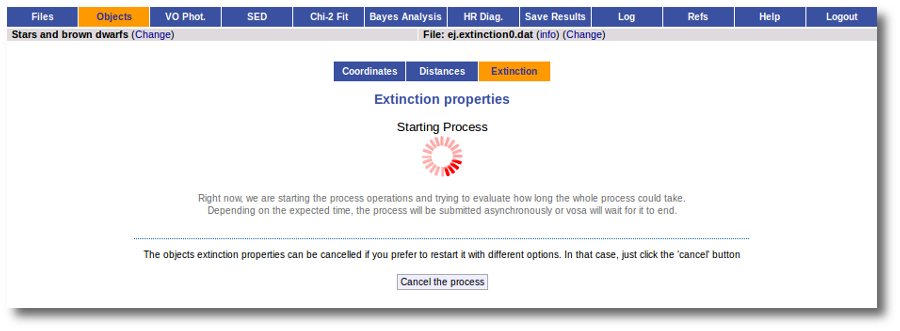

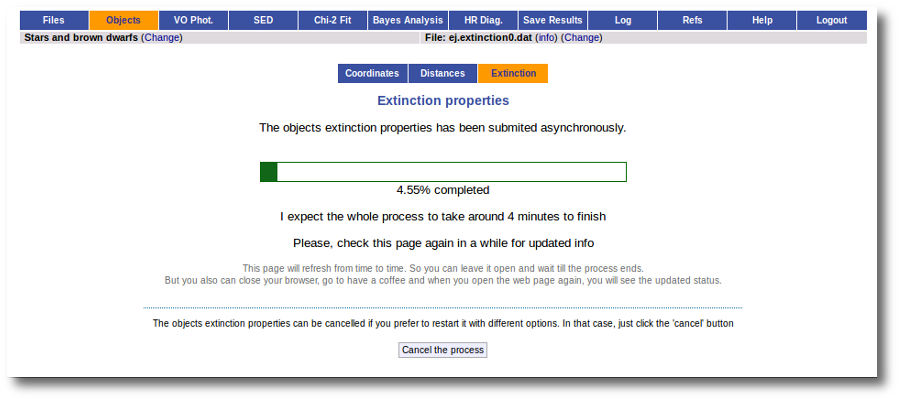

We just click "Search". When we click this button, VOSA starts the operation of querying VO catalogs.

This search is performed asynchronously so that you don't need to stay in front of the computer waiting for the search results. You can close your browser and come back later. If the search is not finished, VOSA will give you some estimation of the status of the operation and the remaining time.

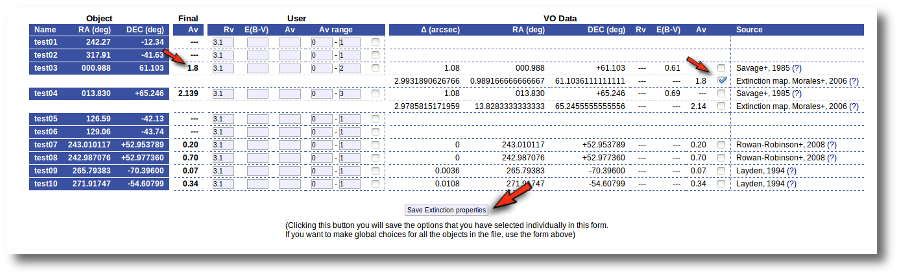

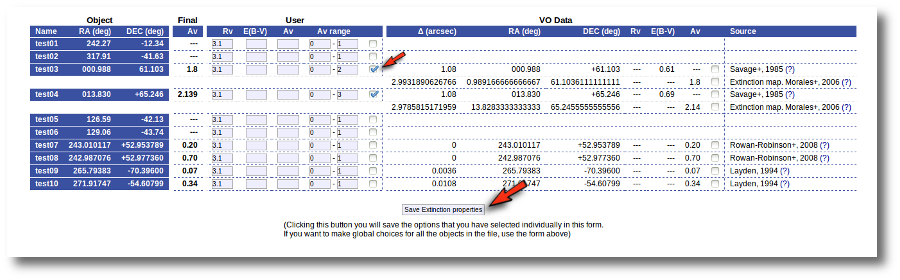

When the search is finished, VOSA shows you, on the right, all the information that has been found for each object. In some cases, we don't get any information at all (for instance, for objects 'test01' and 'test02'). In other cases we only get information from one catalog. But in some cases (for instance, objects 'test03' and 'test04') we get heterogeneus information from more than one catalog.

It happens very often that catalogs give values for E(B-V) but not for Av (like the Savage one in this example) and we need a value of RV to calculate AV using the expression AV=RV * E(B-V).

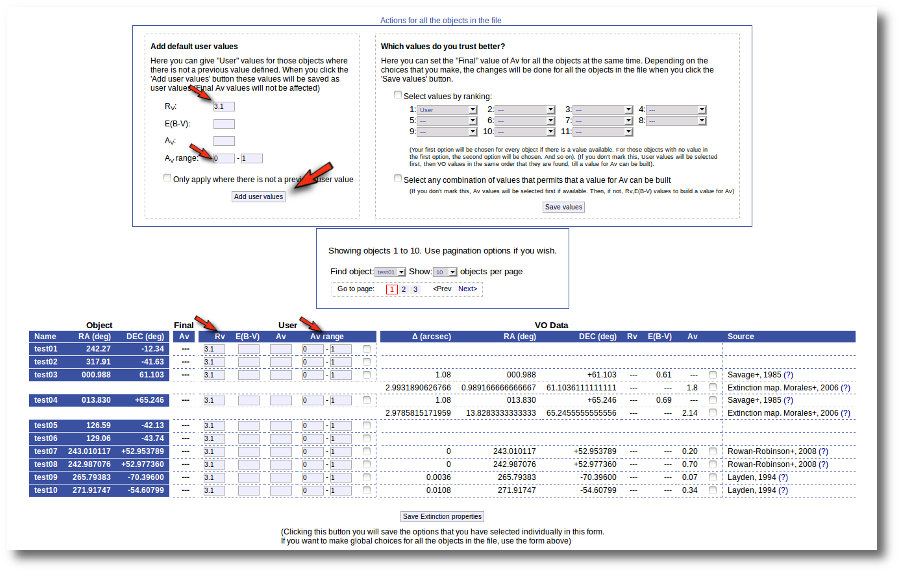

Thus, our first action is going to be adding 'Default user values' for some quantities. We write a value RV=3.1 in the "Default User Values" form and also a default fit range of (0-1) for Av. Then we click in the "Add user values" button (we could write the RV in the "User" column, object by object, but it's easier to do it this way).

Now we have values for RV so that VOSA can use them if they are necessary to build a AV value for some object.

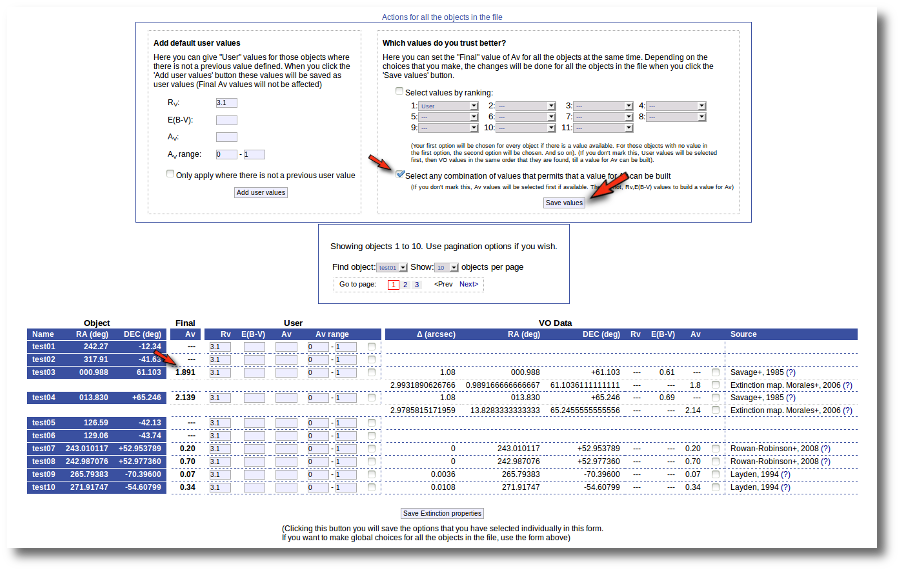

Next, we use the form on the right to let VOSA try to build values for Av for all the objects. We mark the tick correspoding to "Select any combination of values that permits that a value for Av can be built" and click the "Save values" button.

As you can see:

- For objects 'test01' and 'test02' nothing can be done, because there isn't enough information available, and nothing has been changed.

- For objects 'test07' to 'test10' it has been easy. There is only information from one catalog for each object. And in every case the catalg gives a value for Av. Thus, this is set as the final value of Av for those objects.

- For objects 'test03' and 'test04' there are two different options that can be used to build a value for Av. VOSA always chooses the first combination of values that allows for calculating Av. Thus:

- For 'test03', VOSA first tries E(B-V)=0.61 from Savage and, given that we have a default value Rv=3.1, a value Av=3.1*0.61=1.891 is calculated and saved.

- For 'test04', VOSA first tries E(B-V)=0.69 from Savage and, given that we have a default value Rv=3.1, a value Av=3.1*0.69=2.139 is calculated and saved.

But we decide that we prefer Av=1.8 (from Morales) for the object 'test03' instead of the 1.891 value calculated before. And we want to make that particular change only.

Thus, we go to the list and:

- We mark the tick corresponding to the Morales catalog for 'test03' so that it is the value saved as final.

- We then click the 'Save Extinction properties' button.

and the 1.8 value is set as the final one for 'test03'.

But then we notice that, given that for objects 'test03' and 'test04' we have Av values 1.8 and 2.139 it does not make sense that, later, when performing model fits, we try an Av range between 0 and 1. We set that default range before, when we didn't have any information, but now we should change that range, at least, for these two objects.

Thus, we go to the list and make these changes one by one.

- We set Av range = 0-2 for 'test03' and mark the tick on its right.

- We set Av range = 0-3 for 'test04' and mark the tick on its right.

- We then click the 'Save Extinction properties' button.

And the Av fit ranges are changed only for these two objects.

Build SEDs

VOSA helps you to build and/or improve the observed Spectral Energy Distribution (SED) for the objects in your file in different ways.

First, you can upload your own photometry into VOSA for each object including it in your input file.

If you include your data as magnitudes or Jy, VOSA will transform them into erg/cm2/s/A using the information for each filter provided by the SVO Filter Profile Service.

You can search in VO catalogs to find more photometry for your objects and those new points (if any) will be included in your objects SED. Again, if the catalogs provide data as magnitudes or Jy, VOSA will transform them into erg/cm2/s/A using the information for each filter provided by the SVO Filter Profile Service.

In the case that, for an object, there are several photometry values corresponding to the same filter but coming from different sources (user and VO, different VO catalogs, same source at different epochs...) VOSA will average them and include the average value in the final SED.

Every observed SED will be dereddened using the value for Av provided by you in your input file or in the "Objects:Extinction" tab (with the option of searching VO catalogs for extinction properties).

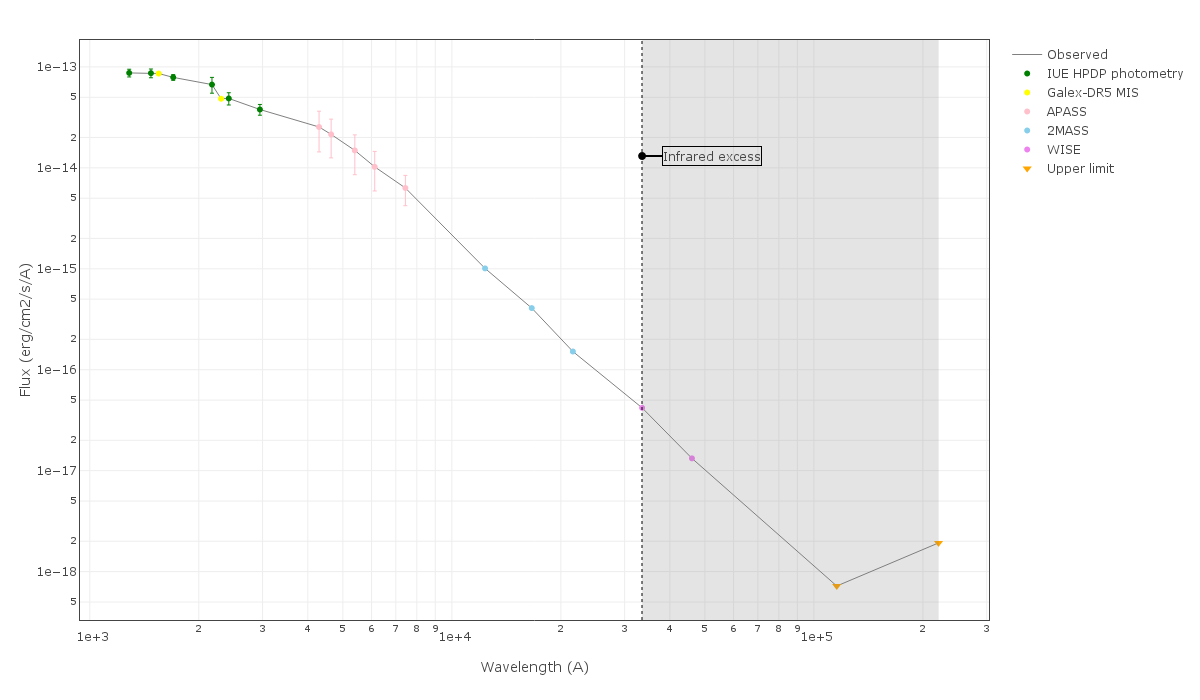

For each object, VOSA will try to detect the presence of infrared excess using an automatic algorithm.

Then you have the option to inspect (and optionally edit) the final SED object by object.

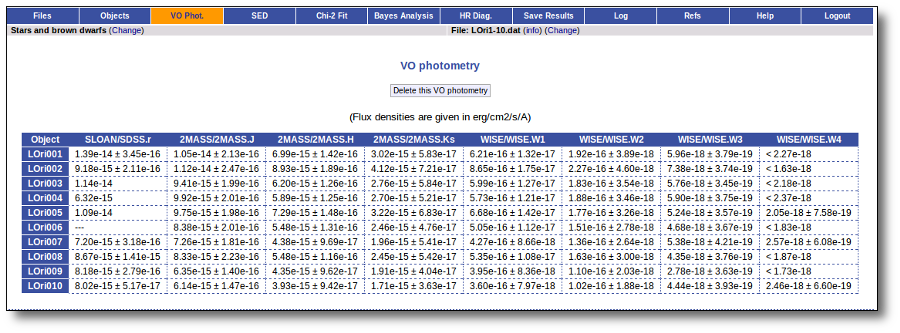

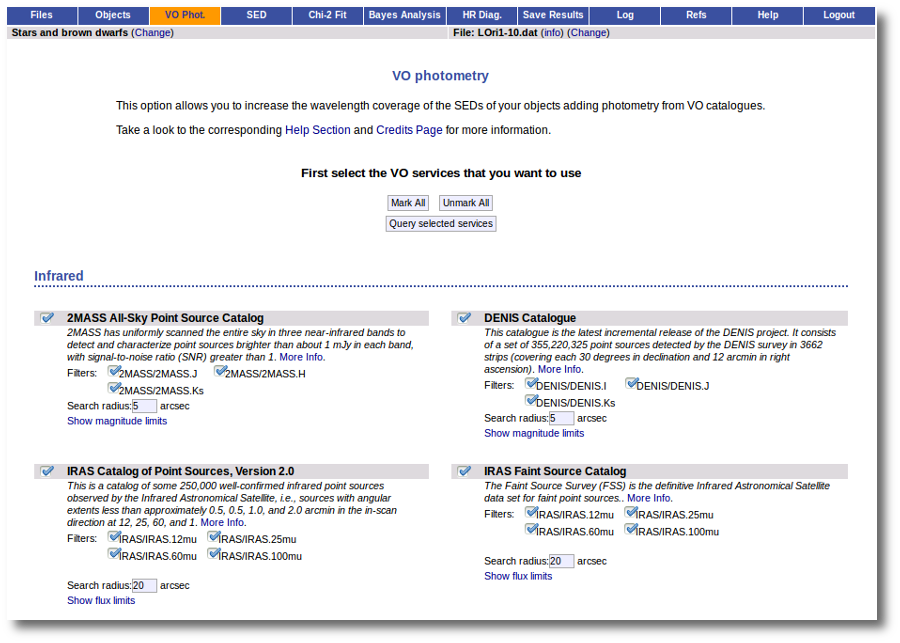

VO photometry

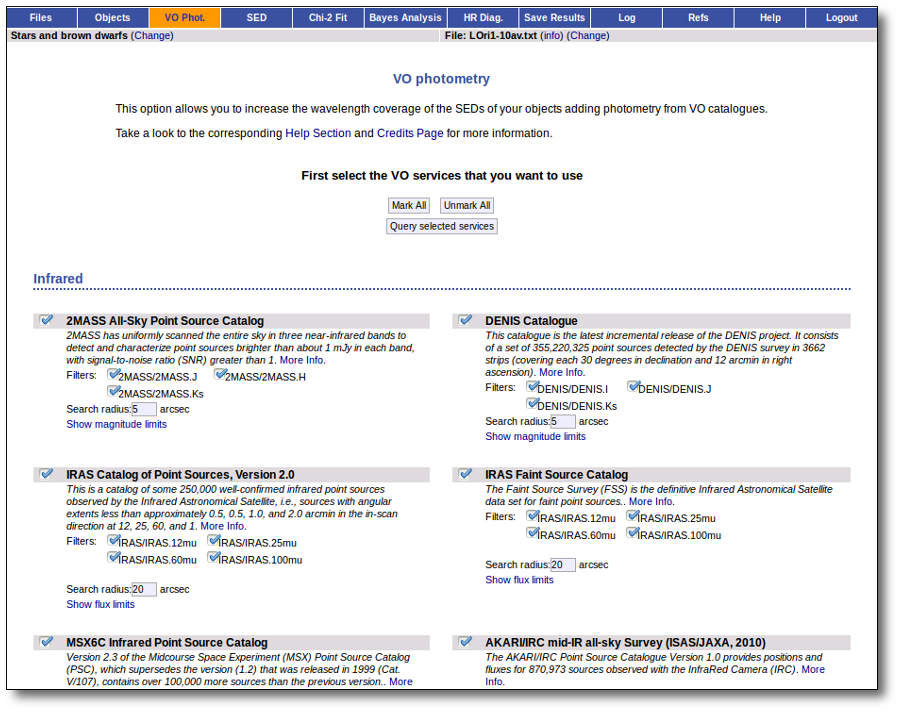

Search for photometry in VO catalogues.

The tool offers the possibility of searching in the VO for catalog photometry for the objects in the user file.

In order to do that, the object coordinates must be known as precisely as possible. Either the user can provide these coordinates in the input file or they can be obtained also from the VO.

VOSA offers access to several catalogs with observed photometry from the infrared to the ultraviolet.

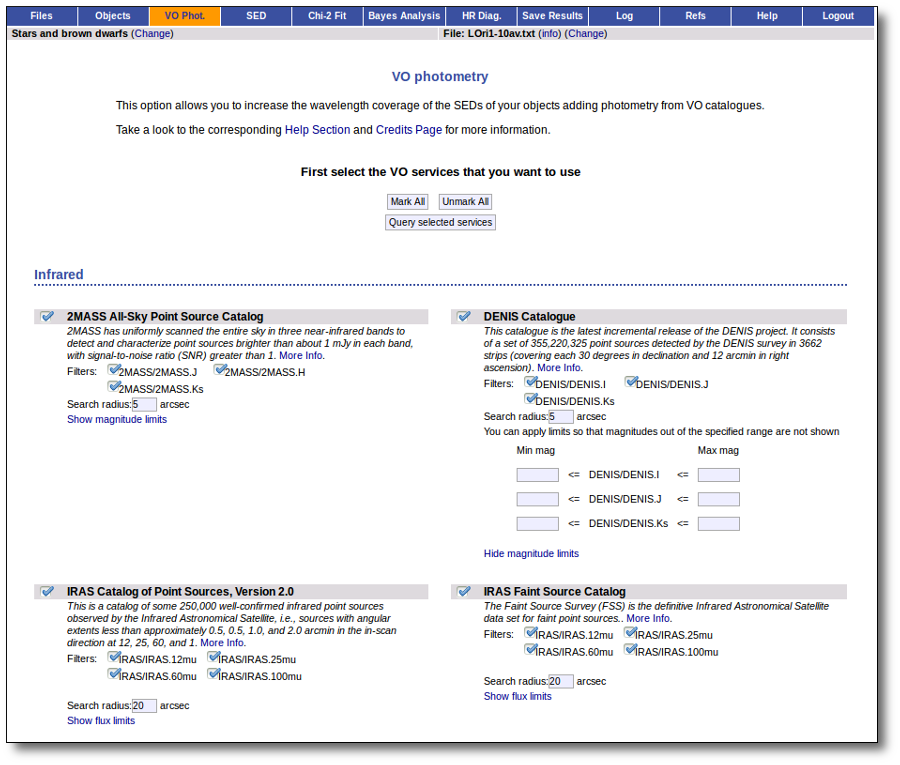

You can choose which catalogs to use and the search radius within each one.

For each catalog, you have the option to establish magnitude limits, so that only photometry values in that range will be retrieved.

For each object in the user file, each catalog will be queried specifying the given radius, and the best result (the one closer to the object coordinates) will be retrieved. For some catalogs there are special restrictions. For instance, for the UKIDSS surveys, the search is restricted to class -1 (star) or -2 (probable star) objects. These special restrictions, when applied, are explicitly commented in the brief catalog description in the VOSA form.

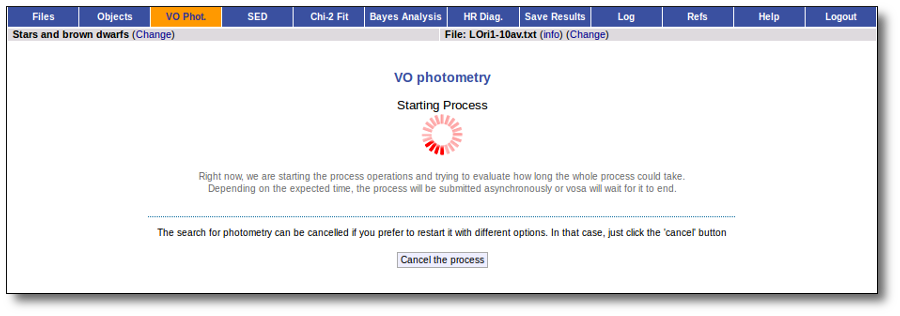

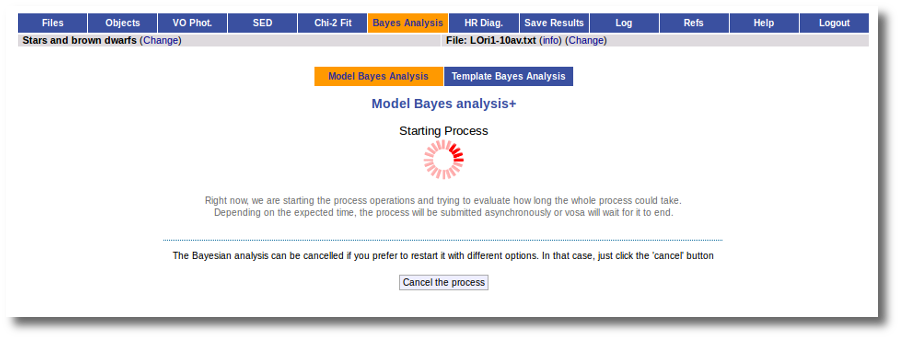

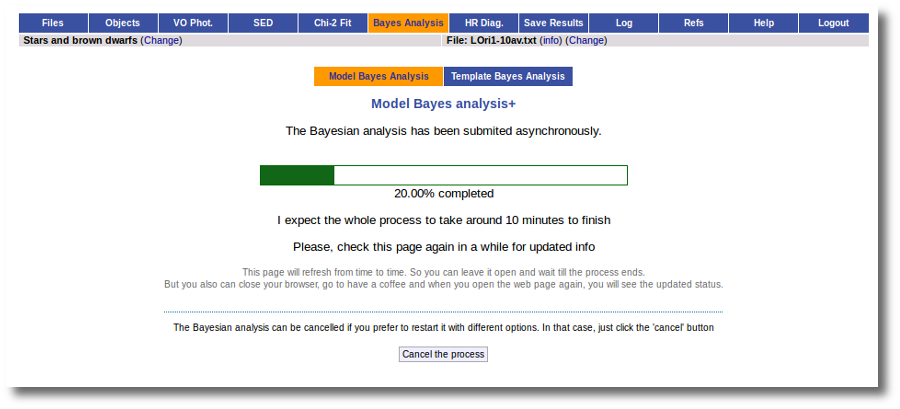

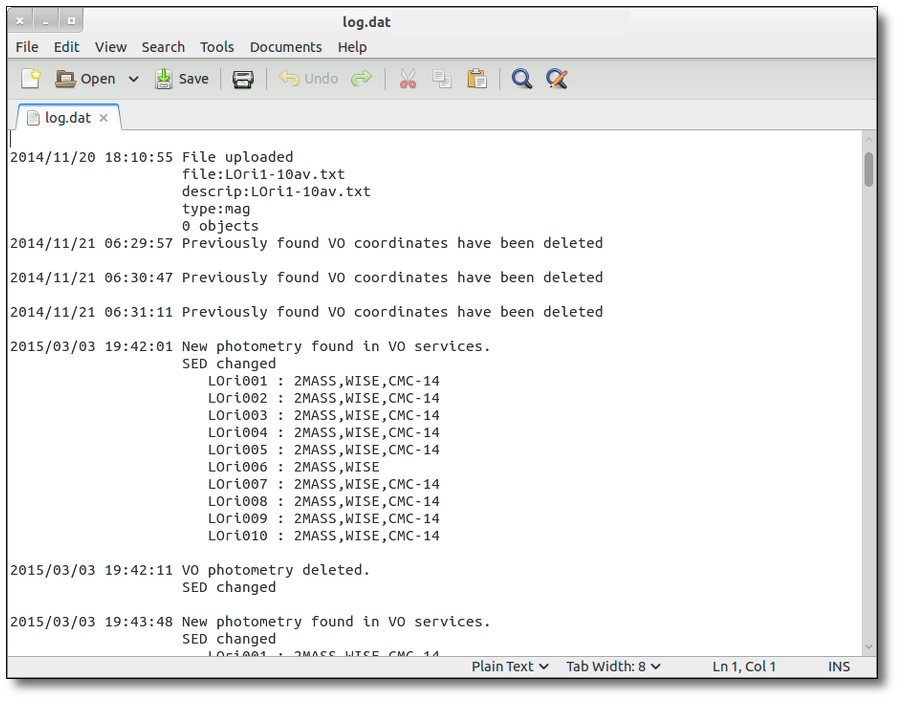

When you click the "Search" button, VOSA starts the operation of querying VO catalogs.

This search is performed asynchronously so that you don't need to stay in front of the computer waiting for the search results. You can close your browser and come back later. If the search is not finished, VOSA will give you some estimation of the status of the operation and the remaining time.

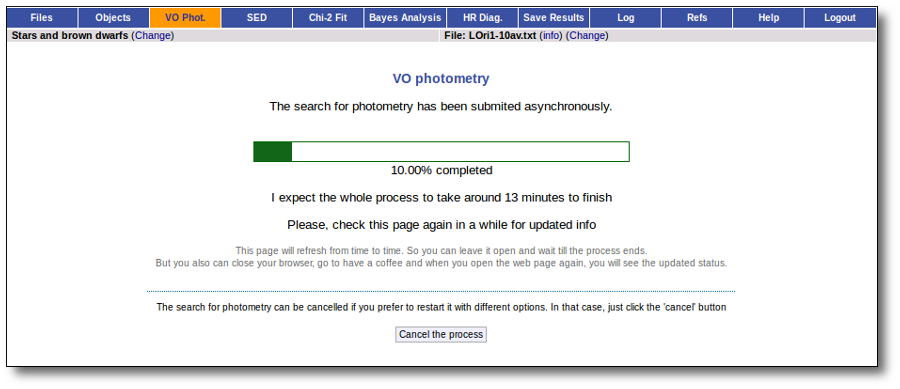

When the search process is finished you will see the photometric values obtained for each object (if any).

If the catalog provides magnitude values, these are automatically converted to fluxes.

Take a look to the Credits section for information about the available VO catalogs.

Detection of outliers in VO data

When new data are found in VO catalogues and before incorporating them to the object SED, VOSA tries to identify the presence of outliers, that is, photometric points that, for one or another reason, seem not to be part of the real SED.

In particular, VOSA looks for V patterns and inverted V patterns, that is:

V pattern

VOSA looks for points that seem to be clearly below the main SED, that is points so that both the previous and next points have much higher fluxes. To be more precise, if all these criteria are met:

- Fn-1/Fn > 5

- Fn+1/Fn > 5

- λn-λn-1 < 2500A

- λn+1-λn < 2500A

Although in the infrared, when 10000> ≤ λn-1 ≤ λn < 26000A, the criteria above is changed to: - λn-λn-1 < 6000A

- λn+1-λn+1 < 6000A

the point (λn,Fn) is considered suspicious and thus is marked as 'bad'. A 'lowflux' flag will also be included in the vosa and SED files if they are downloaded later.

Take into account that to make these calculations only the points (both from VO catalogues or User data) that are not flagged as 'bad' or 'upper limit' will be considered.

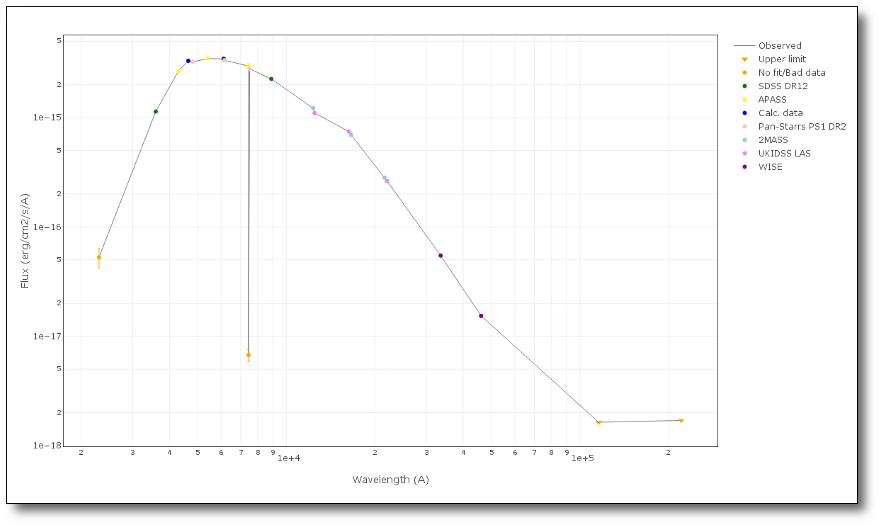

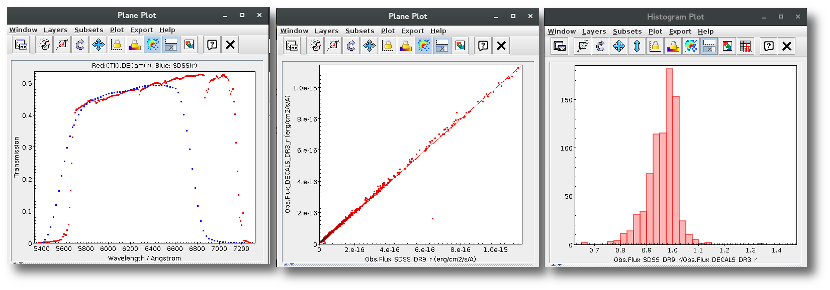

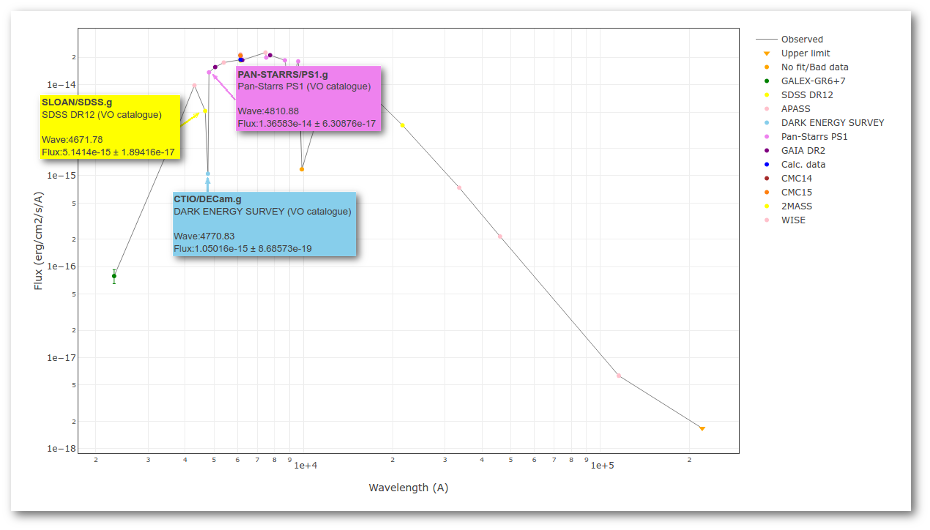

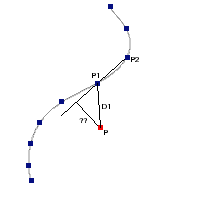

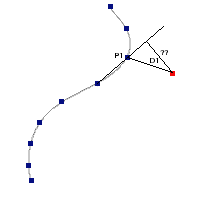

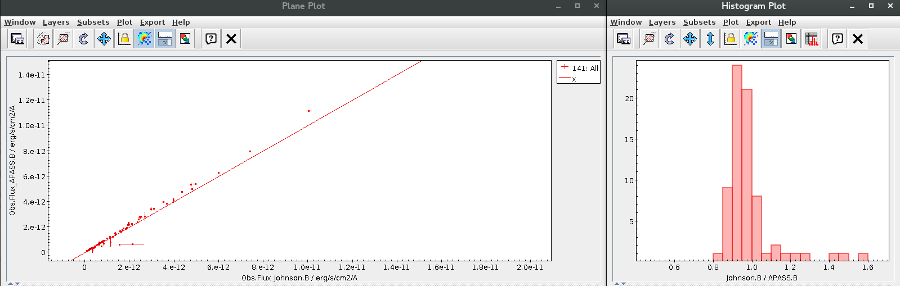

A simple example can be seen in this image:

We can see a first suspicious point for CTIO/DECam.g:

But VOSA will not flag it as bad because it does not meet the criteria

- Fn-1/Fn = 5.141e-15/1.050e-15 = 4.89 < 5

- Fn+1/Fn = 1.366e-14/1.050e-15 = 13.00 > 5

- λn-λn-1 = 4770.83 - 4671.78 = 99.05 < 2500A

- λn+1-λn = 4810.88 - 4770.83 = 40.05 < 2500A

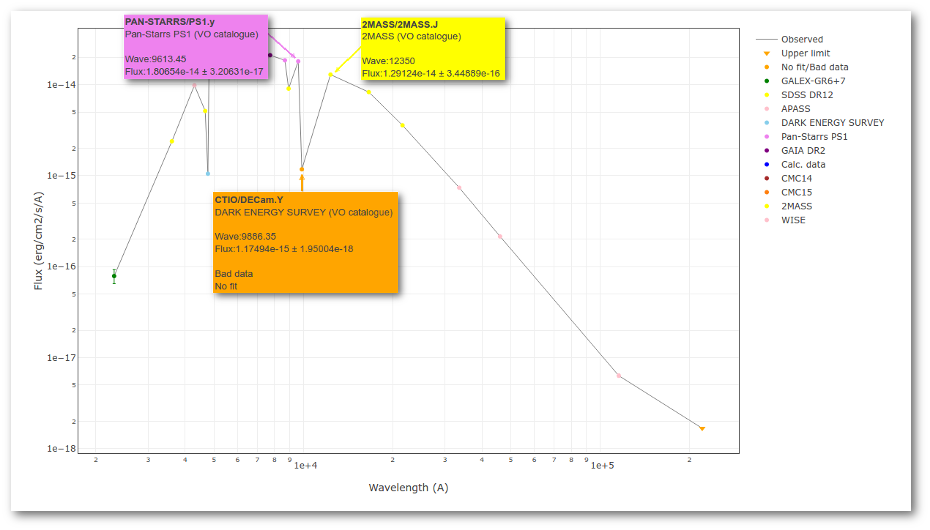

But the point for CTIO/DECam.Y will be marked as bad:

- Fn-1/Fn = 1.807e-14/1.175e-15 = 15.37 > 5

- Fn+1/Fn = 1.291e-14/1.175e-15 = 10.98 > 5

- λn-λn-1 = 9886.35 - 9613.45 = 272.9 < 2500A

- λn+1-λn = 12350 - 9886.35 = 2463.65 < 2500A

because all the criteria are met.

Inverse V pattern

VOSA looks for points that seem to be clearly above the main SED, that is points so that both the previous and next points have much lower fluxes. To be more precise, if all these criteria are met:

- Fn/Fn-1 > 5

- Fn/Fn+1 > 5

- λn-λn-1 < 2500A

- λn+1-λn < 2500A

the point (λn,Fn) is considered suspicious and thus is marked as 'bad'. A 'highflux' flag will also be included in the vosa and SED files if they are downloaded later.

Take into account that to make these calculations only the points (both from VO catalogues or User data) that are not flagged as 'bad' or 'upper limit' will be considered.

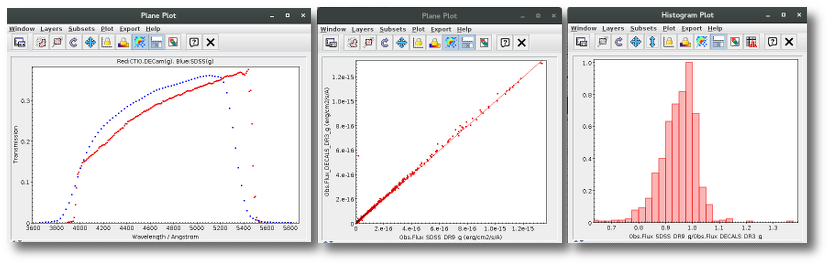

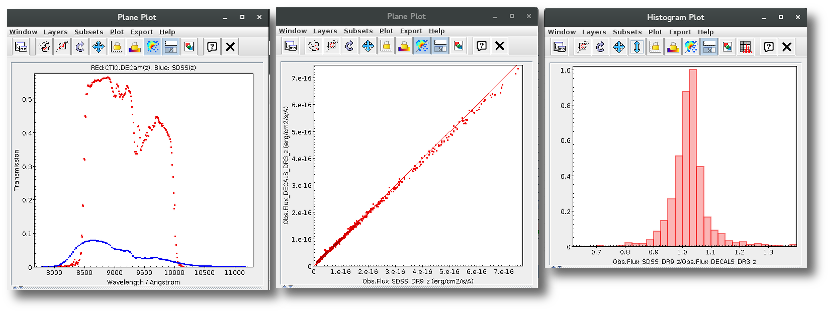

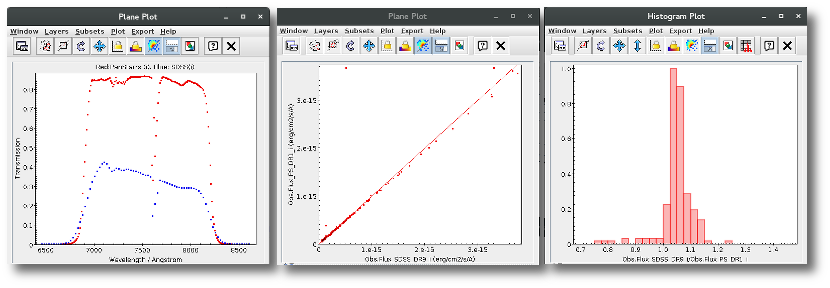

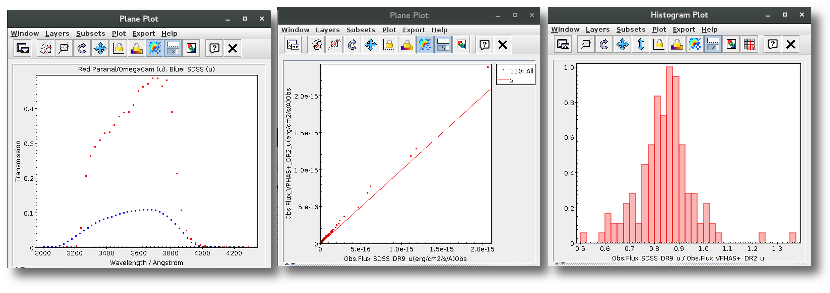

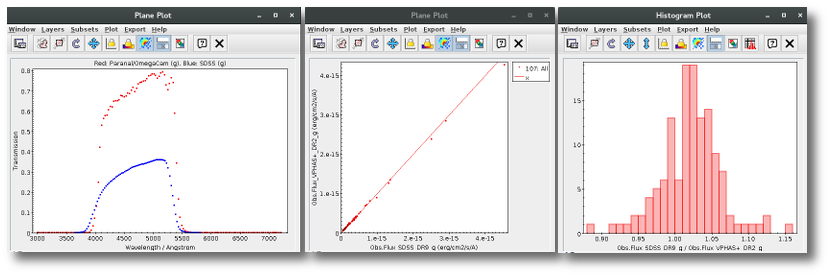

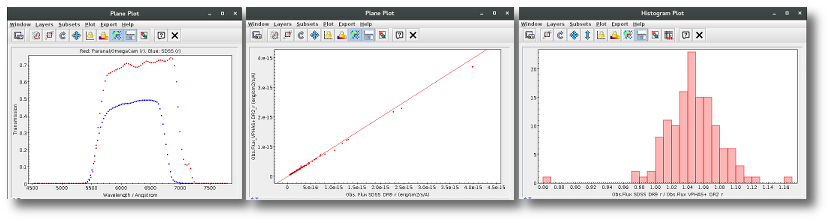

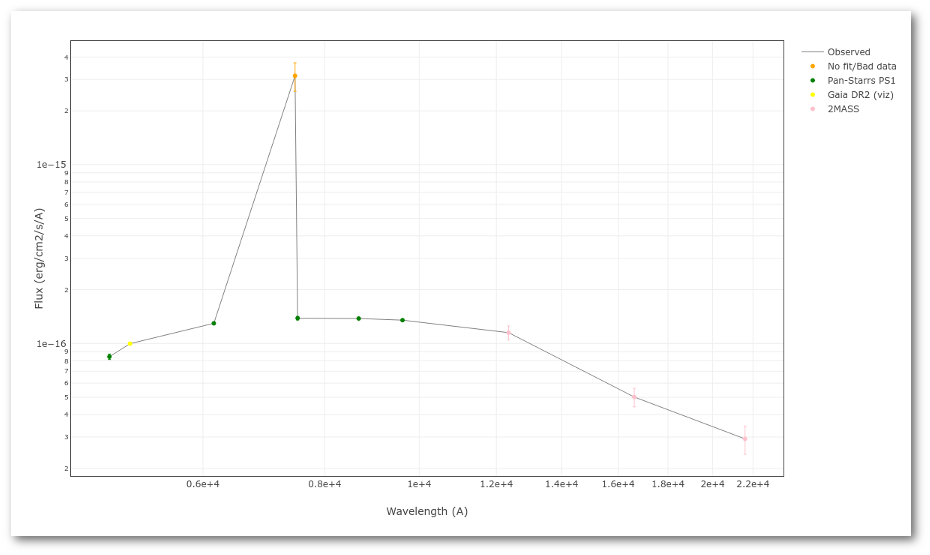

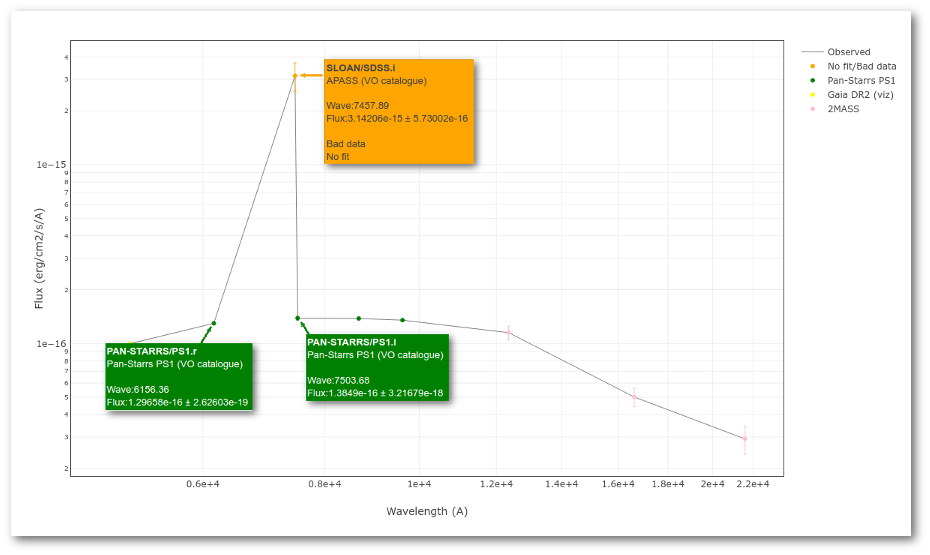

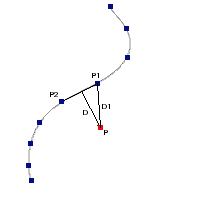

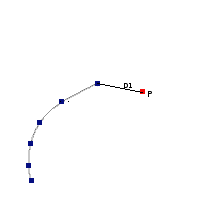

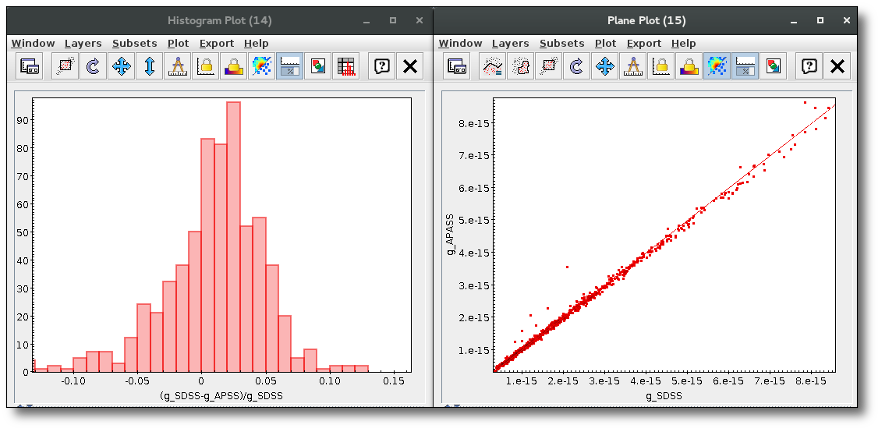

A simple example can be seen in this image:

The point for will be marked as bad:

- Fn/Fn-1 = 3.142e-15/1.297e-16 = 24.22 > 5

- Fn/Fn+1 = 3.142e-15/1.385e-16 = 22.68 > 5

- λn-λn-1 = 7457.89 - 6156.36 = 1301.53 < 2500A

- λn+1-λn = 7503.68 - 7457.89 = 45.79 < 2500A

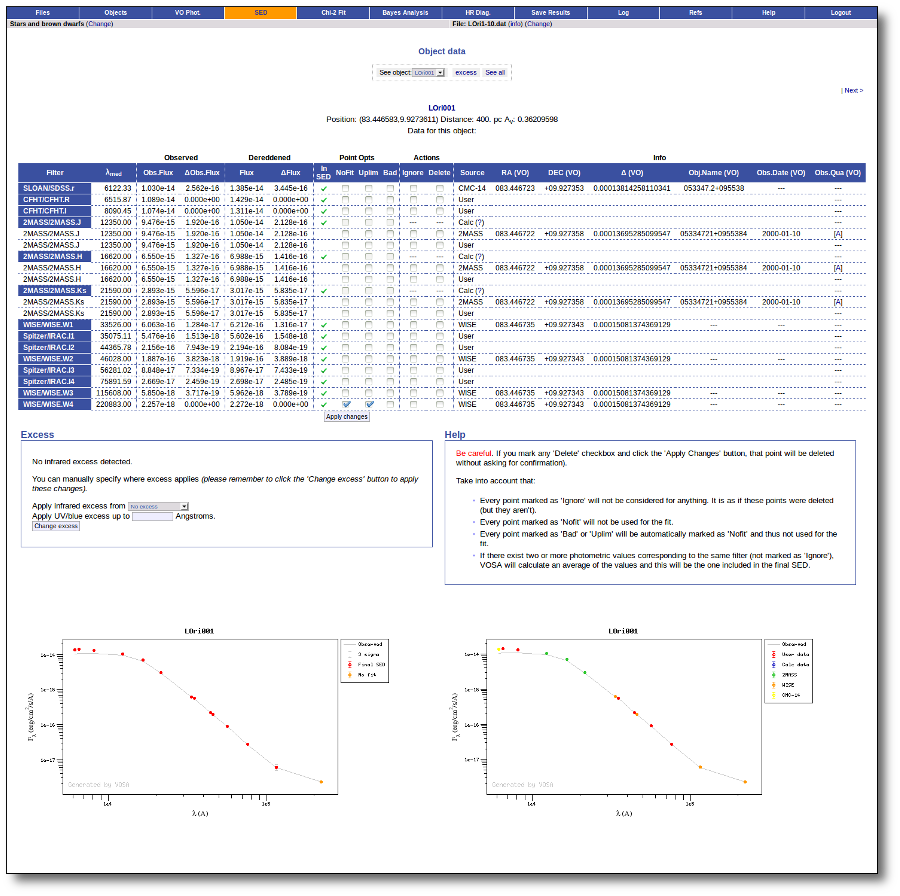

Object SED

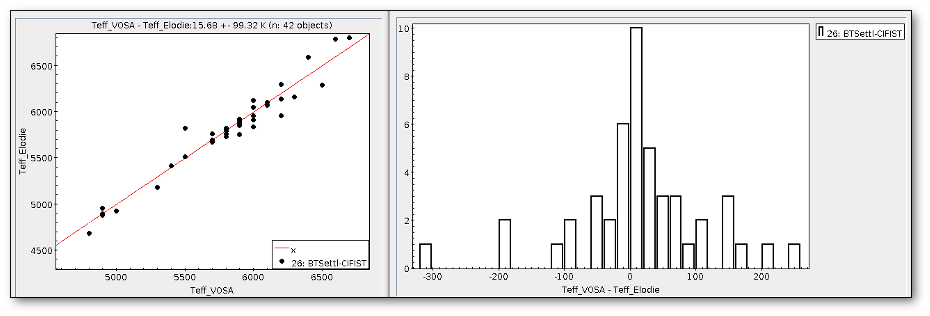

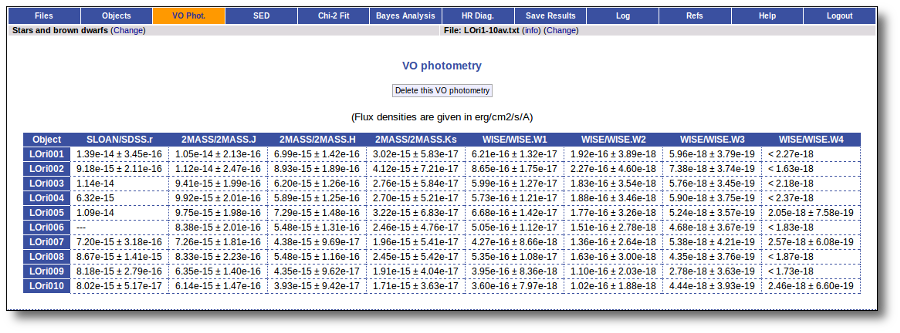

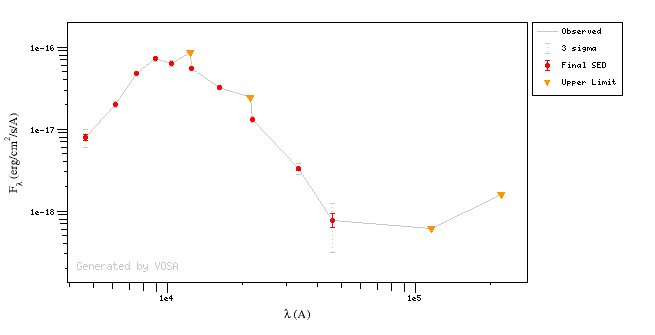

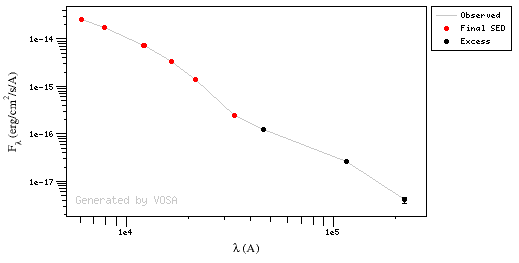

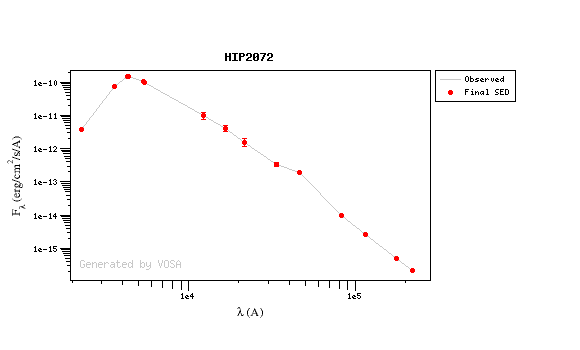

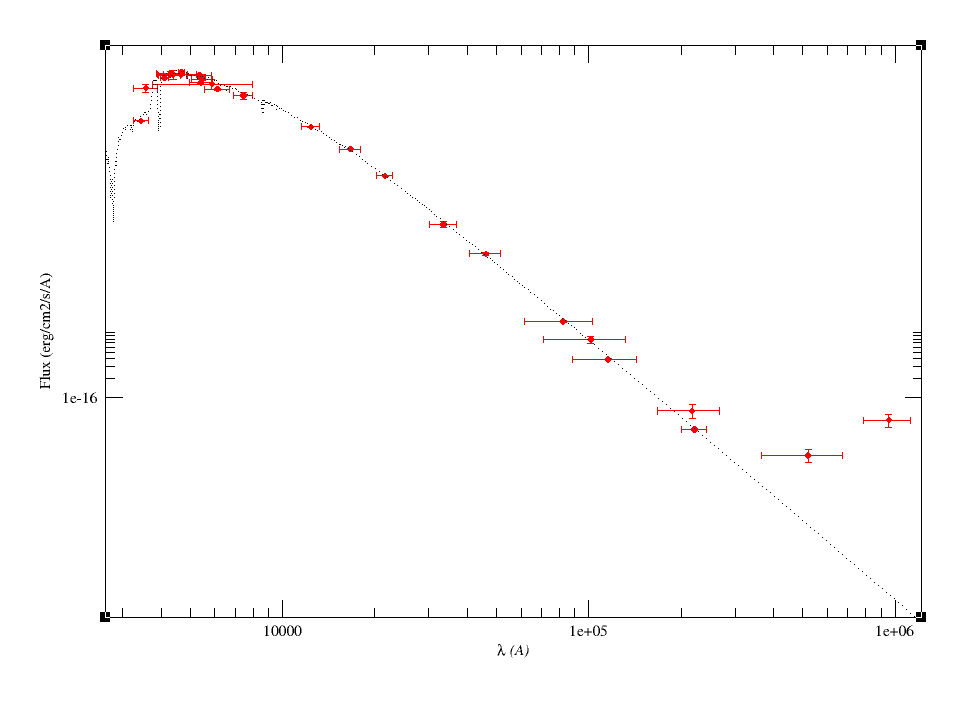

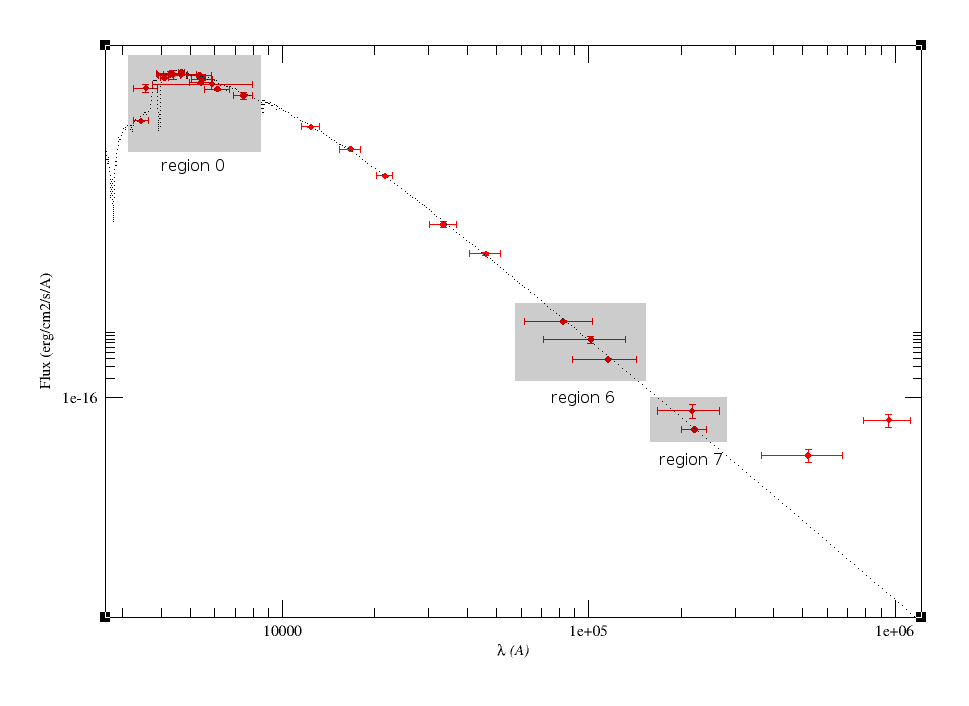

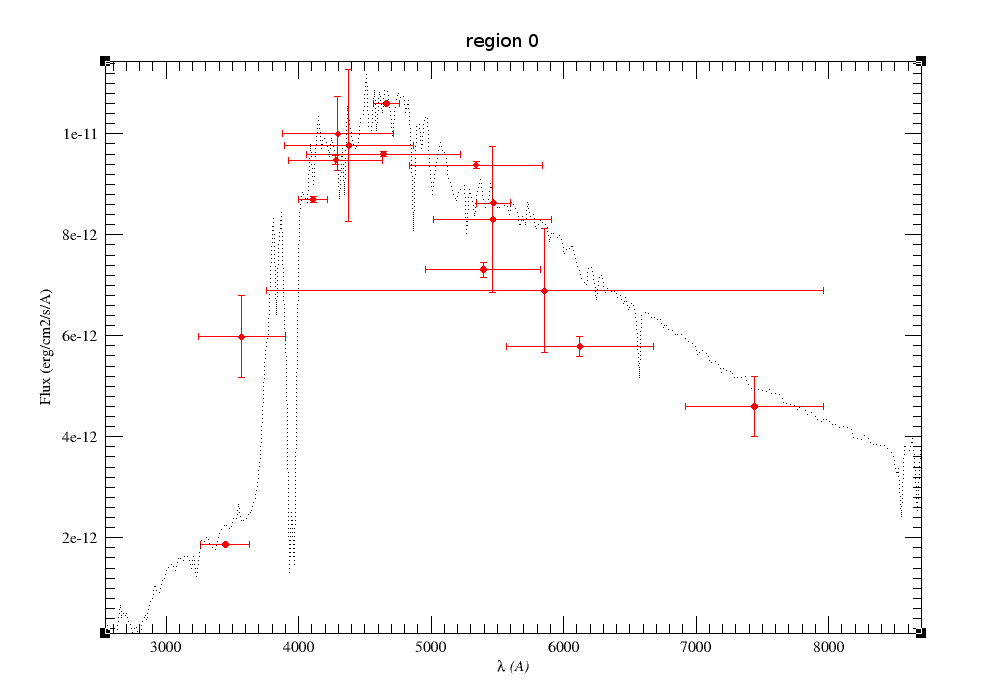

VOSA helps to build a Spectral Energy Distribution (SED) for each object in the file combining user input data with data obtained from VO catalogues, taking into account extinction properties for deredening the observed fluxes and marking photometric points where IR or UV excess is detected.

In the SED section of VOSA you can visualize how the final SED has been built, what points have been considered, where the photometric points come from (VO catalogue, user input, etc), some properties of the data when coming from VO catalogues (including data quality when available) and, finally, where an IR excess has been detected by VOSA.

You can also edit the final SED and make decisions about what points are considered and how they enter the final SED. This is specially tricky when there are different photometric values for the same filter (coming from the user input file and/or VO catalogues).

Point options and actions

There are some options that allow you to decide how the final SED is built:

- Delete: Be careful. If you mark the 'Delete' checkbox for any point and then click the 'Apply Changes' button, this point will be deleted from the SED without confirmation. And you will not be able to undo the operation. So please, be careful.

- Ignore: If you mark the 'Ignore' checkbox for a point, this point will be ignored. It is as if it is deleted but it will be there so that you can recover it later if you want. It will not be part of the final SED, it will not be considered to make averages if there are more points for the same filter, it will not be shown in the SED plots...

- Nofit: Points marked as 'nofit' will be considered for the final SED but they will not be used in the model fits (chi-square or bayes). The point will be shown in plots in a different color.

- Uplim: Points marked as 'uplim' are assumed to be upper limits, not actual photometric values. But remember that VOSA does not really consider upper limits in the fits. These points will be authomatically marked as 'nofit' too. They will be shown in plots in a different color and they won't be used for the fits.

- Bad: Points marked as 'bad' are assumed to be points with bad quality for whatever reason. They will be marked as 'nofit' too. In some cases VOSA authomatically marks a VO photometry point as 'bad' when we know how to detect 'bad quality' in that particular VO catalogue.

Several values for the same filter

In some cases it happens that there are several observed photometric values for the same filter. For instance, if you have given a value for one filter in your input file and another value is found, for the same filter, in a VO catalogue.

When this happens, VOSA will calculate an average of the different values and this average is the value that goes to the final SED.

The average is calculated as: $$ \overline{F}=\frac {\sum ( {\rm F}_{\rm i}/\Delta{\rm F}_{\rm i} )}{\sum ( {1}/\Delta{\rm F}_{\rm i} )}$$ $$\Delta\overline{F} = \sqrt{\sum \Delta{\rm F}_{\rm i}^2}$$ if the observed error for any of the involved fluxes is zero, the value of the error that will be used in this calculation will be $$\Delta{\rm F}_{\rm i} = 1.1 \ {\rm F}_{\rm i} \ {\rm Max}(\Delta{\rm F}/{\rm F})$$ (so that it is the biggest relative error, that is, the smallest weight).

If it happens that all errors are zero, the average will be done withouth using weights.

Take into account that:

- You cannot play with the options (delete, ignore, bad, uplim, nofit) for 'Calc' points. These are calculated by VOSA. You just can play with the particular observed points that are used to calculate the average value.

- Values marked as 'ignore' won't be used in the average. Thus, if there are three possible values (for instance, one from user input, one from catalogue A and one from catalogue B) and you want to use one of them (so that VOSA does not calculate an average) you only need to mark the other two ones as 'ignore'.

- If any of the points that are used to calculate an average is marked as bad,uplim or nofit, this property is inherited by the average. Let's say that the 'average' point can't be "better" than the "worst" element used for calculating it.

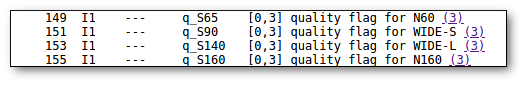

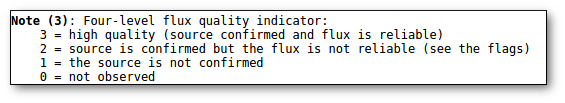

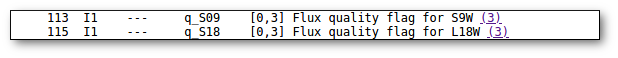

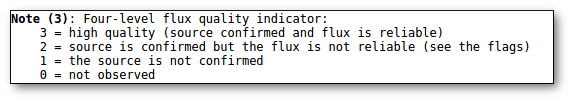

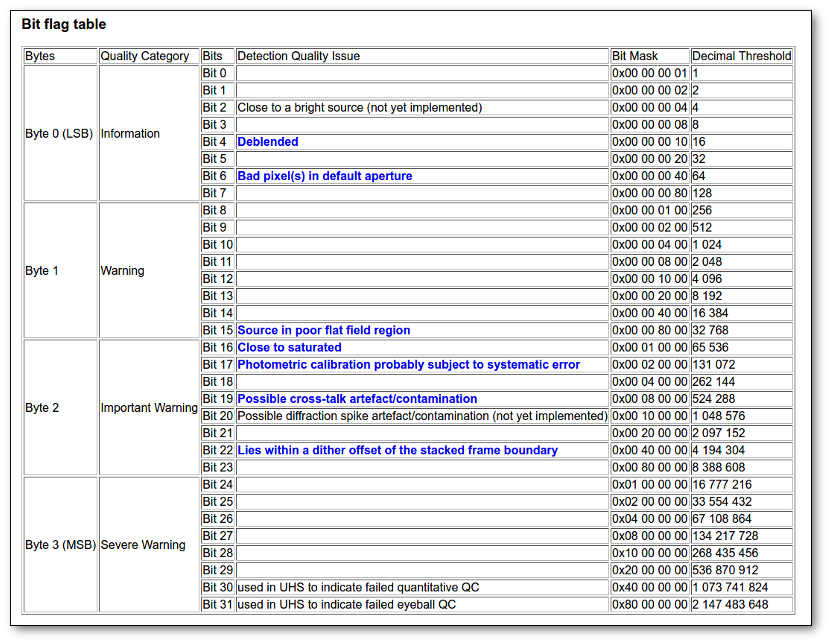

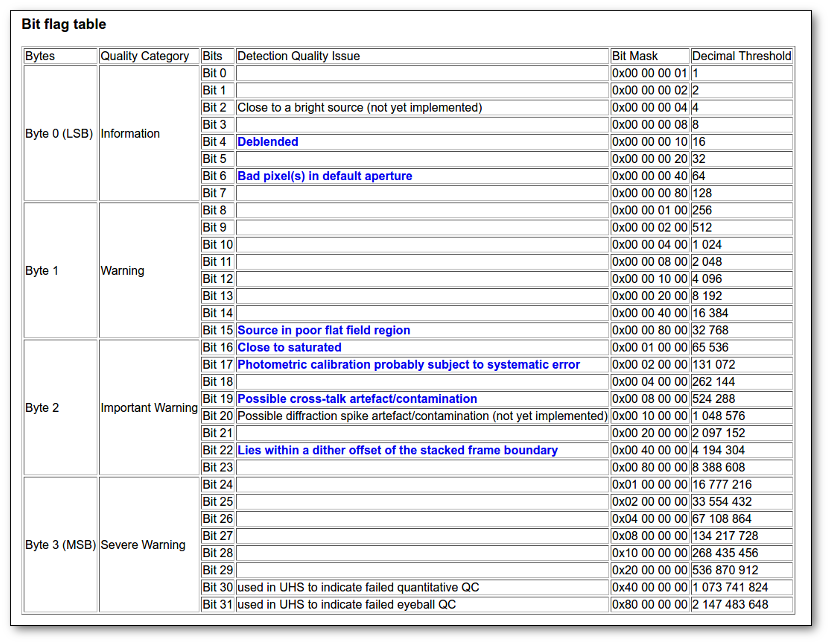

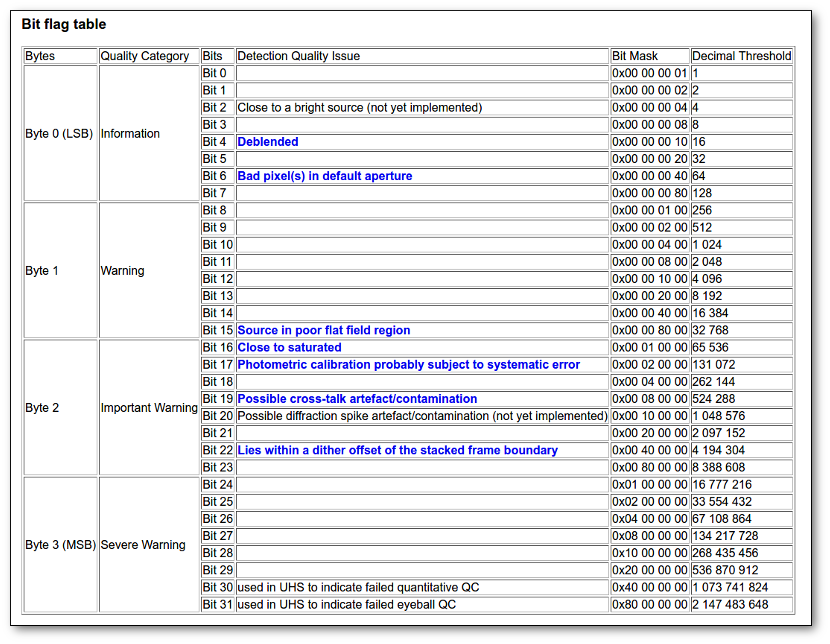

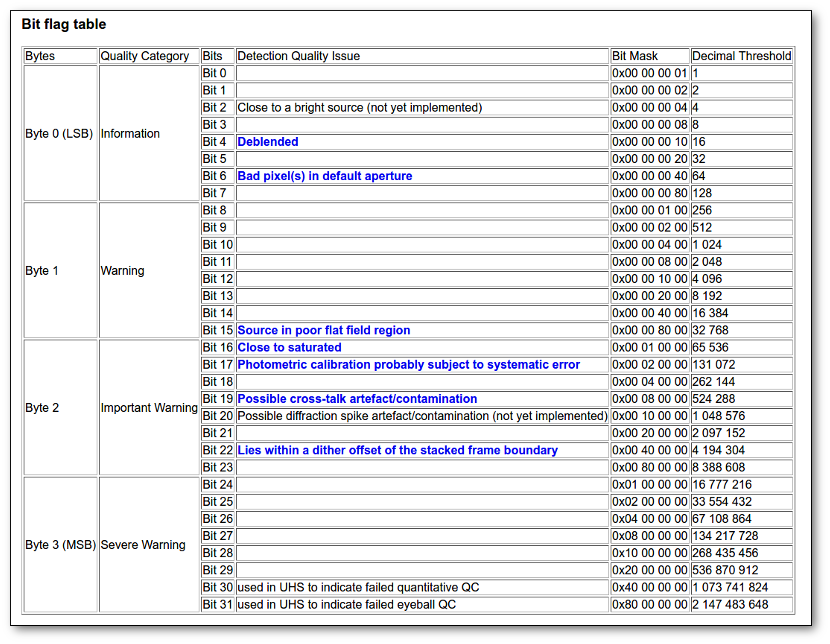

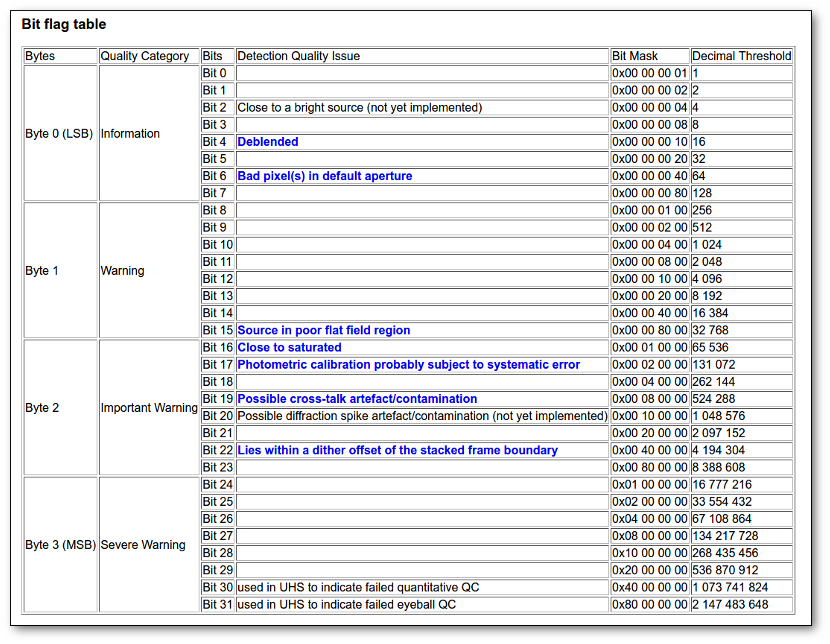

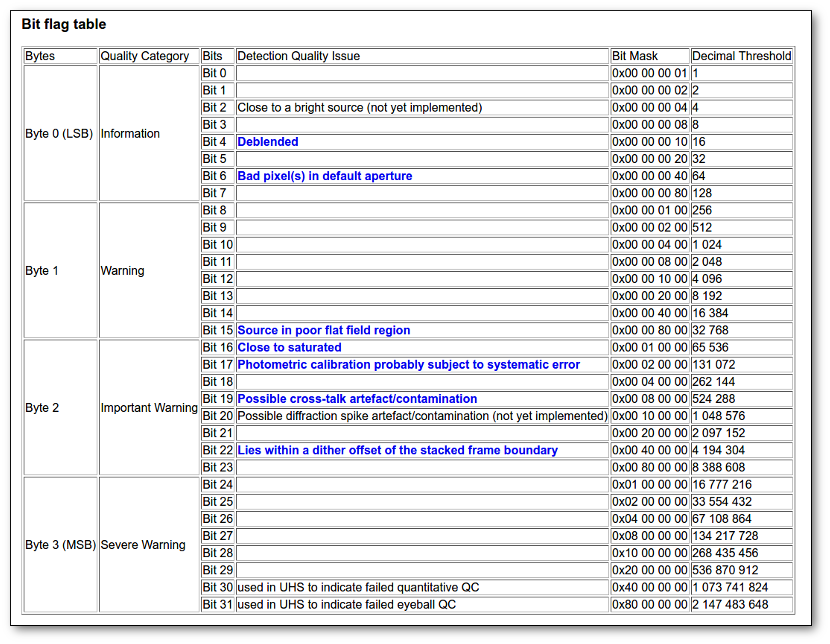

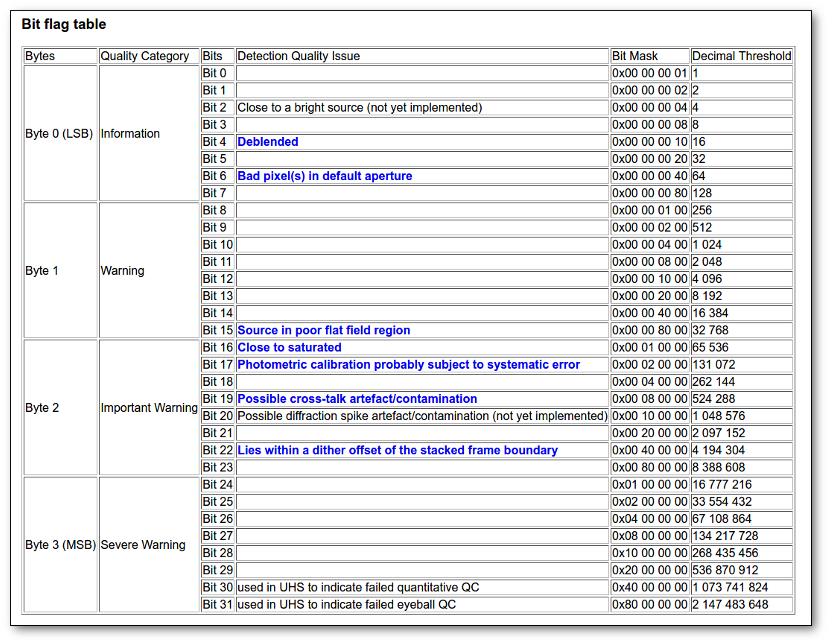

VO photometry information

When available, you will see, for each point coming from a VO catalogue, some information that we have extracted from the catalogue to help you to decide if you want to incorporate it to the final SED or not.

- RA (VO): RA coordinate (degrees) given in the catalogue for this point.

- DEC (VO): DEC coordinate (degrees) given in the catalogue for this point.

- Δ (VO): angular distance from the object position to the position given by the catalogue. If it is large, you could consider the posibility that this entry corresponds to a different object.

- Δ_2 (VO): angular distance from the object position to the position given by the catalogue for the second closest object. If this is small, or similar to Δ (VO) it could mean that the obtained photometry does not correspond to this object but to some counterpart and you should be cautious.

- Nobjs: number of objects found within the search radius (if there are more than 5 the value is shown as 5+). If this value is larger than 1 it could mean that the obtained photometry does not correspond to this object but to some counterpart and you should be cautious.

- OBJName (VO): object name in the VO catalogue.

- Obs.Date (VO): observation date as given in the VO catalogue.

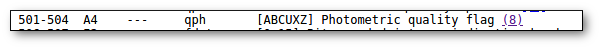

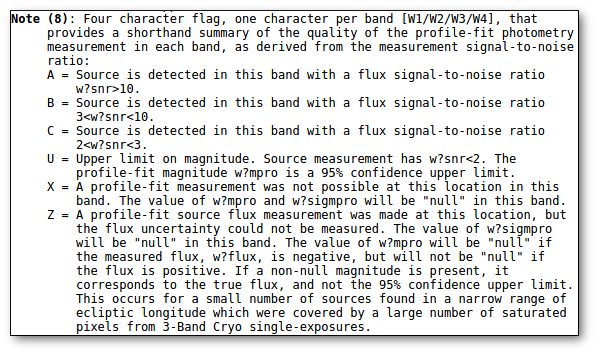

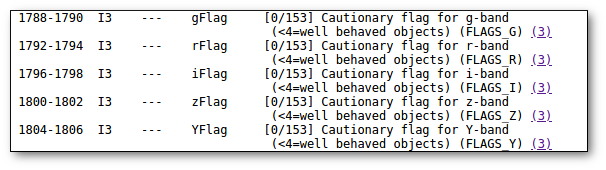

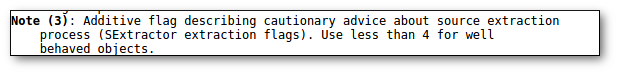

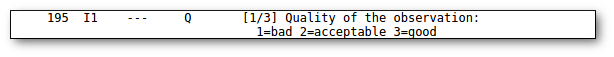

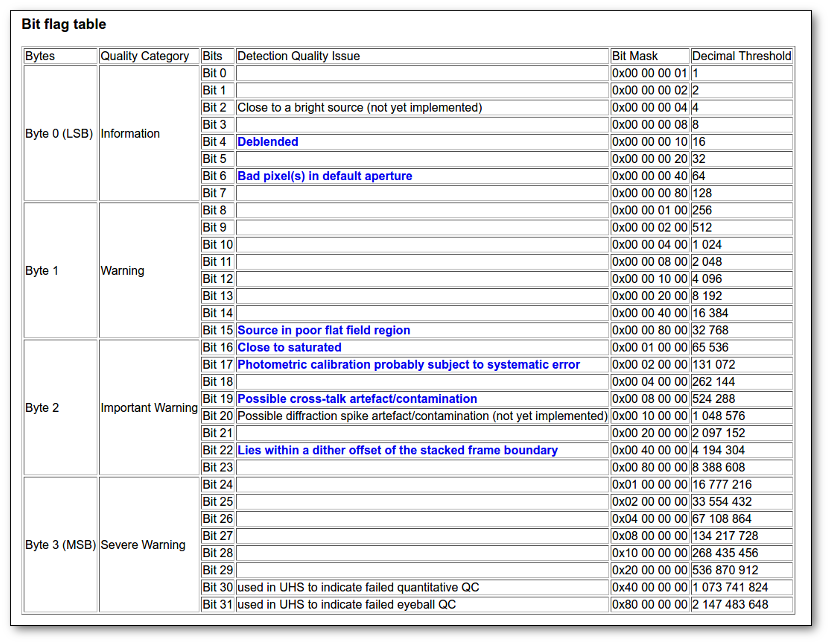

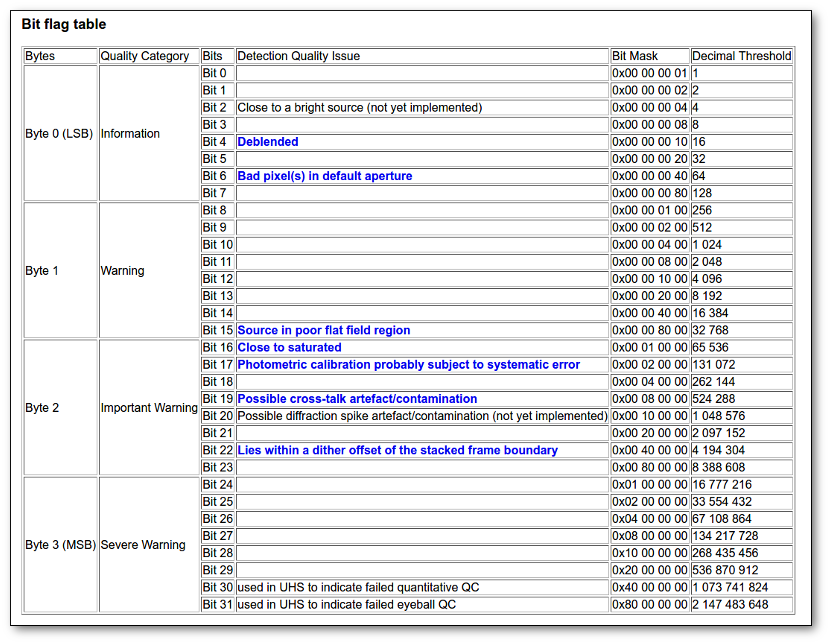

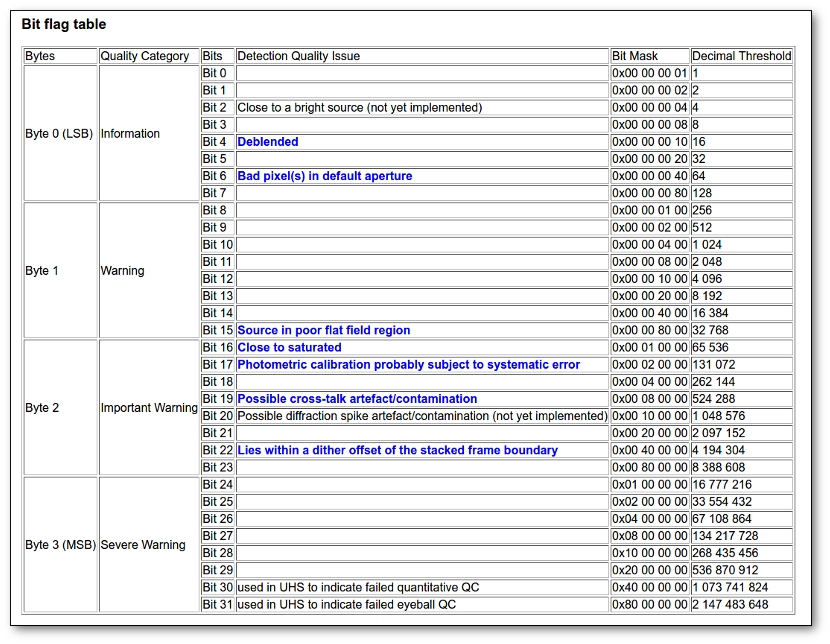

- Qual (VO): quality flag as given by the VO catalogue. When this info is available you will usually be able to click on the flag to access the information about the catalogue and the meaning of each flag.

An example

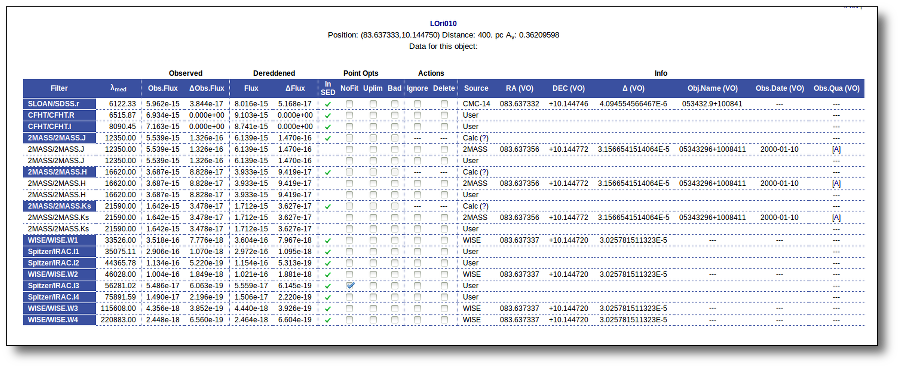

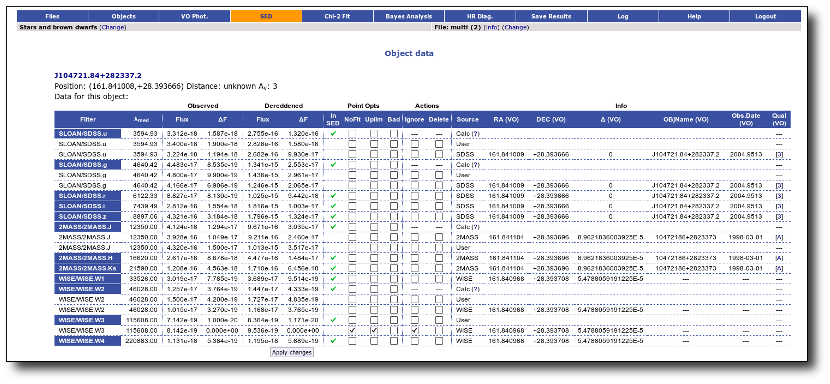

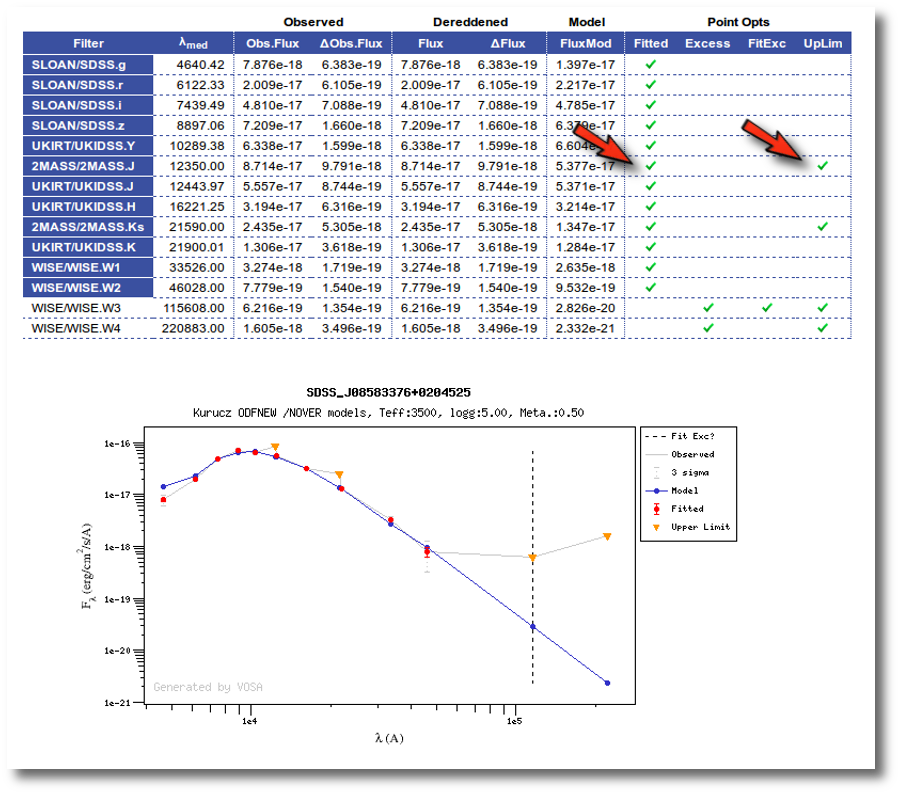

For instance, in this case (click in the image to enlarge):

- For SLOAN/SDSS.u, 2MASS/2MASS.J and WISE/WISE.W2 there were two values for each filter, one from user input and one from a VO catalogue. VOSA has calculated an averate and it will be used in the final SED.

- For SLOAN/SDSS.g there are two values too. But, as one of them has been marked as 'nofit', the final average has been automatically marked as 'nofit' too.

- For WISE/WISE.W3 there are two values too. But the one coming from the WISE VO catalogue has been marked as ignore. Thus, this point is ignored and only the user value is there to be considered. VOSA does not need to calculate an average and the user value goes directly to the final SED.

SED download

When you download the final resuls (see ) you will get a file (xml and/or ascii) with the final SED for each object. Most of the information is the same shown in the SED section of VOSA, but with some peculiarities.

When a data point has been calculated as an average of the photometry coming from different services (or user input file) some of the columns in the SED final file are built in terms of the original values for each catalogue. In particular:

- Δ (VO): this is the MAXIMUM value of all the Δ values for the data points combined to build this SED point.

- Δ_2 (VO): this is the MINIMUM value of all the Δ_2 values for the data points combined to build this SED point.

- Nobjs: this is the MAXIMUM value of all the Nobjs values for the data points combined to build this SED point.

- Qual (VO): if, for all the points combined to build this final SED point, the quality flag is the same, that value is shown here. Otherwise it is shown as 'mix'.

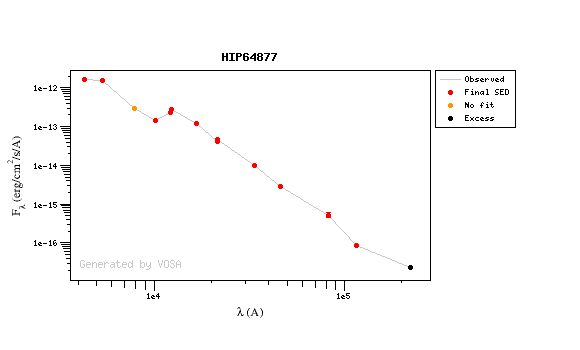

Excess

Most of the models used by VOSA for the analysis of the observed SEDs include only a photospheric contribution.

But the observed SED for some objects can include the contribution not only from the stellar photosphere but also from other components as disks or dust shells.

In these cases, some excess will appear and using the full SED for the analysis can be misleading.

Thus, VOSA offers the option to mark some part of the SED as "UV/Blue excess" or "Infrared excess" so that the corresponding points are not considered when the SED is analyzed using photospheric stellar models.

Infrared excess

VOSA tries to automatically detect possible infrared excesses.

Since most theoretical spectra used by VOSA correspond to stellar atmospheres only, for the calculation of the Χr2 in the 'model fit' the tool only considers those data points of the SED corresponding to bluer wavelengths than the one where the excess has been flagged.

(Some models, as the GRAMS ones, include other components as dust shells around the star. For those cases the points marked as 'infrared excess' will be also considered in the model fit).

The last wavelength considered in the fitting process and the ratio between the total number of points belonging to the SED and those really used are displayed in the results tables.

The point where infrared excess starts is calculated, for each object, when you upload an input file, but it is also recalculated whenever the observed SED changes, that is:

- When VO photometry is added to the SED.

- When you delete a point in the SED or change something in the "SED" tab.

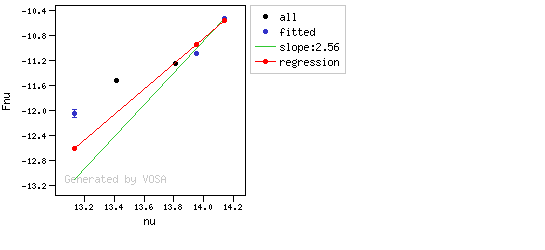

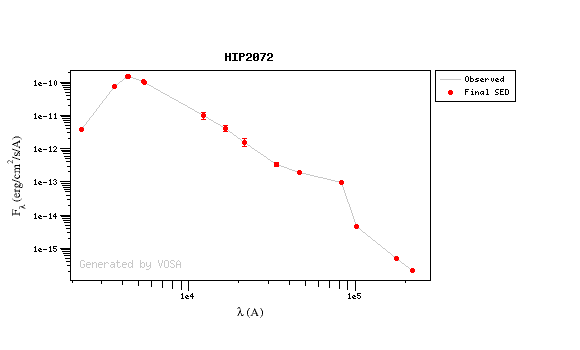

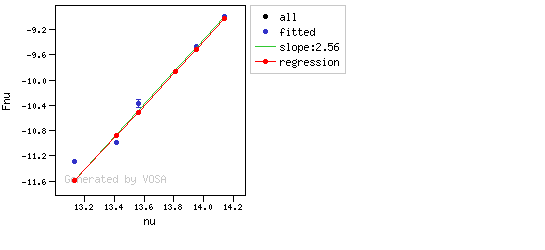

The excesses are detected by an algorithm based on calculating iteratively in the mid-infrared (adding a new data point from the SED at a time) the α parameter from Lada et al. (2006) (which becomes larger than -2.56 when the source presents an infrared excess). The actual algorithm used by VOSA is somewhat more sophisticated. A more detailed explanation is given below.

Apart from the automatic estimation made by VOSA, you can override this value specifying manually the point where infrared excess starts (so that more or less points are taken into account in the model fit) using the SED tab. Take into account that if you change the SED later (adding VO photometry or deleting a photometric point) this value will be recalculated again by VOSA.

It is also possible to specify the point where infrared excess start, for each object, as an 'object option' (10th column) in your input file. If you want to do this you have to include 'excfil:FilterName' (for instance: excfil:Spitzer/IRAC.I1) in the 10th column of the file. If you do that VOSA will not calculate the infrared excess for this object on upload and will accept the value given in the input file. But take into account that, if you change the SED later (adding VO photometry or deleting a photometric point) VOSA will recalculate the value even in this case.

Finally, you also have the possibility of changing the point where infrared excess starts for all objects at the same time. In order to do that, go to the SED tab and look for the "excess" link in the left menu. Once there, you have a form where this can be done.

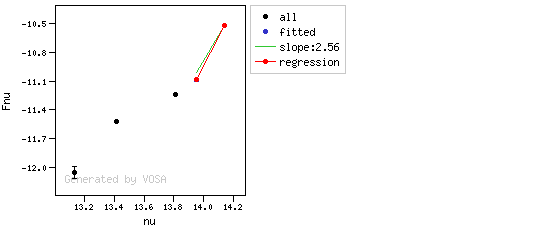

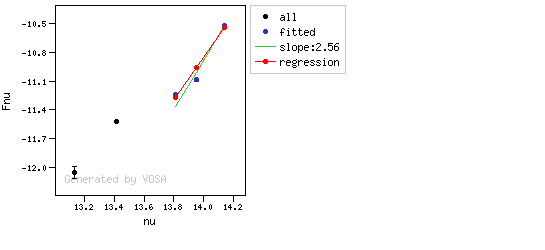

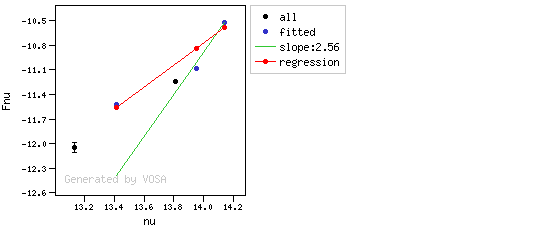

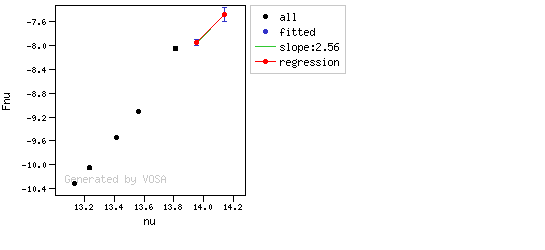

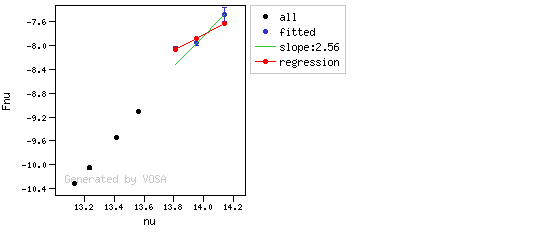

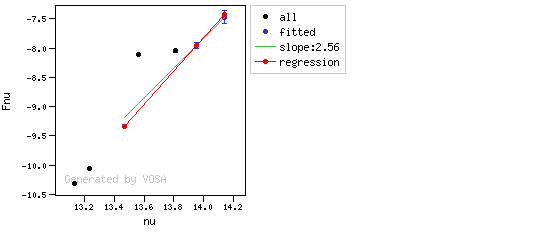

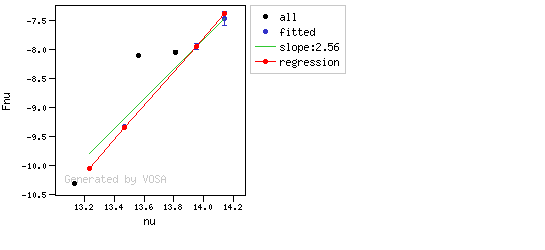

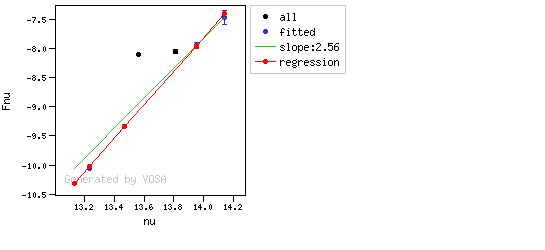

IR excess automatic detection algorithm

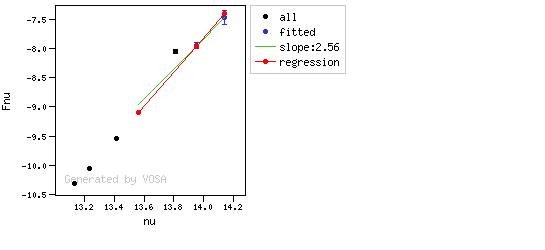

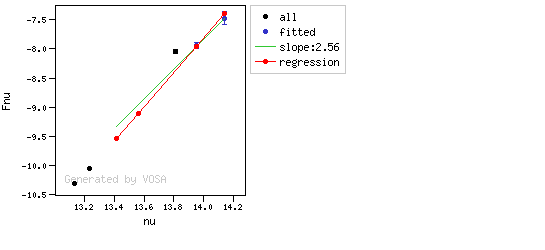

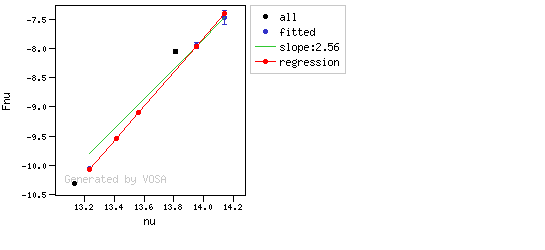

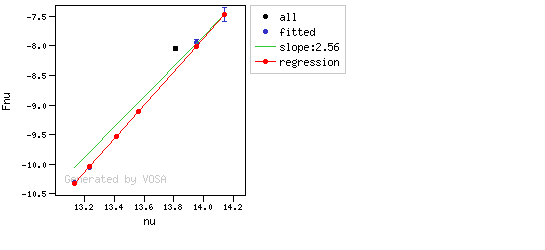

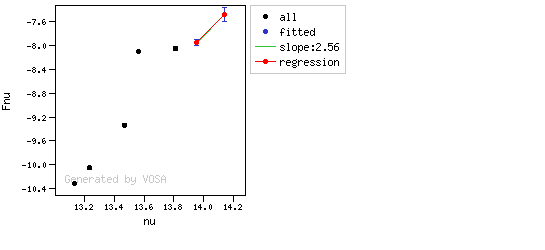

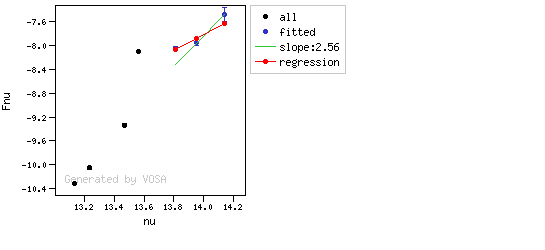

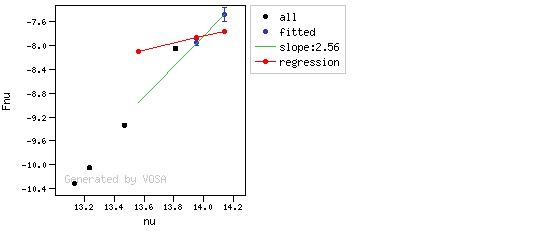

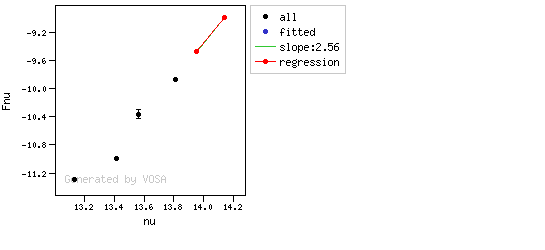

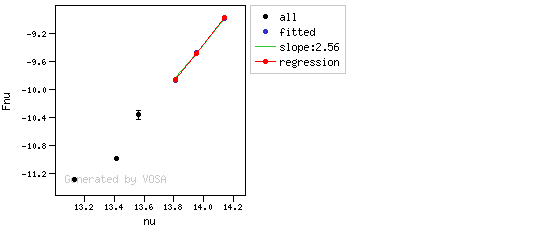

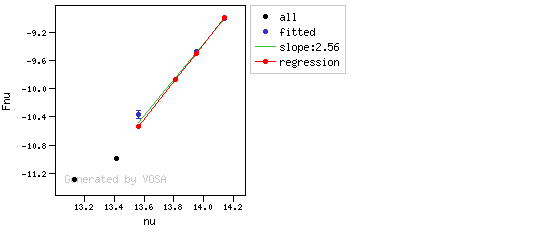

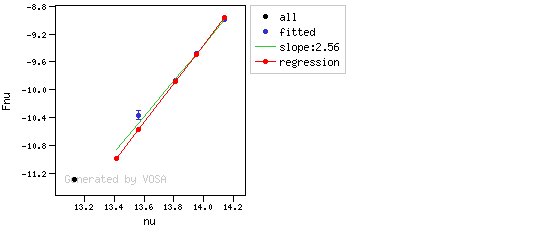

The algorithm used by VOSA to estimate the presence of infrared excess is an extension of the in the idea presented on Lada et al. (2006).The main idea is calculating, point by point in the infrared, the slope of the regression of the log-log curve showing $\nu F_{\nu}$ vs. $\nu$. At a first approximation, when this slope becomes smaller than 2.56, infrared excess starts.

In what follows, when we talk about regressions, we mean the regression of $y=log(\nu F_{\nu})$ as a function of $x=log(\nu)$, and taking into account observational errors as a weight for the regression. From error propagation, the "y" errors can be calculated as $\sigma(y) = \sigma(F_{\lambda})/(\ln10 F_{\lambda})$.

In order to avoid false detections due to "bad" photometric points, we refine the procedure as follows:

- We start at the first photometric point with $\lambda > 21500 A$.

- Points labeled as "nofit" are not considered in the algorithm.

- For each point (but the first one) we calculate:

- The linear regression of all the points from the first to this one (without taking into account those already labeled as "excess suspicious", see bellow).

- The $y$ value that would correspond to this point for a straight line starting on the first point and with slope=2.56. We call it $y_{\rm L}$.

- We mark the point as "excess suspicious" if it matches both of the following two criteria:

- The regression slope (b), plus the error in the slope, is smaller than 2.56, that is: $$b+\sigma(b) < 2.56 $$

- The observed value of $y$ is at least $3\sigma$ above the one predicted by the line with slope 2.56, that is: $$(y_{\rm obs} - y_{\rm L} ) > 3 \sigma(y)$$

- Points marked as "suspicious" will not be taken into account in further regressions.

- If two consecutive points are "suspicious" then VOSA marks the first of those points as the beginning of infrared excess.

- If one pointis suspcious and the next one isn't, then nothing happens. The first point (the suspicious one) will not be taken into account in further regressions, but we continue inspecting the next points.

- If the last point in the SED is suspicious, i.e., it matches both excess criteria, then that point is considered the beginning of inrrared excess even though the previous one did not match the criteria.

Apart from this, one more final criterium is applied. The slope (calculated as explained above) for at least one of the last two points in the SED must be sigma-compatible with being smaller than 2.56. $$b-\sigma(b) < 2.56$$

If this does not happen for any of the last two points, then there is no excess in the SED. The idea is that, if the infrared excess starts in some point it must continue for larger wavelengths. If that does not happen, any previous apparent detection of excess will be probably due to some "evil" combination of misleading points. In summary:

- The slope for at least one of the last two points in the sed must fulfill: $$b-\sigma(b) < 2.56$$

- two consecutive points must fulfill: $$b+\sigma(b) < 2.56 $$ $$(y_{\rm obs} - y_{\rm L} ) > 3 \sigma(y)$$ and then the infrared excess starts at the first of the two points.

- Or, if the last point in the SED meets those two criteria, even if the previous didn't, then the excess starts at the last point.

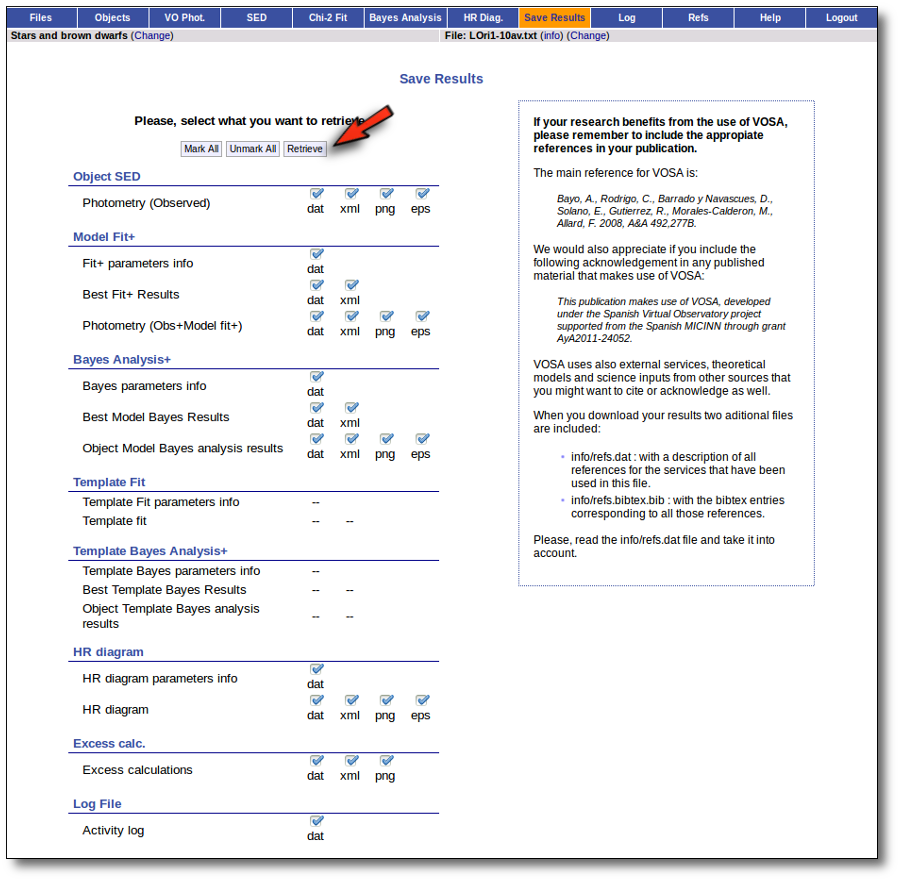

In the "Save Results", the user will be able to download files with a summary of the excess determination and with the details of each linear regression. These summary and details can also be visualized in the "SED" tab.

You can see some detailed examples of these calculations.

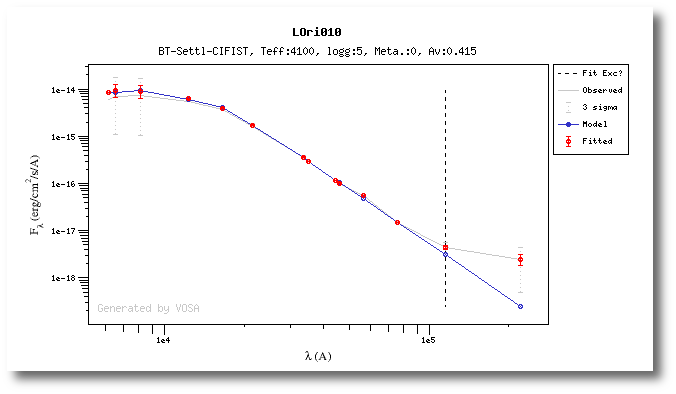

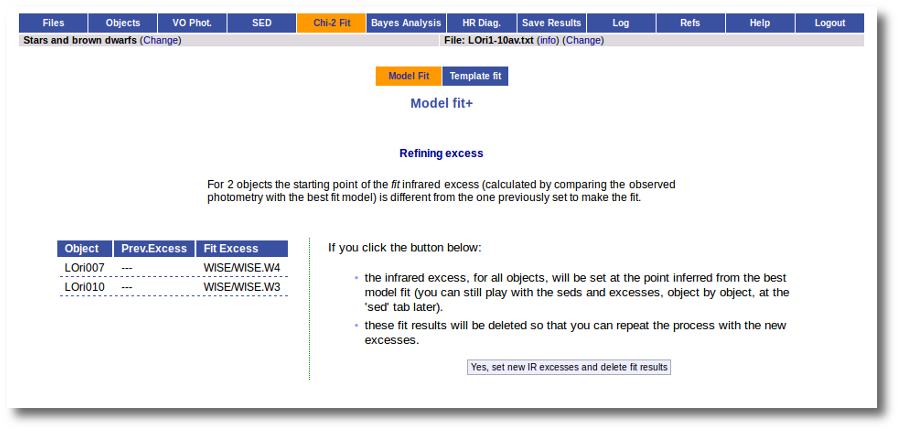

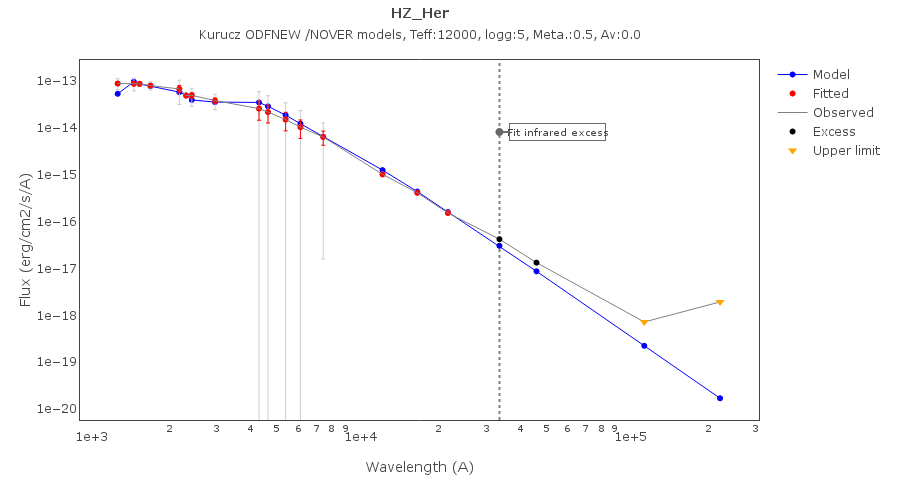

Fit refinement of the IR excess

When a model fit is completed, VOSA compares the observed SED with the best fit model synthetic photometry and makes a try to redefine the start of infrared excess as the point where the observed photometry starts being clearly above the model.

The procedure is as follows:

- If there is a point previously marked as the start of infrared excess VOSA starts the checking at that point.

- If not, VOSA starts in the medium point among those with λ > 21500A.

- For each point, VOSA checks for two criteria: $$\frac{F_{obs}-F_{mod}}{\Delta F_{obs}} > 3$$ $$\frac{F_{obs}-F_{mod}}{F_{mod}} > 0.2$$ that is, in plain words: the observation must be above the model, at least at a 3σ level, and the difference between both must be "significant".

- Both criteria must be fulfilled to consider that a point has excess (unless $\Delta F_{obs}=0$, that only the second crterium can be applied).

- If the criteria are fulfilled at the first point (thus, suspicious of 'fit excess') we check the previous point (smaller wavelength) and continue till one point doesn't match the criteria.

- If the criteria are NOT fulfilled at the first point (thus, no 'fit excess' detected) we check the next point (bigger wavelength) and continue till one point matches the criteria.

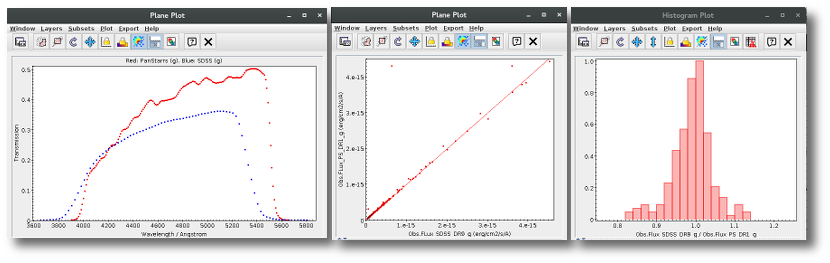

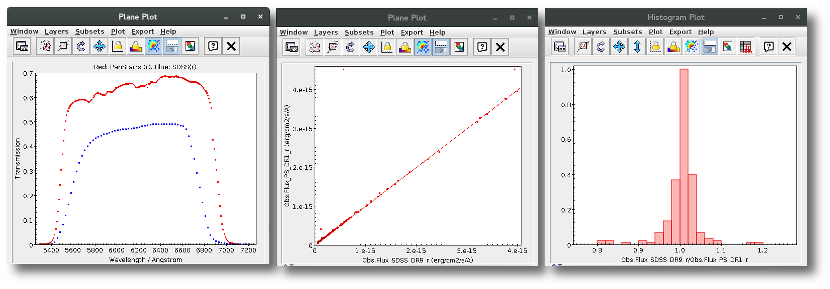

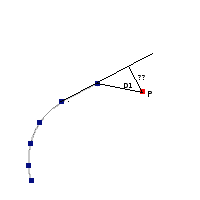

Let's see some examples.

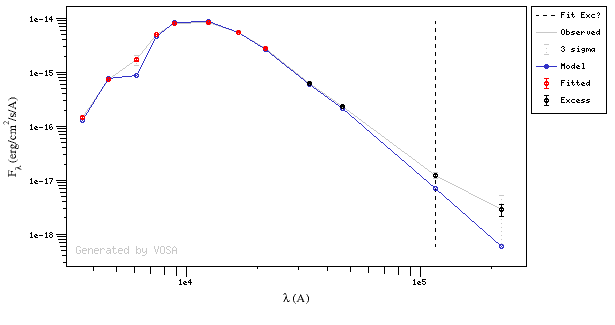

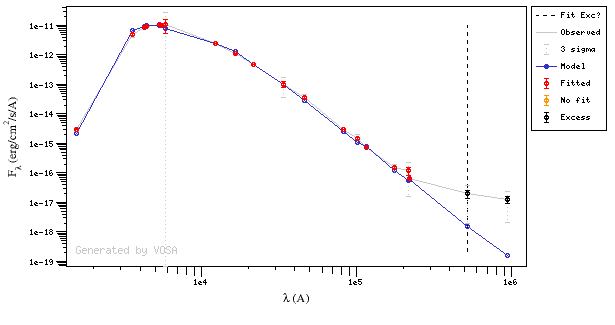

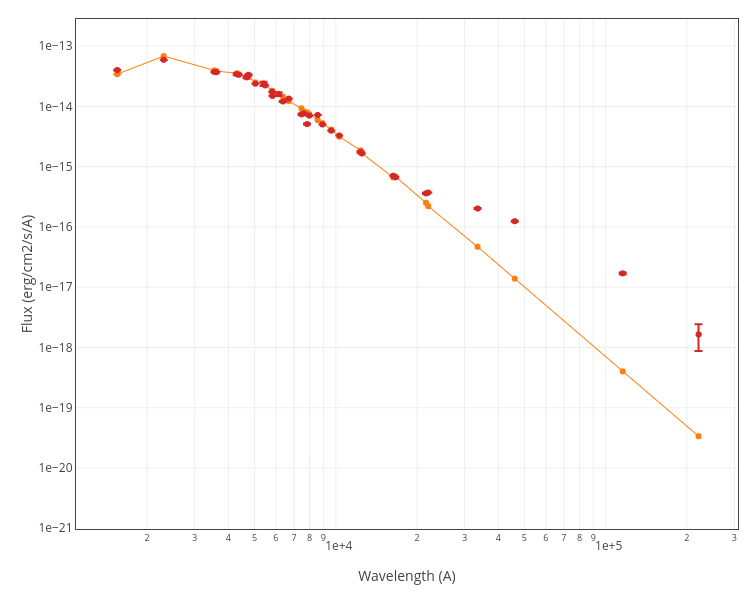

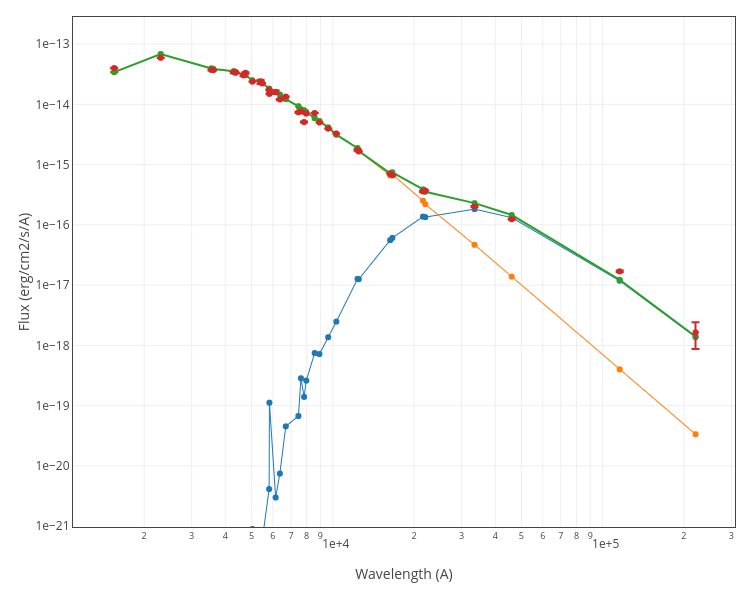

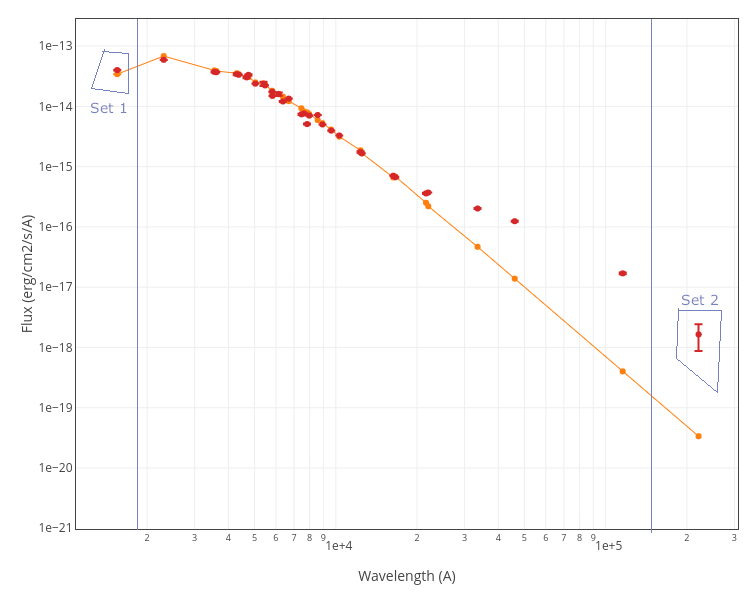

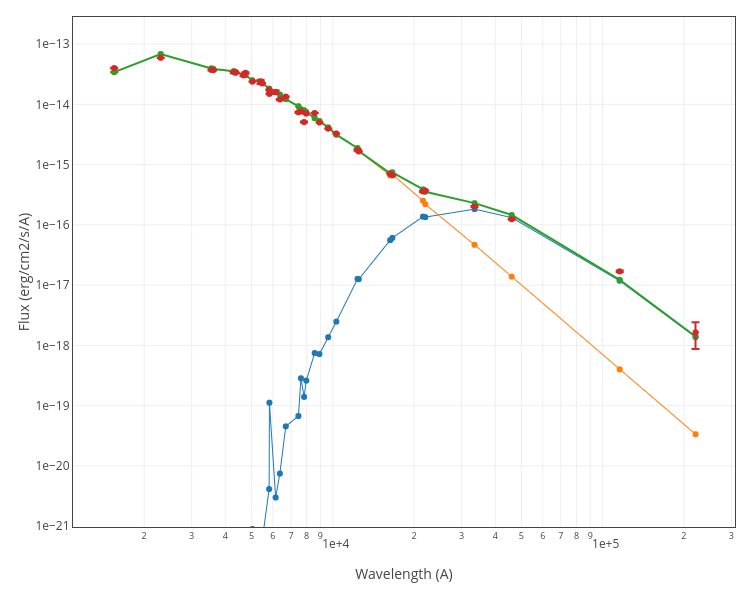

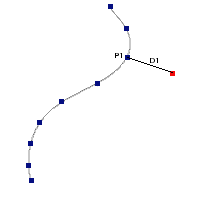

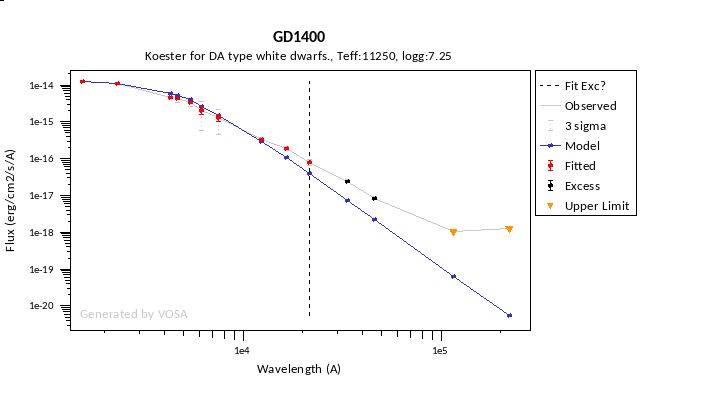

In the next case, when comparing the observed photometry with the model, VOSA sugests that the real infrared excess starts later than when the automatic algorith had detected:

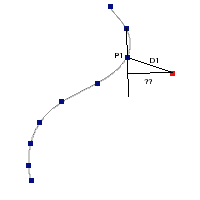

In this image, looking at the fit, there is no apparent infrared excess (although the automatic algorithm had detected it):

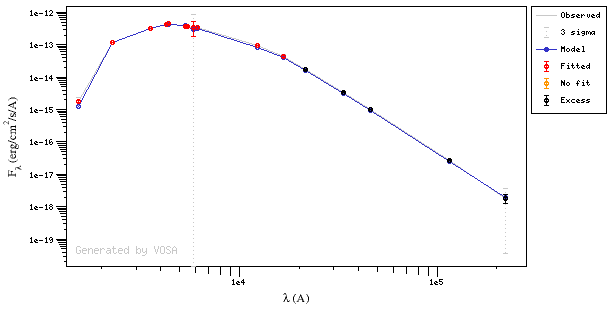

In the following case, according to the "fit excess" criteria there is no infrared excess. This is due to the big observational errors. Instead, the automatic algorithm had detected it:

Onthe other hand, there are cases where the automatic detection algorith had not detected infrared excess but according to the fit, we see some excess:

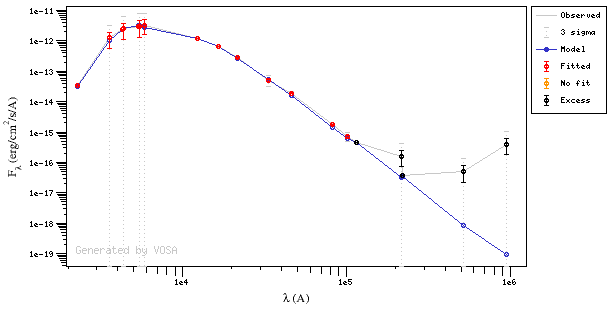

And, obviously, in many cases both algorithms give the same result:

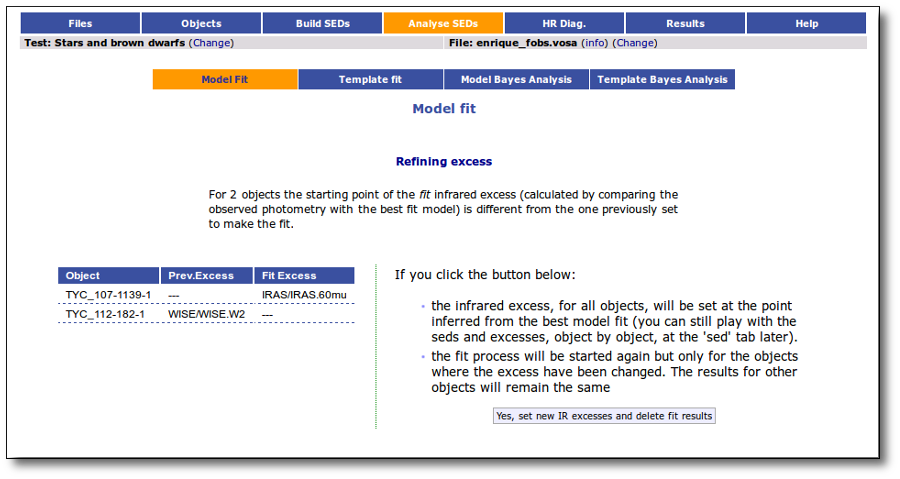

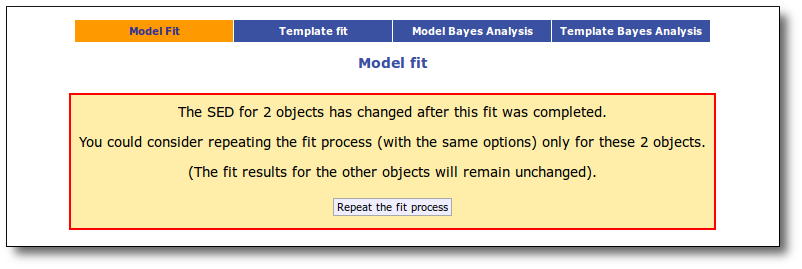

If for some objects the IR excess starting point calculated in this way is different from the one previosly calculated by the automatic algorithm, VOSA offers you the option to "Refine excess". If you click the corresponding button you will see the list of objects where this happens, the filters where excess starts according to both algorithms for each case, and the possibility of marking the start of infrared excess in the point flagged by the fit refinement instead of the one previously calculated by VOSA. If you choose to do this, and given that this would change the number of points actually used in the fit for those objects, the fit results are deleted and you have to restart the fit process. But, in what follows, the IR starting point will be the one suggested by the previous fit.

UV/blue excess

In some cases, there is also some excess in the bluer (UV) part of the SED.

VOSA does not detect this automatically, but you can specify it so that the application does not consider these points in the fits either.

The UV/blue excess can be set in two different ways:

- Including it in the input file as an 'object option' with the syntax Veil:VALUE, where VALUE is the value in Angstroms of the last wavelength where UV excess applies. For instance, if you include Veil:6000 in the 10th column of your input file for a given object, all the points with λ<=6000A will be marked as "Blue excess" and they will not considered in the fits.

- Specifying this value manually in the SED tab.

Finally, you also can specify the same UV/blue excess range for all objects at the same time. In order to do that, go to the SED tab and look for the "excess" link in the left menu. Once there, you have a form where this can be done.

This Blue excess, as it happens with the infrared one, will not be taken into account for models that include not photospheric components (as the GRAMS ones).

An example

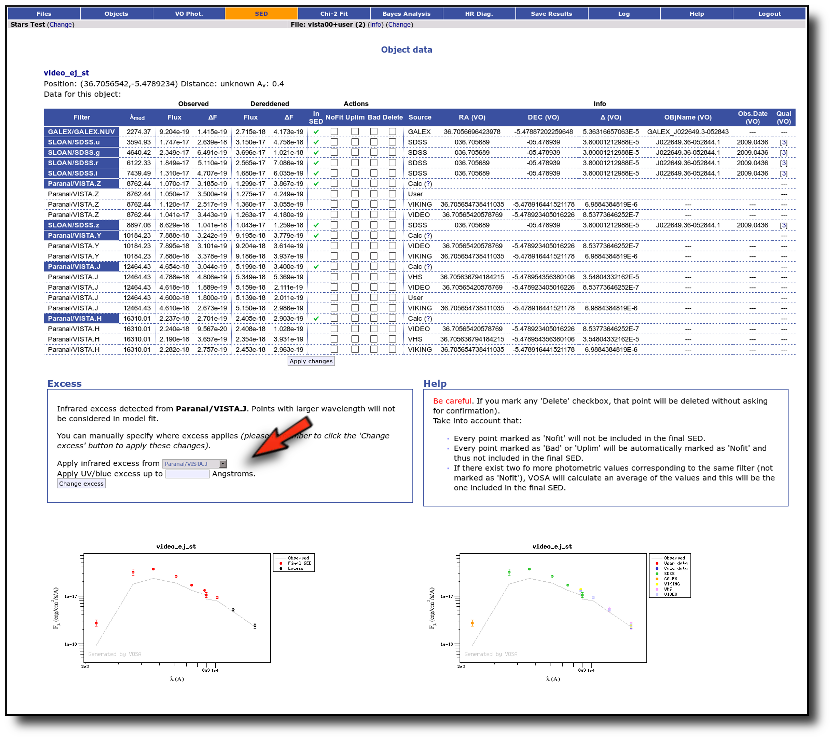

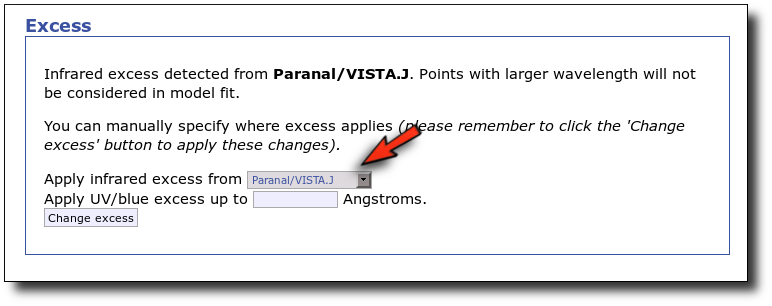

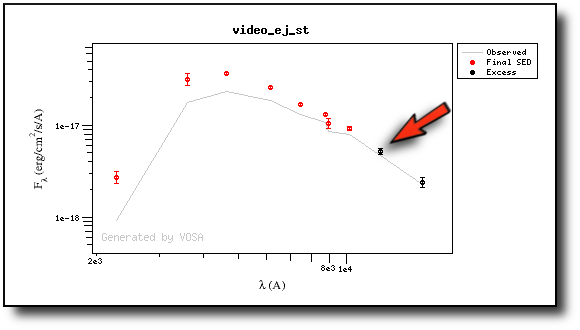

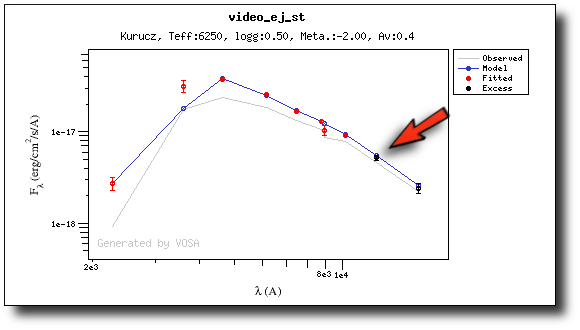

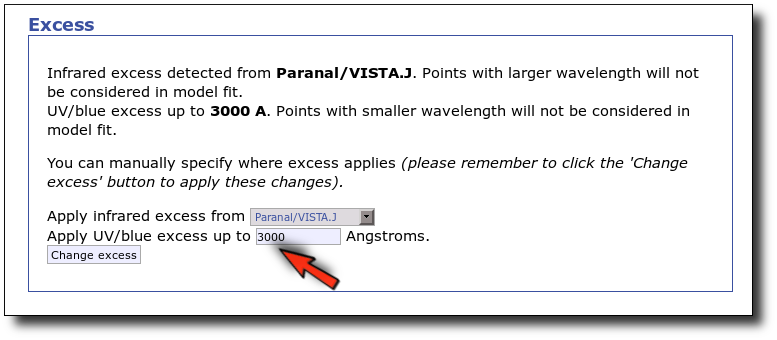

We have an object where VOSA detects infrared excess starting at the Paranal/VISTA.J filter.We are going to consider three different examples.

(1) Infrared excess only

First, we leave the excess as detected by VOSA, starting at VISTA.J.

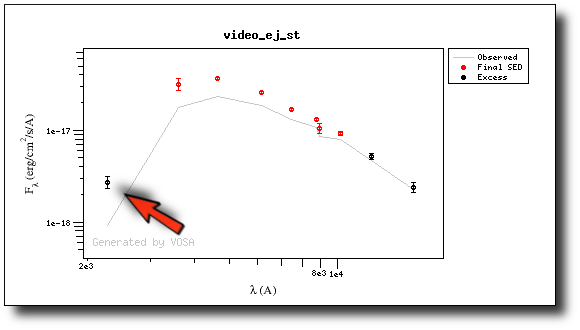

Those points are plotted in black in the SED.

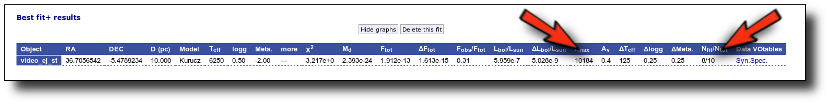

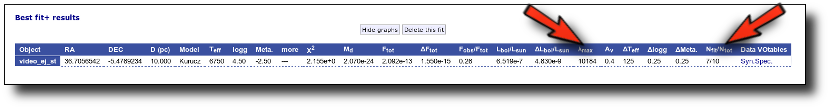

If we make a model fit for this object, the last two points in the SED won't be used. We see, in the results table, that only 8 of the 10 points have been used, and the wavelength of the last point fitted in the SED is the one for VISTA.J

And these two points are shown in black also in the fit plot.

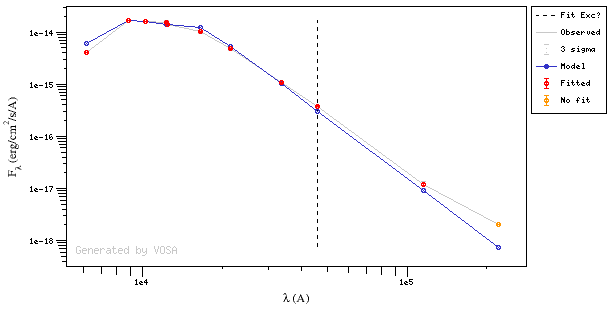

(2) Both UV/blue and infrared excess

Now we decide to go back to the SED tab and we make a change:

- There is also some UV/blue excess up to 3000A (so that the GALEX.NUV point will not be considered for the fit either).

This changes the SED plot accordingly.

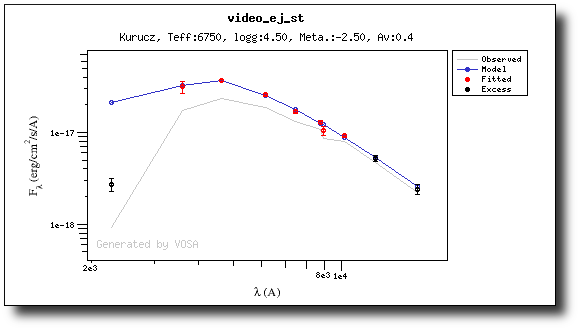

And when we repeat the model fit, the points that are fitted are only those that doesn't have excess now.

Actually, the best fit model is now a different one.

And the points in black in the fit plot are the ones corresponding to the excess that we specified manually (the GALEX.NUV point is not taken into account for the fit).

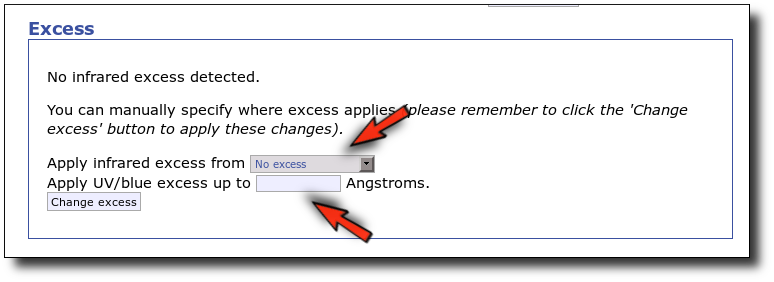

(3) No excess

As a last example, we go back to the SED tab and set that there is no infrared or UV/blue excess.

This changes the SED plot accordingly.

And when we repeat the model fit, all the points are considered for the fit now.

And all the points are shown in ref (fitted) in the plot.

Analysis

VOSA offers several options to analyze the observed Spectral Energy Distributions and estimate physical properties for the studied objects.

First, observed photometry is compared to synthetic photometry for different collections of theoretical models or observational templates in two different ways:

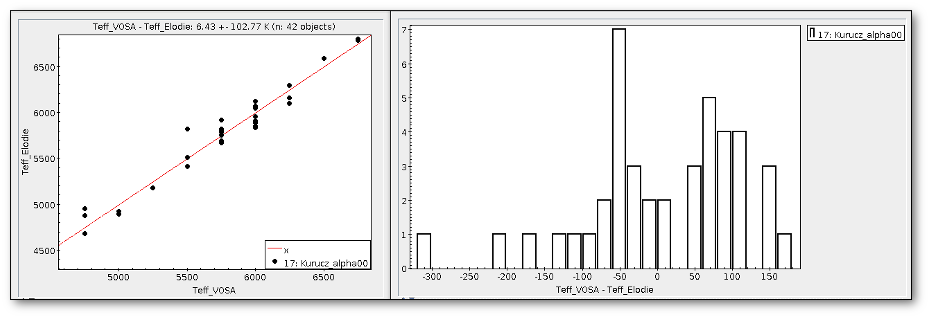

The Chi-square fit provides the best fit model and thus an estimation of the stellar parameters (temperature, gravity, metallicity, ...). It also estimates a bolometric luminosity using the distance to the object, the best fit model total flux and the observed photometry.

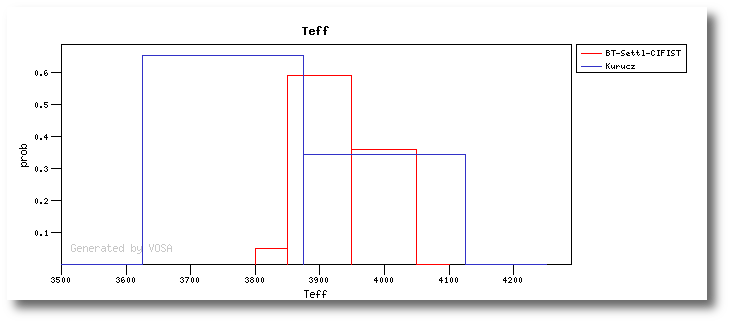

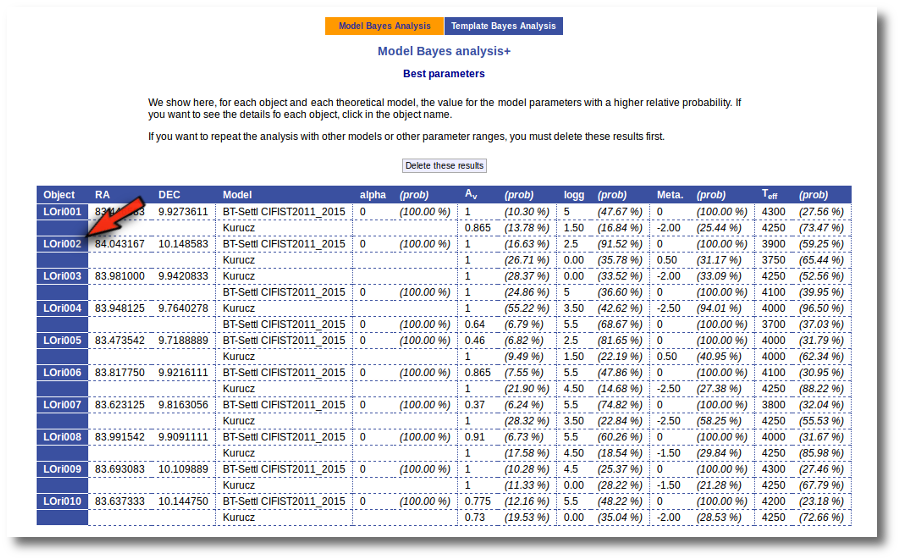

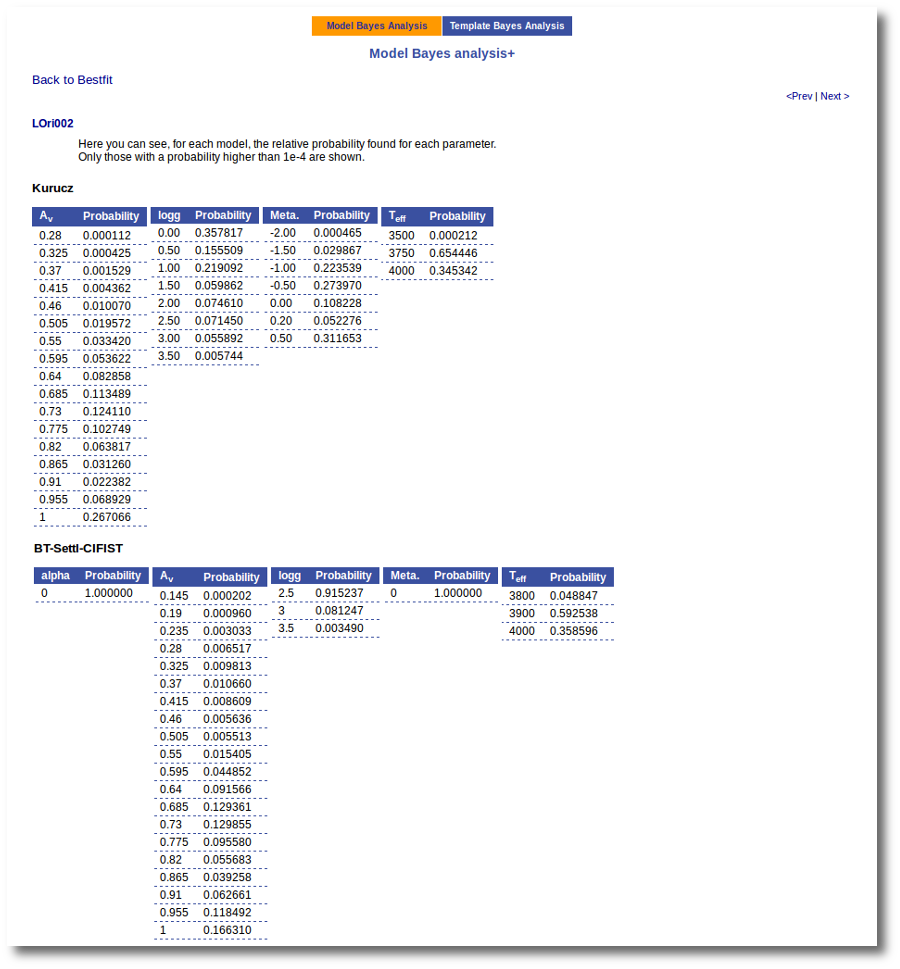

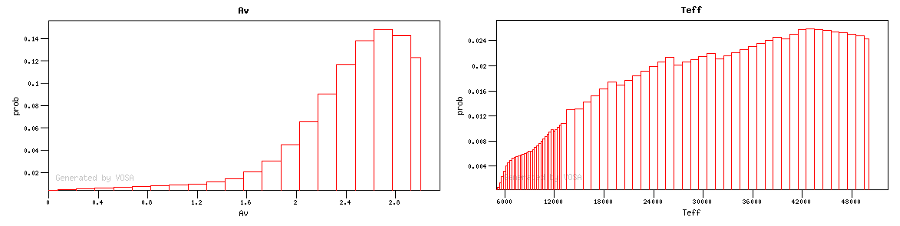

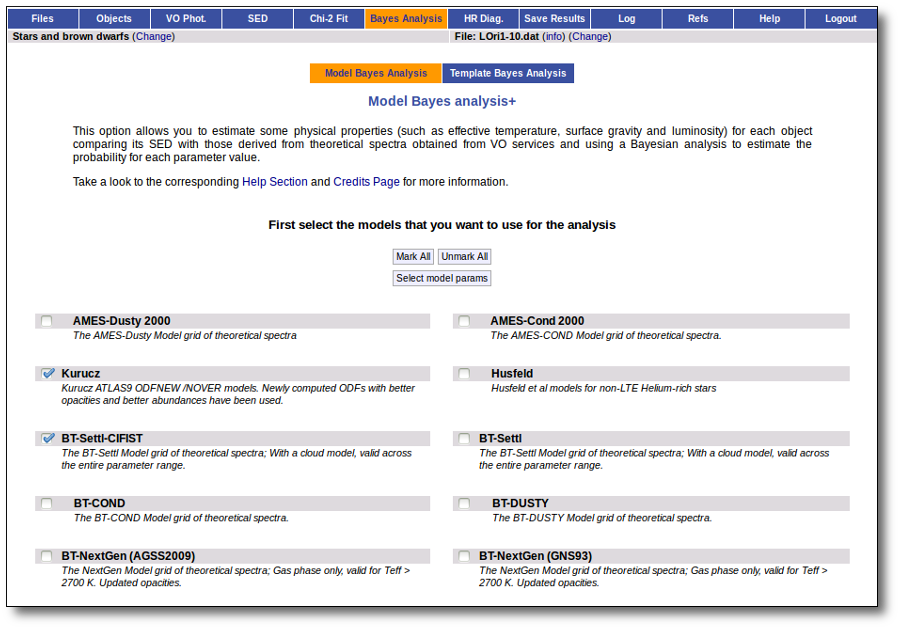

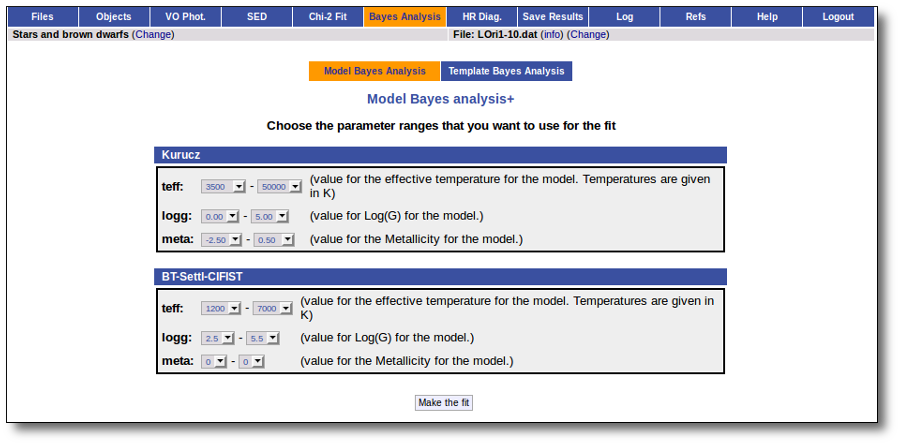

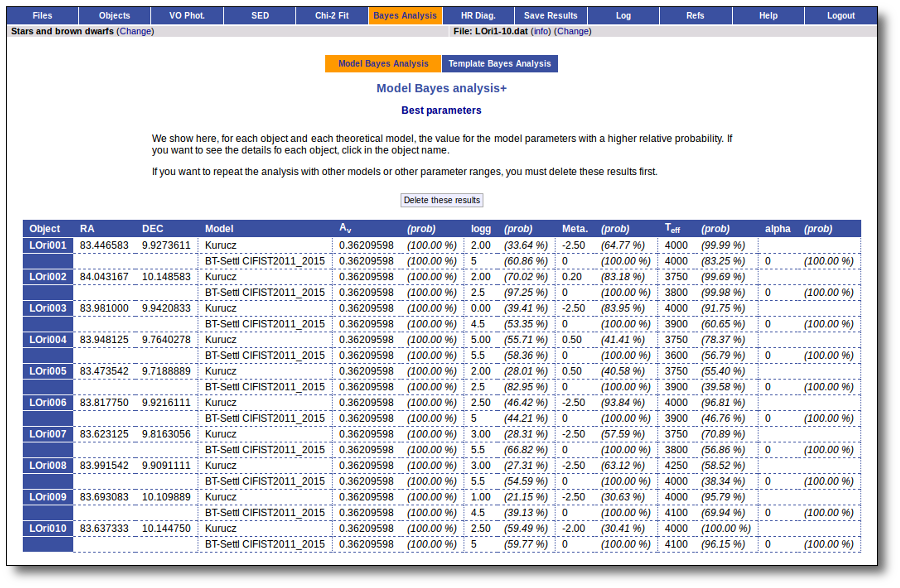

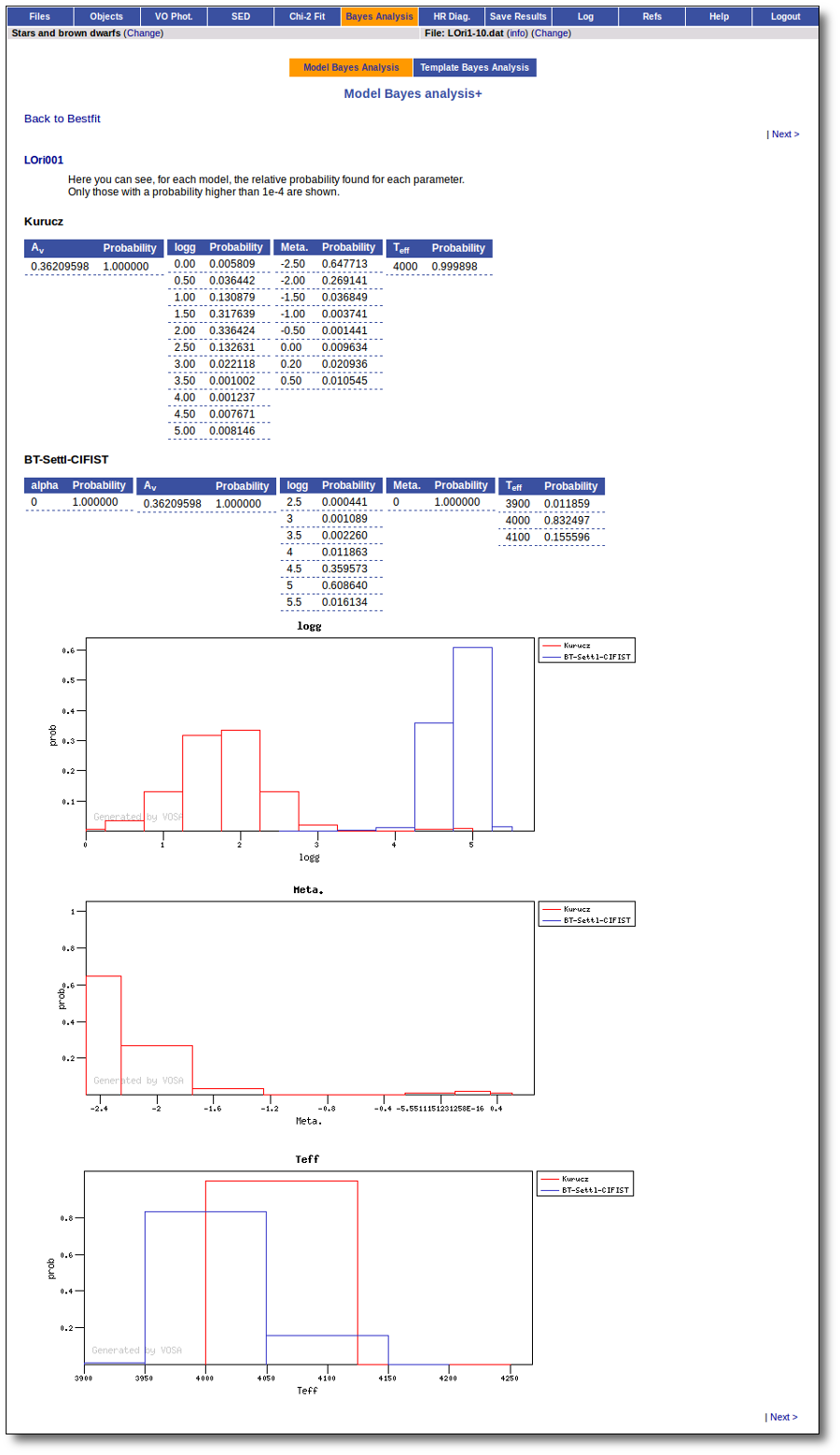

On the other hand, the Bayesian analysis provides the projected probability distribution functions (PDFs) for each parameter of the grid of synthetic spectra.

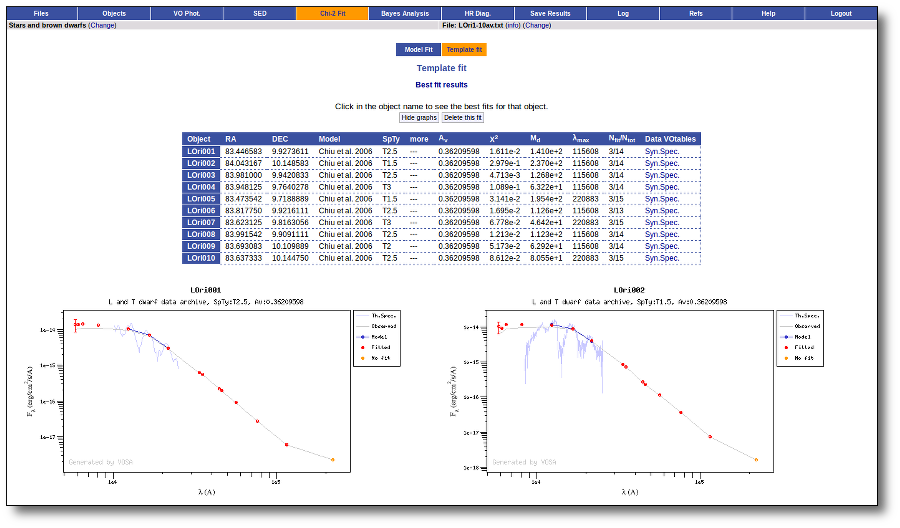

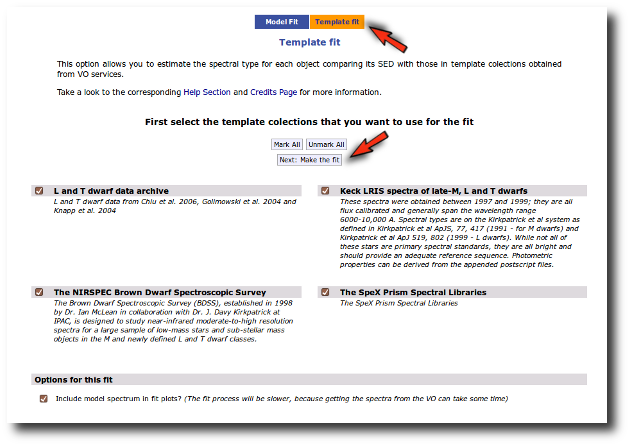

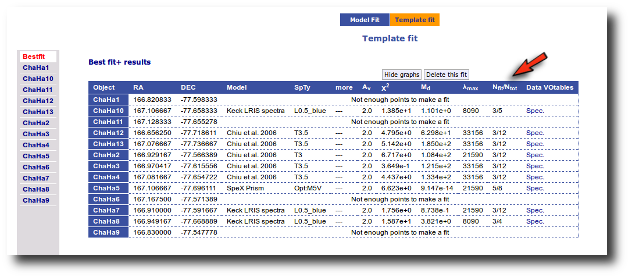

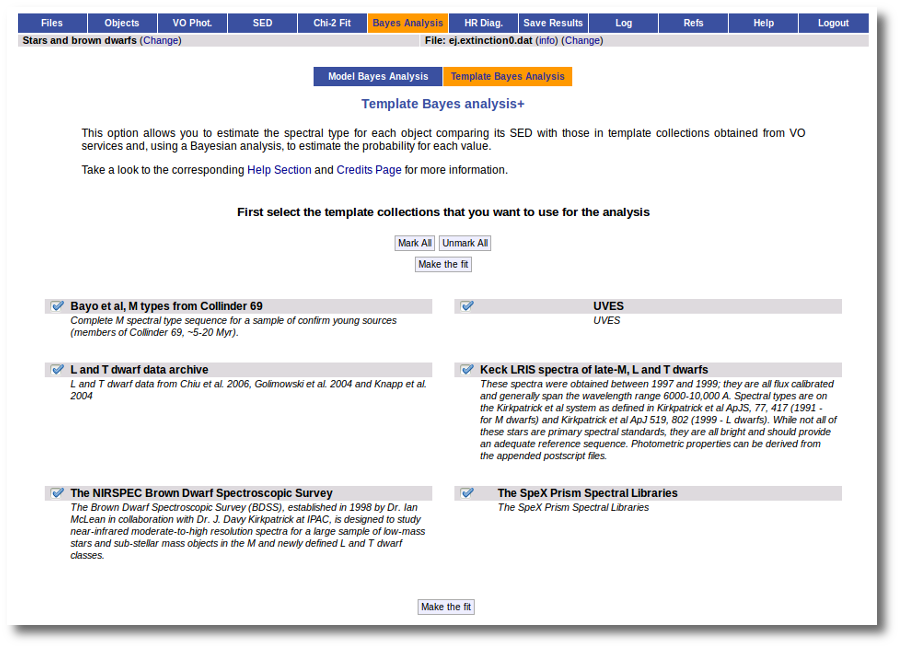

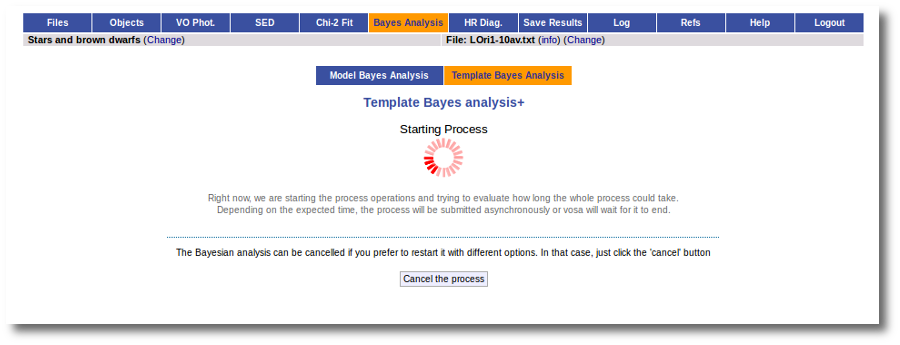

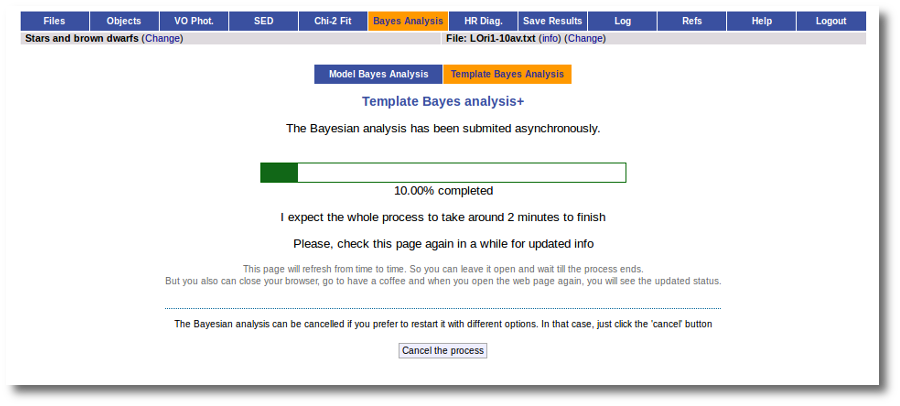

When these analysis tools are applied to observational templates (chi-square and bayes), we obtain an estimation of the Spectral Type too.

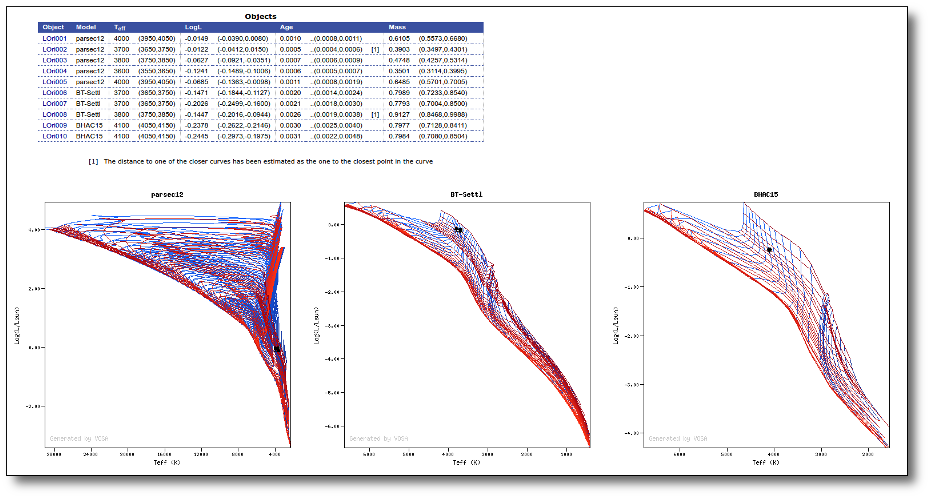

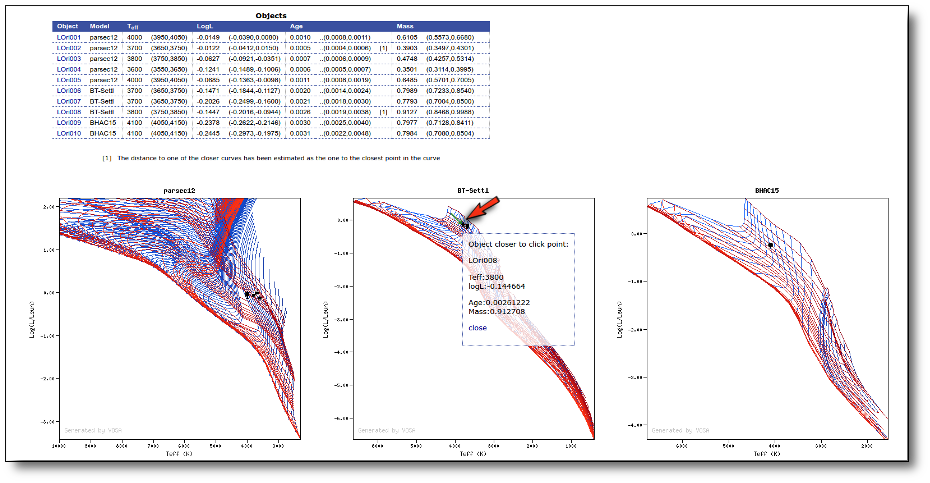

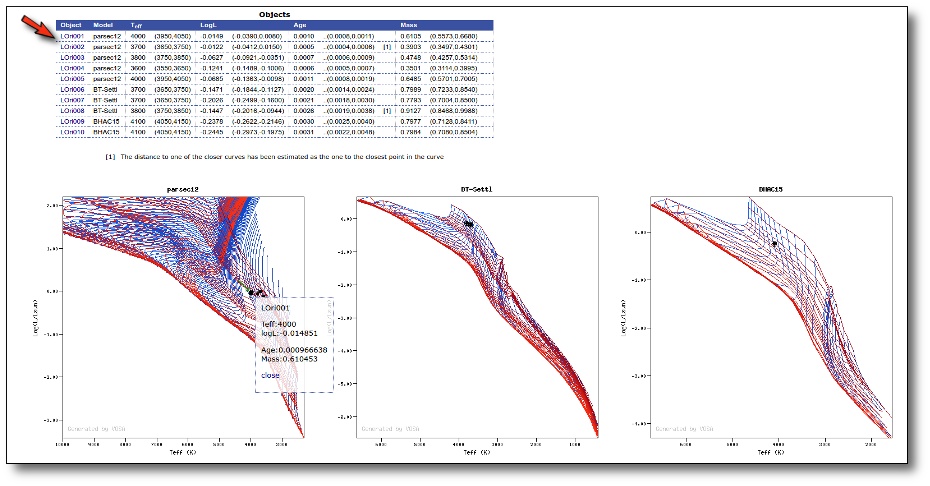

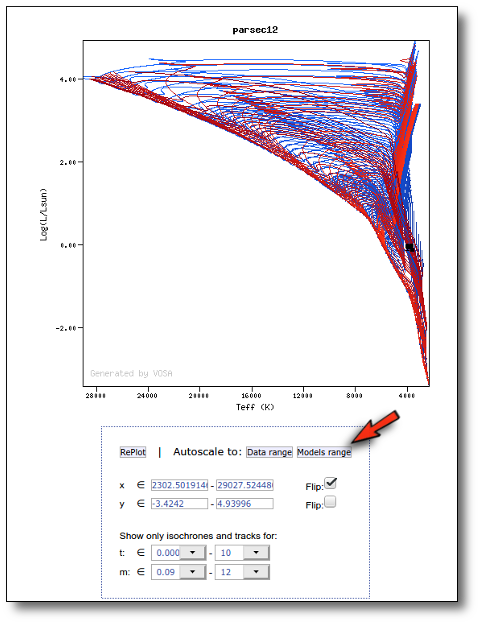

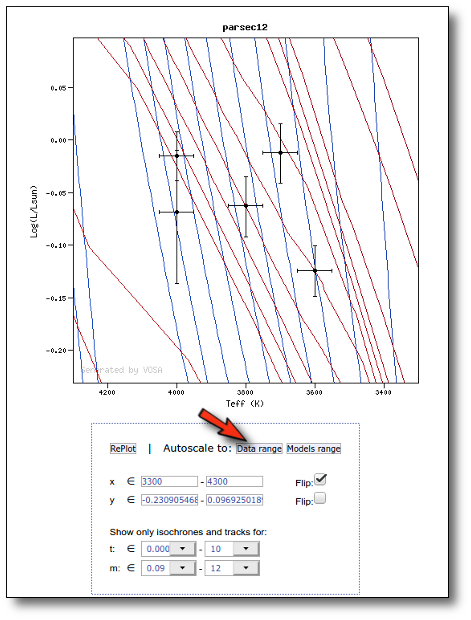

Once the best fit values for temperature and luminosity have been obtained, it is possible to build an HR diagram using isochrones and evolutionary tracks from VO services and making interpolations to estimate values of the age and mass for each object.

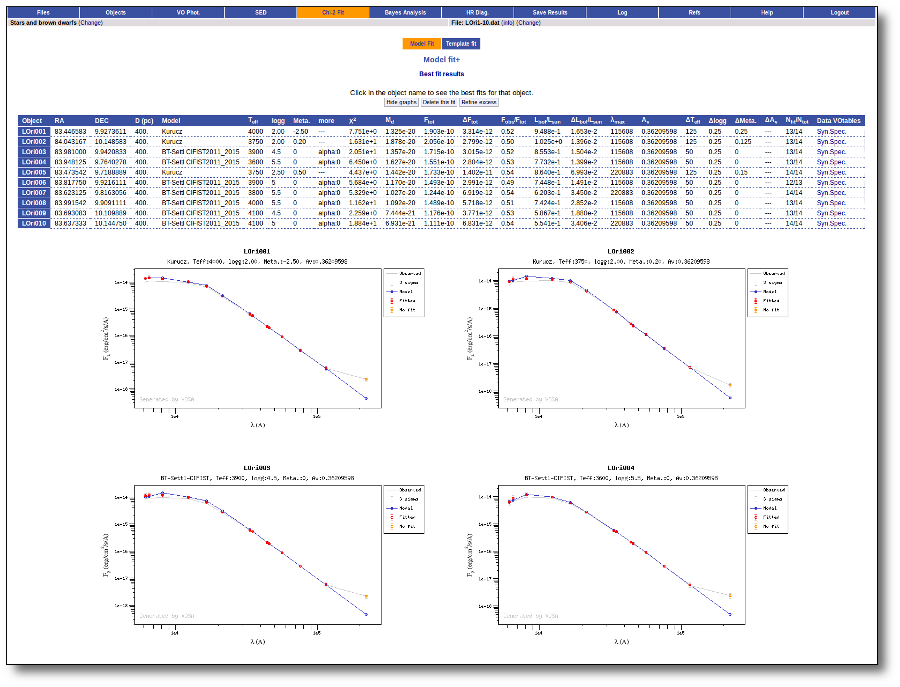

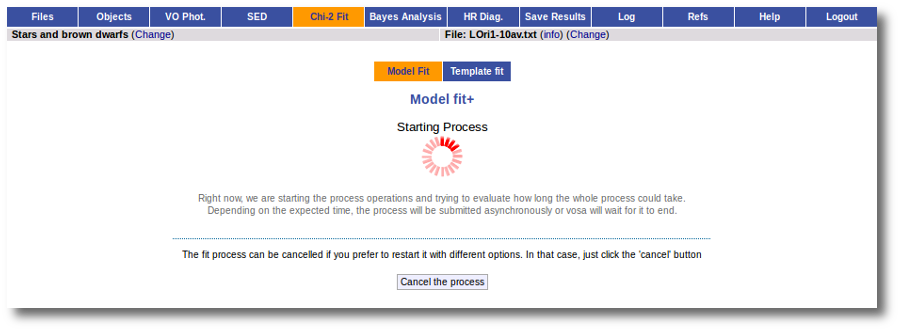

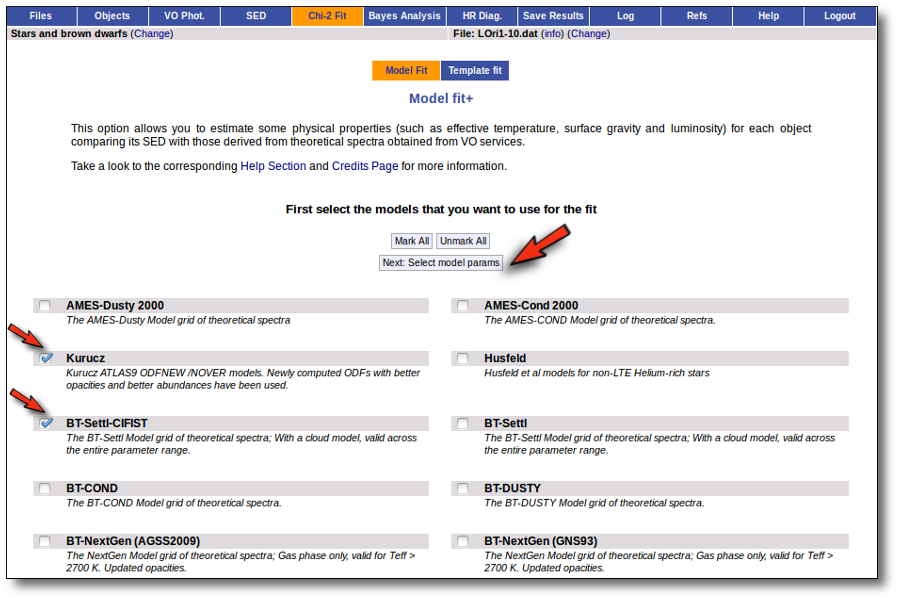

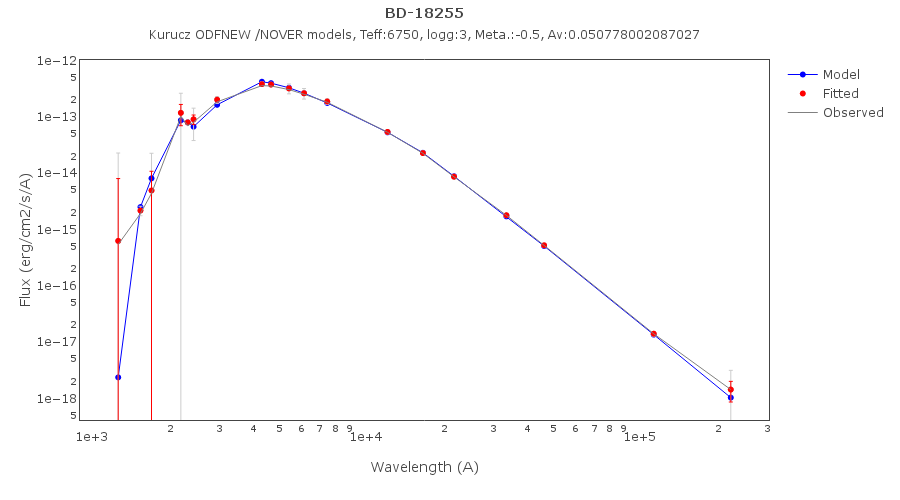

Model Fit

One of the main analysis options of this application is the Model fit.

Here the observed SED for each object is compared to the synthetic photometry for several theoretical models using a chi-square test. This gives an estimate of the physical properties of the given object.

If you provide a range for the visual extinction (AV), this fitting will also consider it as a fit parameter, as explained below.

Fit

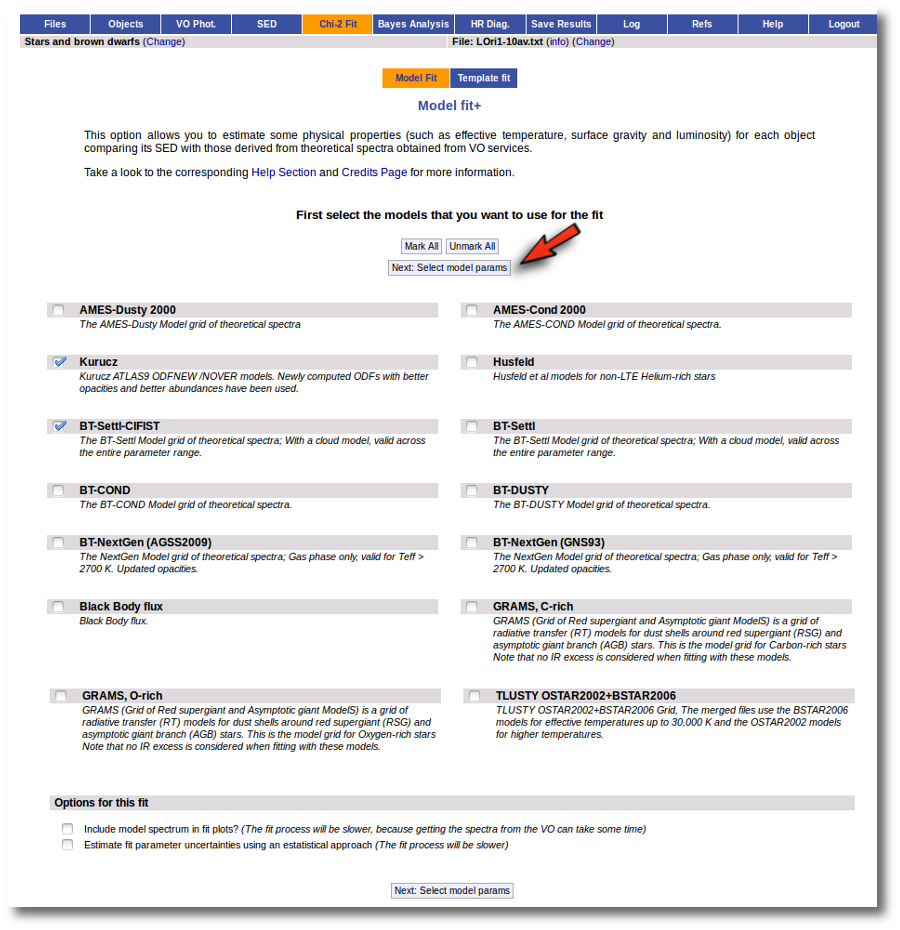

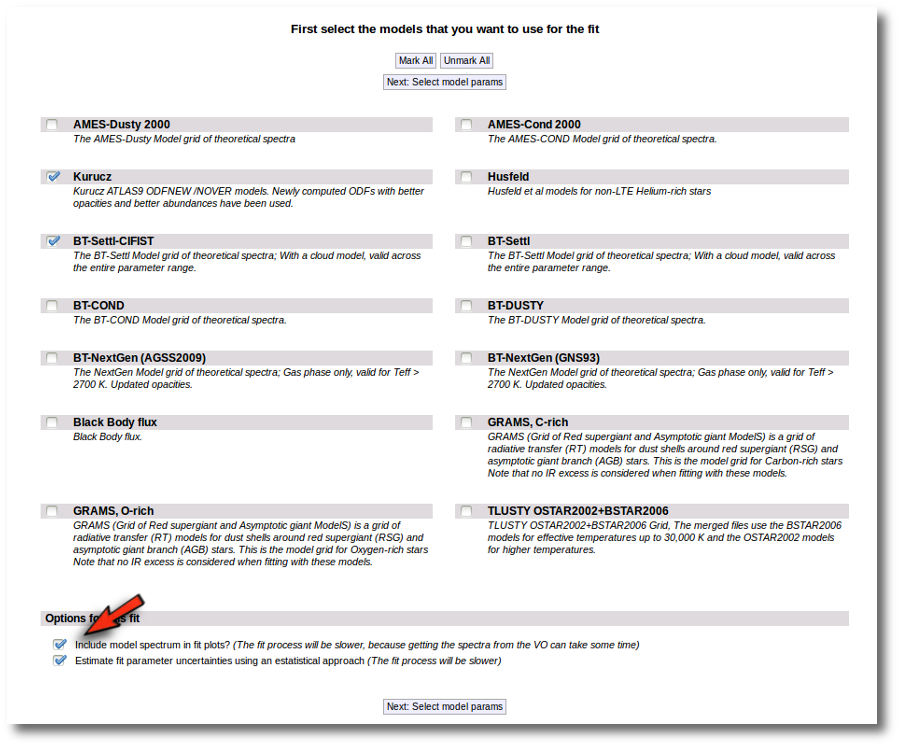

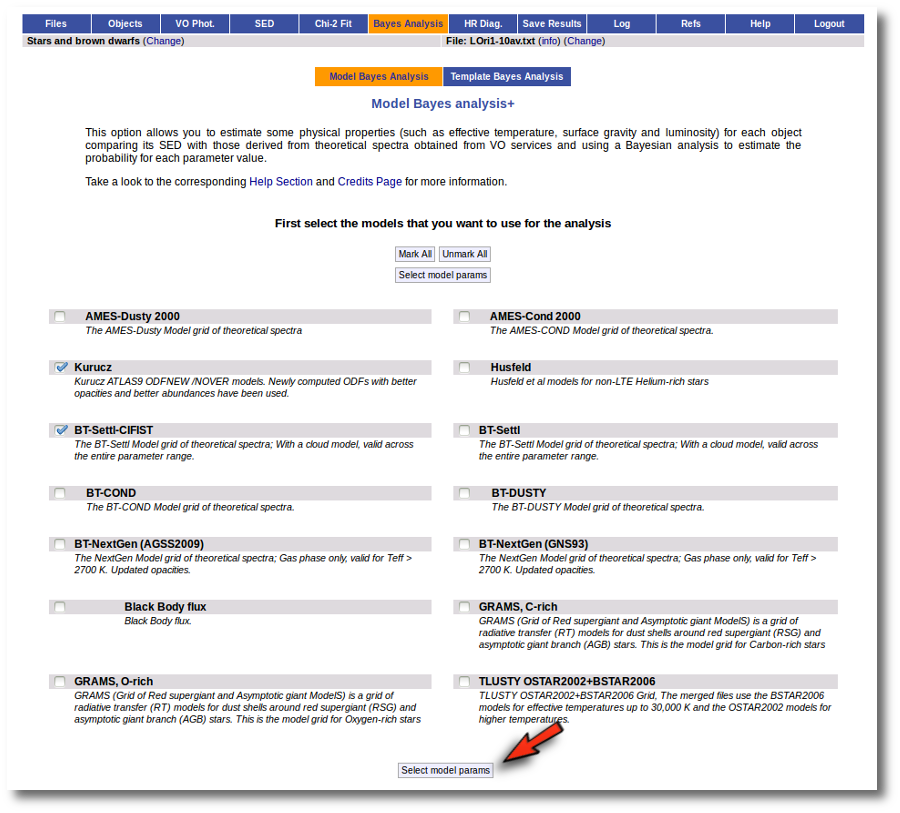

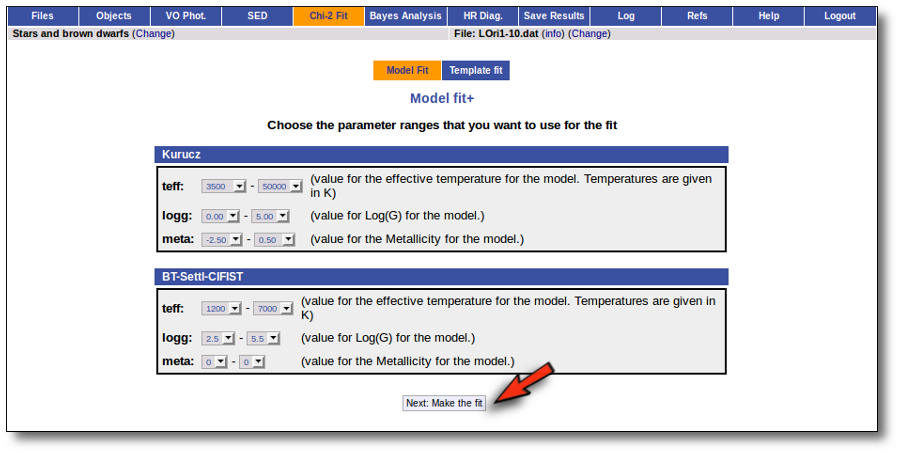

When a fitting process is started you can choose among a list of theoretical spectra models available in the VO. Only those that are checked will be used for the fit.

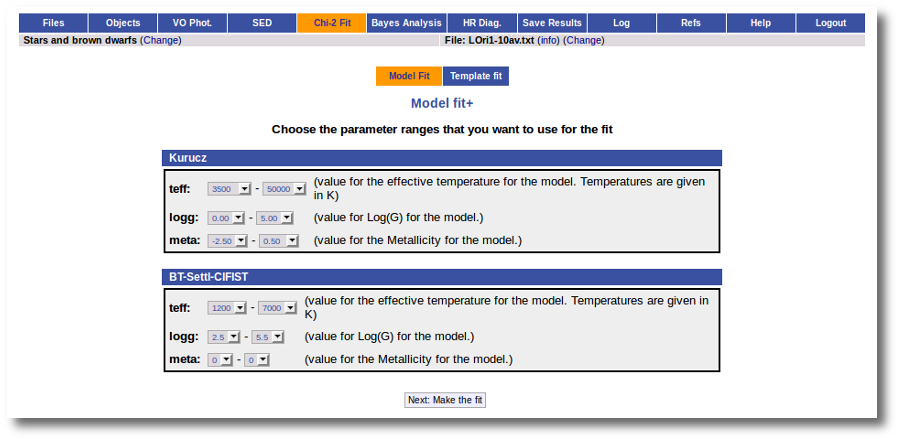

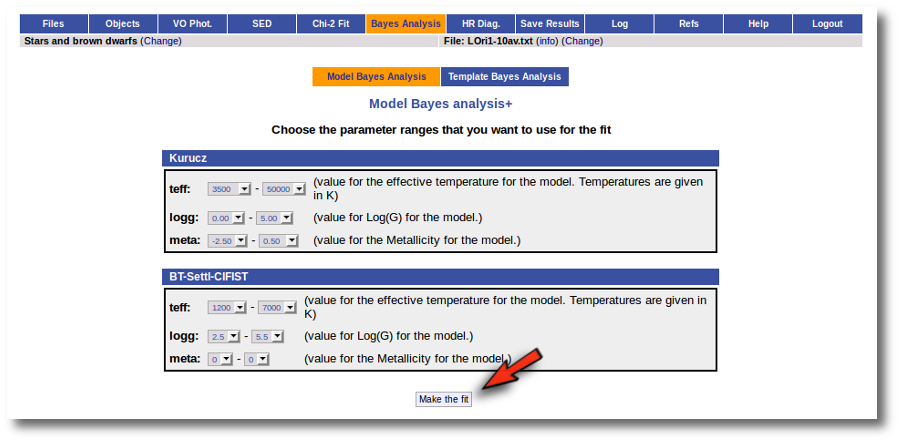

In the next step the application uses the TSAP protocol (SSAP for theoretical spectra) for asking the model servers which parameters are available to perform a search. According to that, a form is built for each model so that you can choose the ranges of parameters that you want to use for the fit. Take into account that:

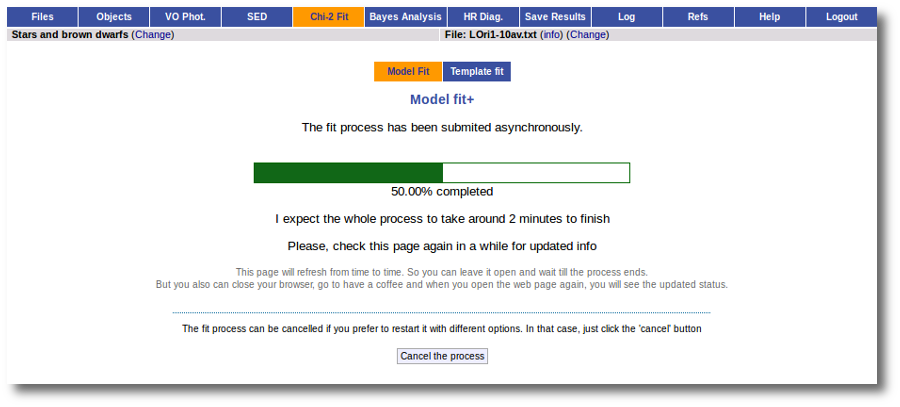

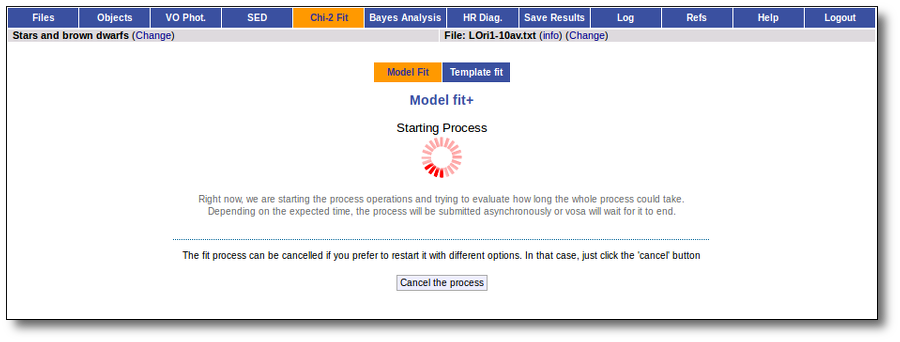

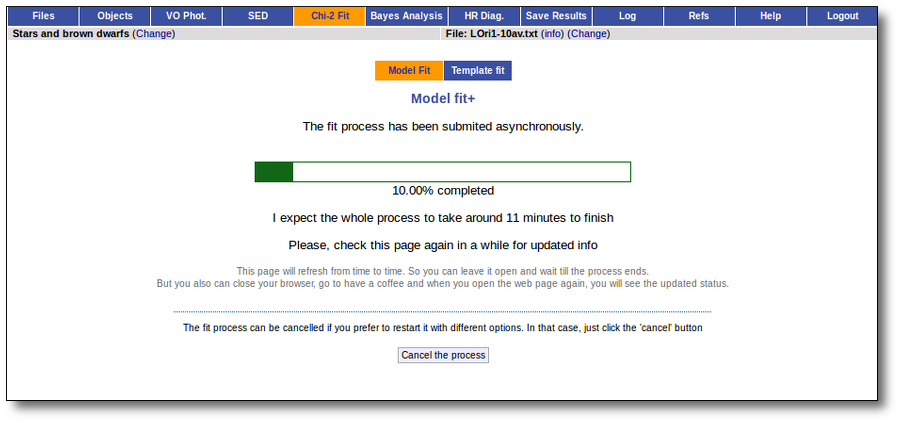

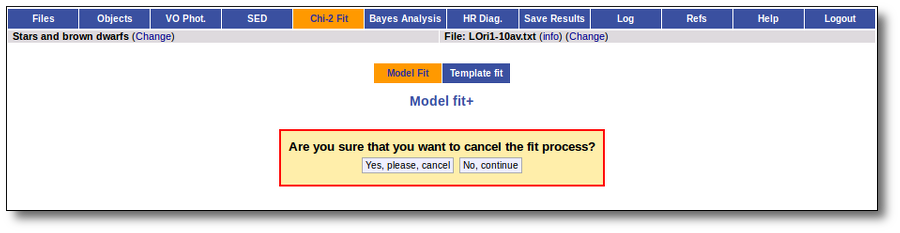

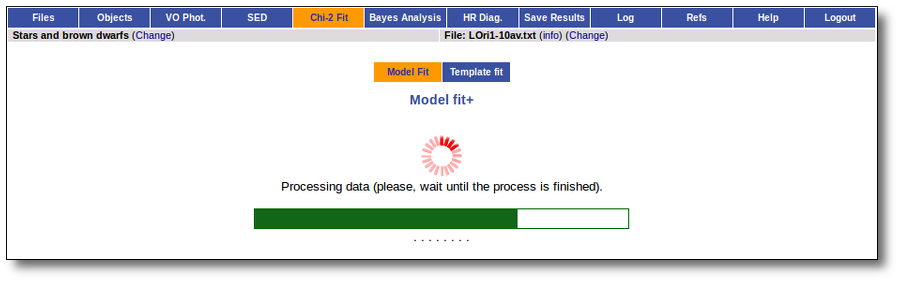

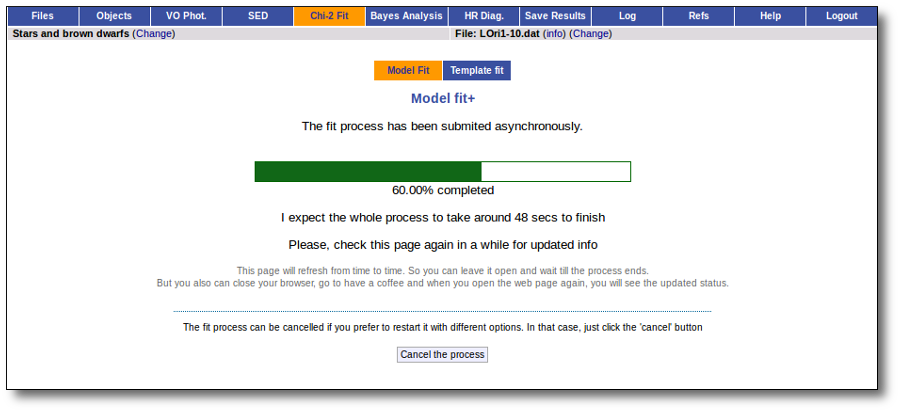

- The fitting process implies queries to VO services, data sent through the network, a lot of calculations (some done by the services themselves and some done by the application)... That means that it could take a long time to get the final results.

- Using more models and wider ranges of parameters will imply a longer time for the fitting (specially if your file contains many objects) so be ready for a long waiting time in the next step.

- In some cases, the whole range of parameters offered by the models are not right for your objects. For instance, if you know, for whatever physical reasons, that your objects have small temperatures, choose only small temperatures in the forms to optimize the process.

- The response time has roughly linear dependence on the number of objects in the file (twice number of objects means twice waiting time). Thus, you could prefer splitting your input file in different ones (according to physical properties, pertenence to a group or other reasons) better than doing all the work in an only data file.

- If you decide to fit the extinction too (giving a range for AV) this will also increase the fitting time. Take into account that 20 different values of AV are considered for each object/model combination. Although this won't imply a fitting time 20 times larger, it also enlarges the calculation time.

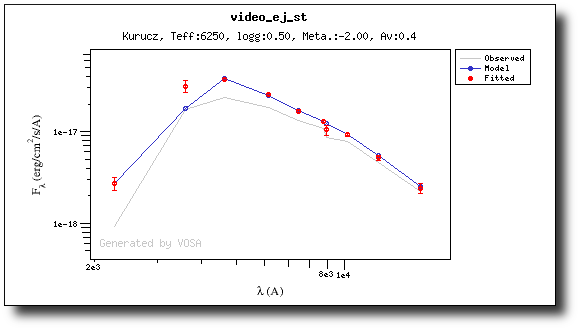

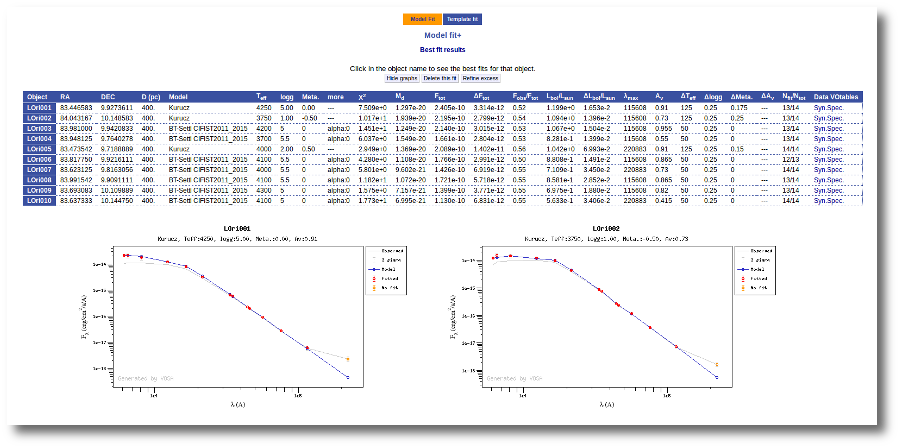

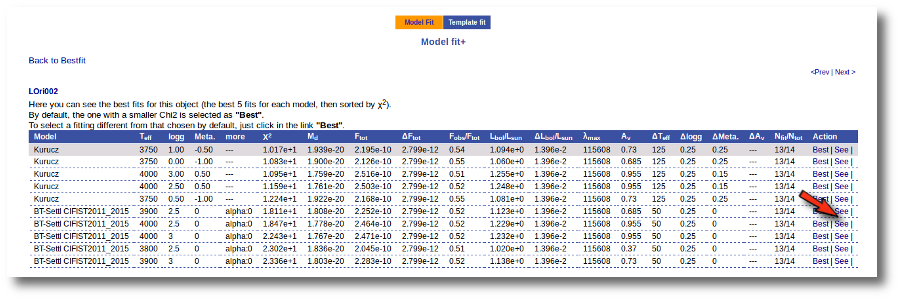

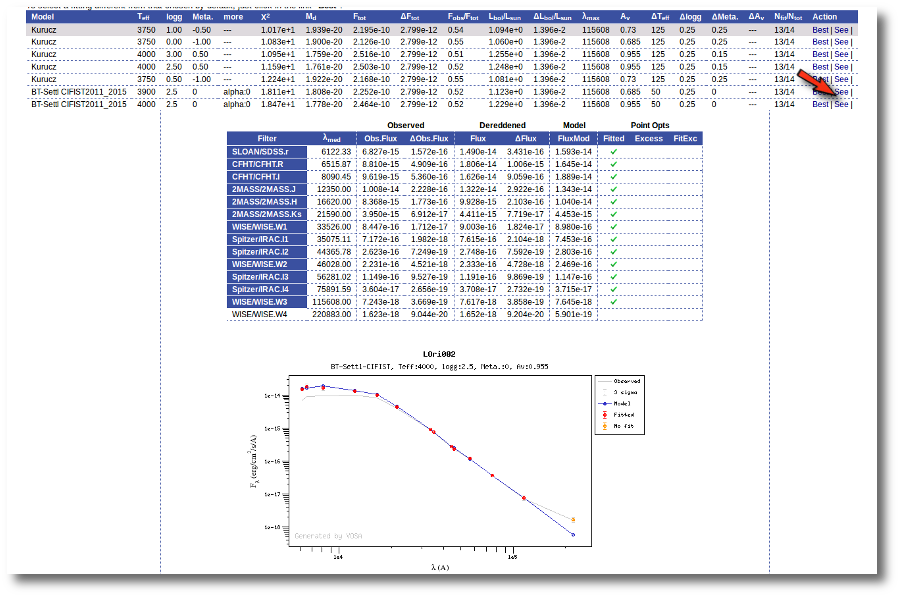

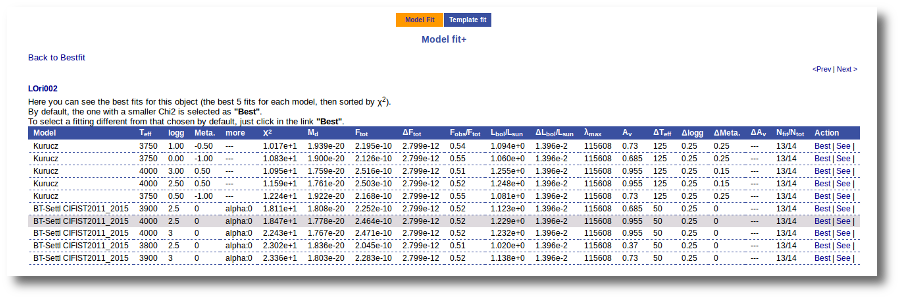

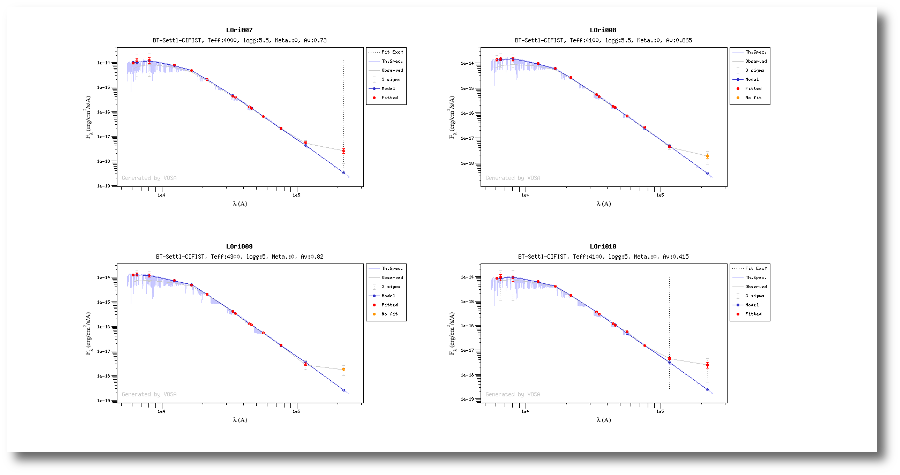

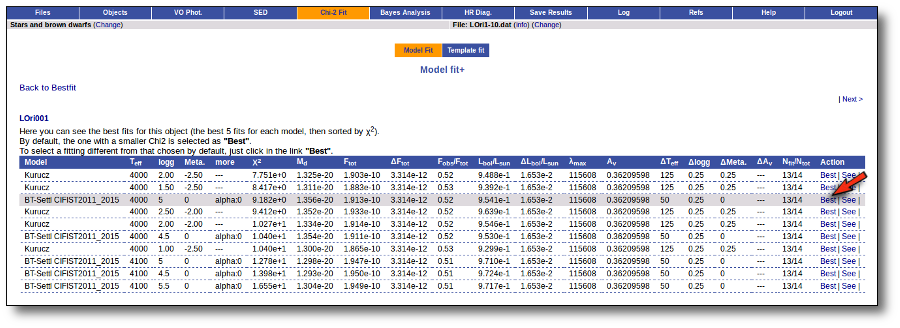

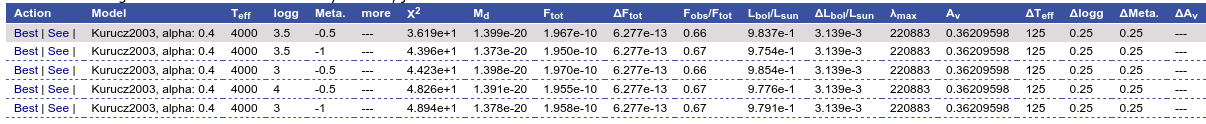

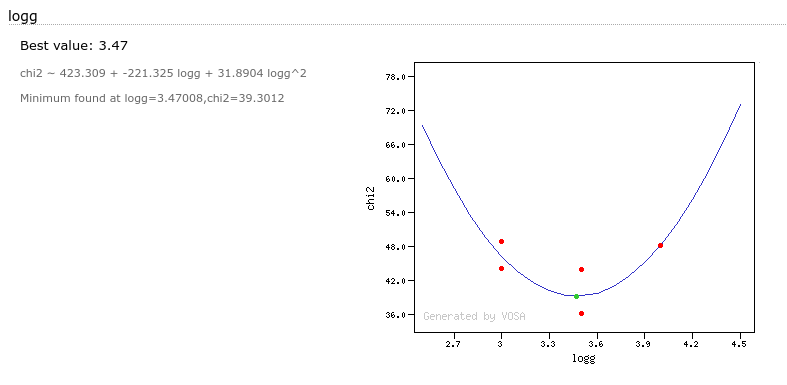

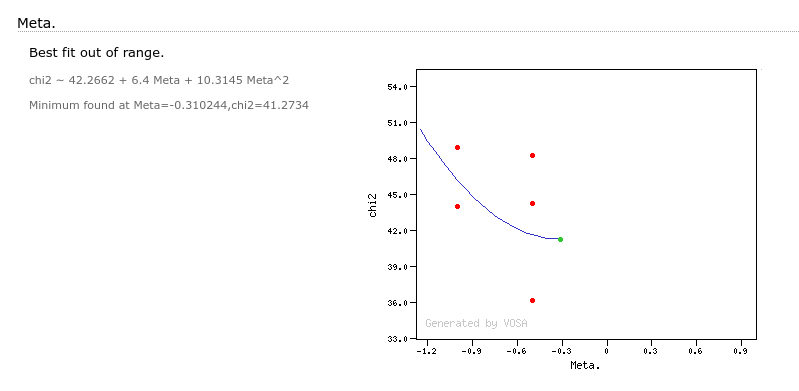

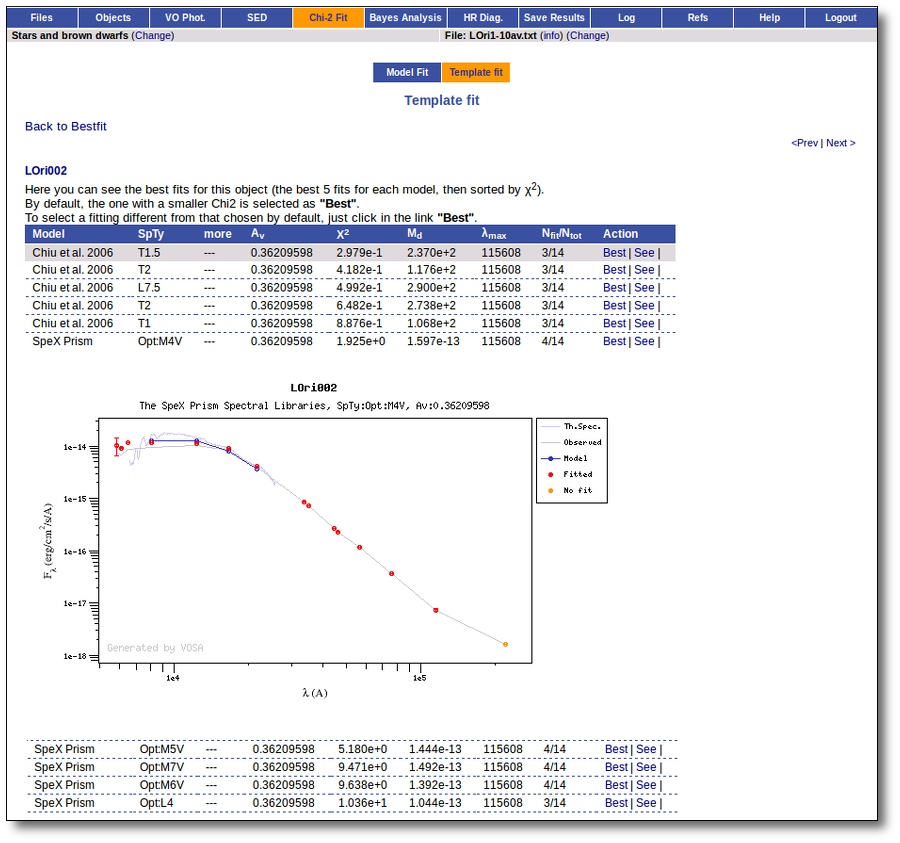

Once the fit has been finished, you can see a list with the best fit for each object and, optionally, a plot of these fits.

Besides that, for each particular object, you can also see a list with the best 5 fits for each model sorted by χ2. For each result you can see the corresponding SED and plot (with the "See" button) or use the "Best" button to mark a different result as the preferred best one. If you do that, this fit will be highlighted and it will be the one that will be shown in the "Best fit" table later.

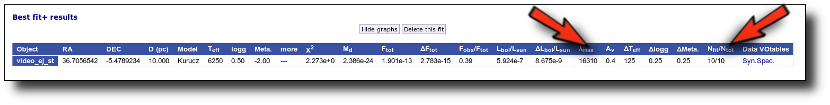

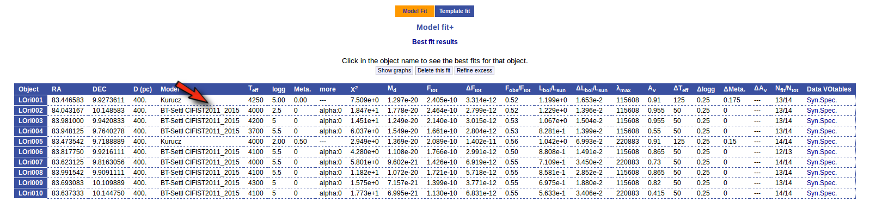

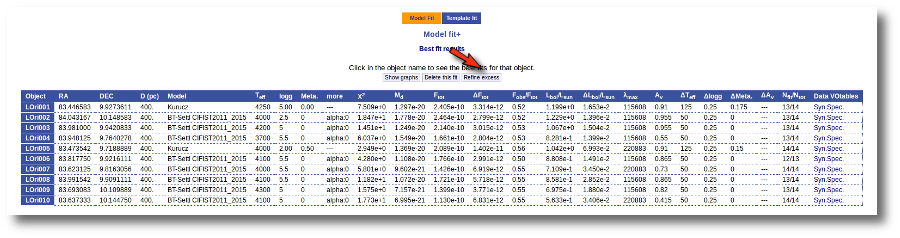

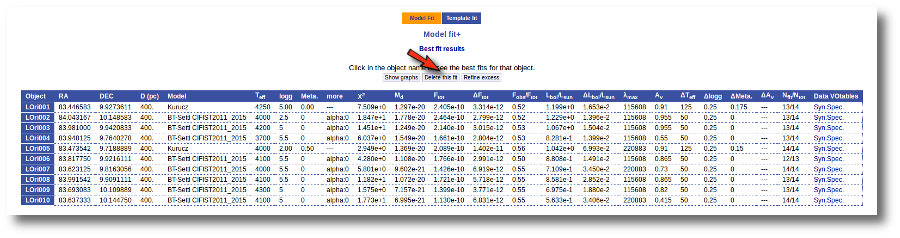

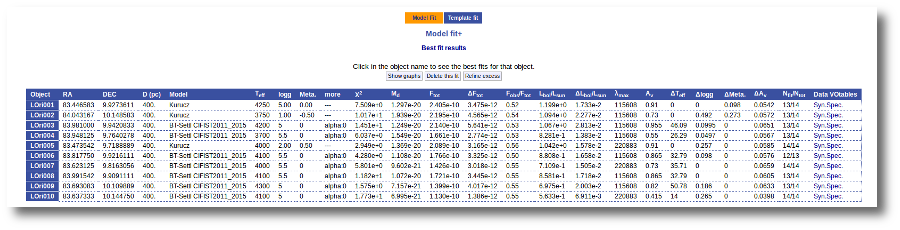

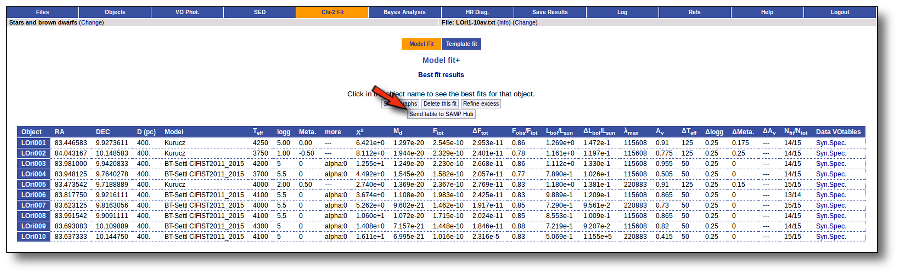

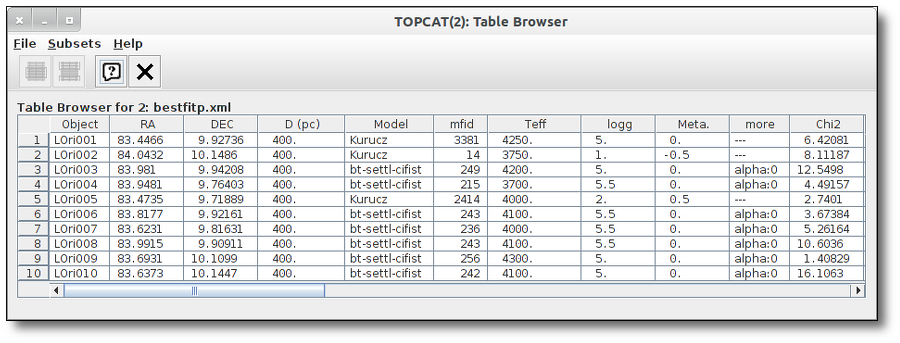

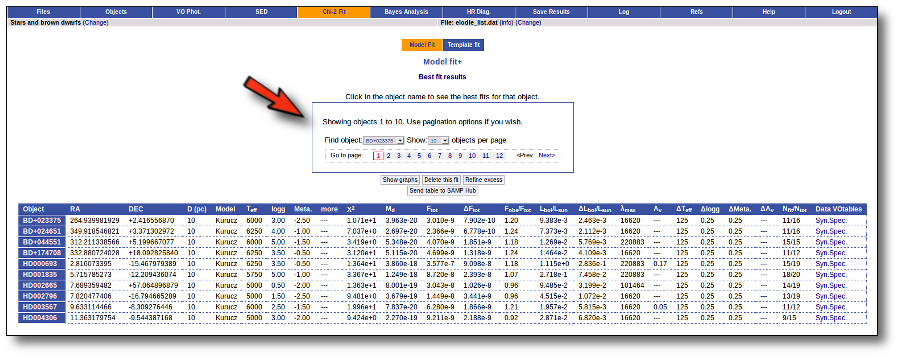

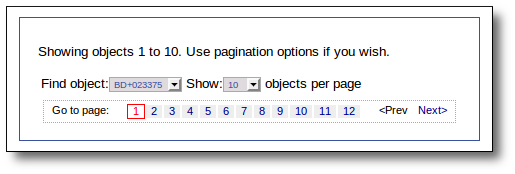

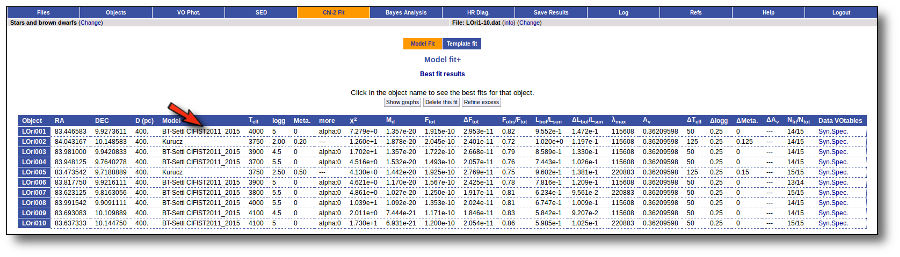

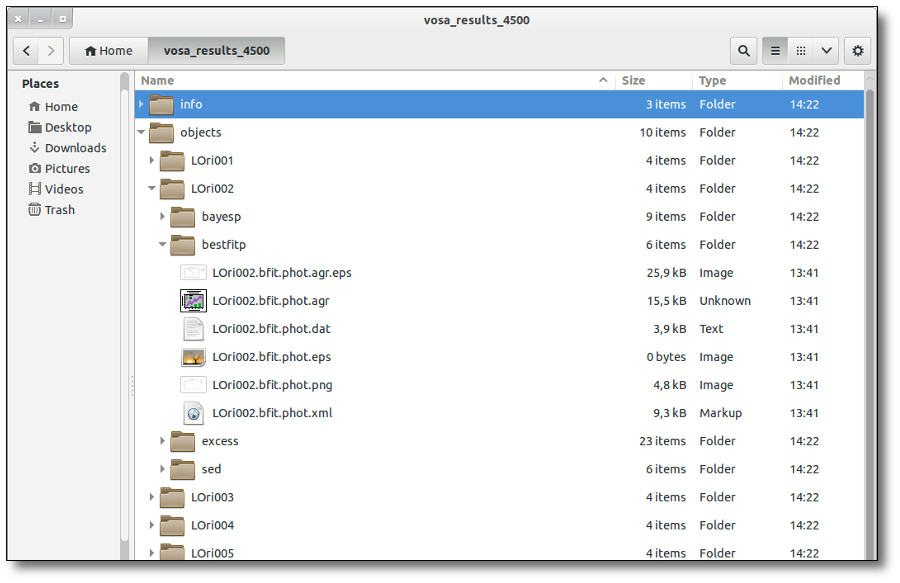

Best Fit

Once a fit has been done, you can see the Best Fit table with the best fit properties for each object.

A number of results are shown for each object:

- Object name, as given by the user.

- RA, Right Ascension as given by the user.

- DEC, Declination as given by the user.

- D(pc): distance in pc as given by the user (if the user does not provide a value, a typical default value of 10pc is used).

- Model name that best fits the data.

- Teff: effective temperature, in K, for the model that best fits the data.

- Log(G): logarithm of the gravity for the model that best fits the data.

- Metallicity: metallicity for the model that best fits the data.

- More: values for other (not so common) parameters used by the model.

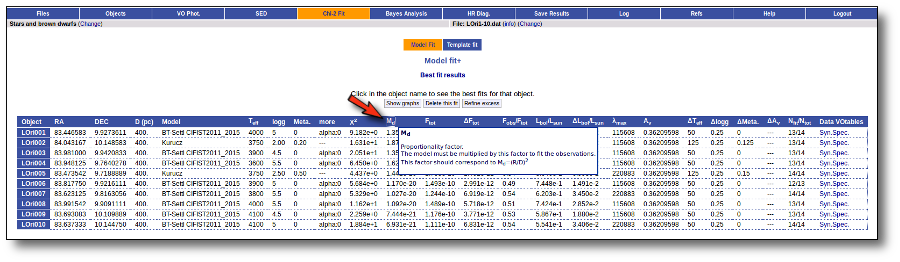

- Χr2: value of the reduced chi-squared parameter for the fit (see below).

- Md: dilution factor. Value by which the model has to be multiplied to fit the data (see below).

- Ftot: Total flux (see below).

- ΔFtot: error for the total flux.

- Fobs/Ftot: fraction of the total flux obtained from the observed photometry (See below).

- Lbol/Lsun: Bolometric luminosity (See below).

- ΔLbol/Lsun: error for the Bolometric luminosity.

- λmax: value of the last wavelength considered for the fitting In order to avoid data with excess) (See below).

- AV: final value of AV used for dereddening the sed.

- ΔTeff: uncertainty for the effective temperature. It's estimated as half the grid step, around the given value, for this model.

- ΔLog(G): uncertainty for the logarithm of the gravity. It's estimated as half the grid step, around the given value, for this model.

- ΔMeta.: uncertainty for the metallicity. It's estimated as half the grid step, around the given value, for this model.

- ΔAV.: uncertainty in the value of AV (in the case that AV has been used as a fit parameter).

- R1. Estimate of the stellar radius obtained using Md and the distance (See below).

- ΔR1. Uncertainty on R1 (See below).

- R2. Estimate of the stellar radius obtained using logg and the distance (See below).

- ΔR2. Uncertainty on R2 (See below).

- M1. Estimate of the stellar Mass using Lbol and R1 (See below).

- ΔM1. Uncertainty on M1 (See below).

- M2. Estimate of the stellar Mass using Lbol and R2 (See below).

- ΔM2. Uncertainty on M2 (See below).

- Nfit/Ntot: Number of points considered in the fitting (not taking into account points with excess or points labeled as 'nofit') divided by the total number of observed points (See below).

- Link to a VOtable with the synthetic spectra corresponding to the best fit.

When the fit has been made with the option of calculating parameter uncertainties using a Monte Carlo method, a statistical distribution is obtained for these parameters and some other values are shown in this table:

- Teff,min,68, Teff,max,68: Minimum and maximum value for the effective temperature at the 68% confidence level. Calculated as the 14 and 84 percentiles of the distribution.

- Teff,min,96, Teff,max,96: Minimum and maximum value for the effective temperature at the 96% confidence level. Calculated as the 2 and 98 percentiles of the distribution.

- loggmin,68, loggmax,68: Minimum and maximum value for logg at the 68% confidence level. Calculated as the 14 and 84 percentiles of the distribution.

- loggmin,96, loggmax,96: Minimum and maximum value for logg at the 96% confidence level. Calculated as the 2 and 98 percentiles of the distribution.

- Metamin,68, Metamax,68: Minimum and maximum value for the Metallicity at the 68% confidence level. Calculated as the 14 and 84 percentiles of the distribution.

- Metamin,96, Metamax,96: Minimum and maximum value for the Metallicity at the 96% confidence level. Calculated as the 2 and 98 percentiles of the distribution.

- AV,min,68, AV,max,68: Minimum and maximum value for AV at the 68% confidence level. Calculated as the 14 and 84 percentiles of the distribution.

- AV,min,96, FV,max,96: Minimum and maximum value for AV at the 96% confidence level. Calculated as the 2 and 98 percentiles of the distribution.

- Ftot,min,68, Ftot,max,68: Minimum and maximum value for the total Flux at the 68% confidence level. Calculated as the 14 and 84 percentiles of the distribution.

- Ftot,min,96, Ftot,max,96: Minimum and maximum value for the total Flux at the 96% confidence level. Calculated as the 2 and 98 percentiles of the distribution.

Extinction fit

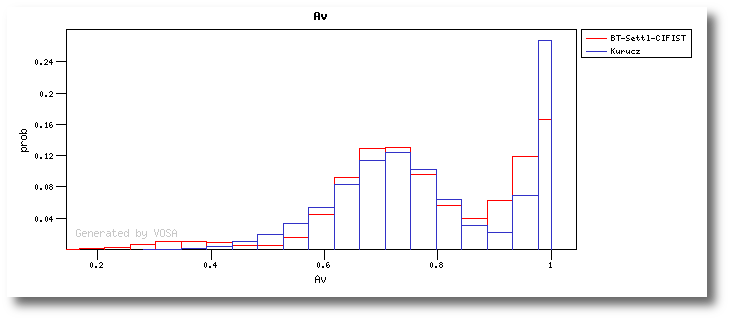

If a range for the visual extinction (AV) is given, it will also be considered a fit parameter.

You can provide this range for each object in two different ways:

- In the input file (10th column). See Upload file format section for more info.

- In the "Objects: extinction" tab.

If you don't provide a range for AV, the default value provided by you (also in the input file or the Extinction tab) will be used.

If you provide a range, like for instance AV:0.5/5.5, the fit service will compare each particular file of the model with the observed SED dereddened using 20 different values for AV in that range. Then the best fit models will be returned by the service with the best corresponding value of AV.

Reduced chi-square

The fit process minimizes the value of Χr2 defined as:

$$\chi_r^2=\frac{1}{N-n_p}\sum_{i=1}^N\left\{\frac{(Y_{i,o}-M_d Y_{i,m})^2}{\sigma_{i,o}^2}\right\}$$Where:

| N: | Number of photometric points. |

| np: | Number of fitted parameters for the model. (N-np are the degrees of freedom associated to the chi-square test) |

| Yo: | observed flux. |

| σo: | observational error in the flux. |

| Ym: | theoretical flux predicted by the model. |

| Md: | Multiplicative dilution factor, defined as: $M_d=(R/D)^2$, being R the object radius and D the distance between the object and the observer. It is calculated as a result of the fit too. |

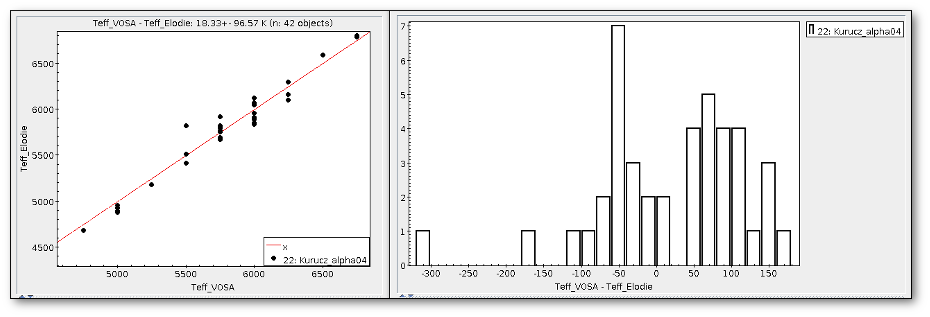

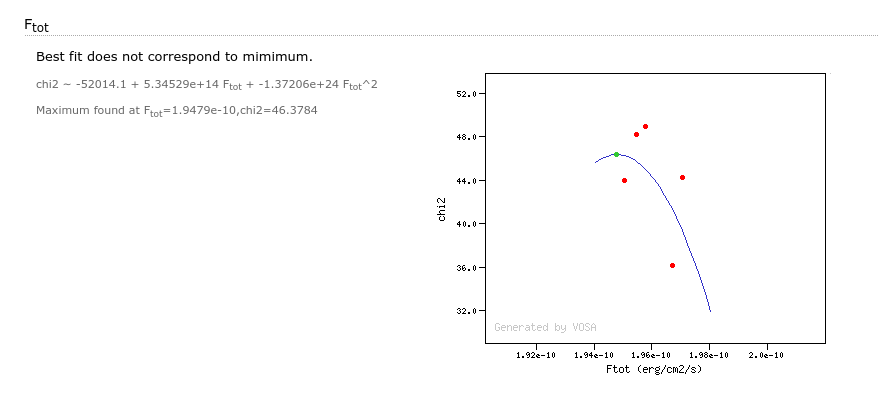

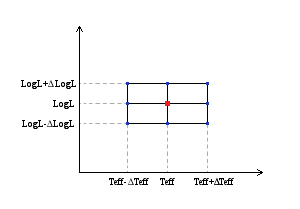

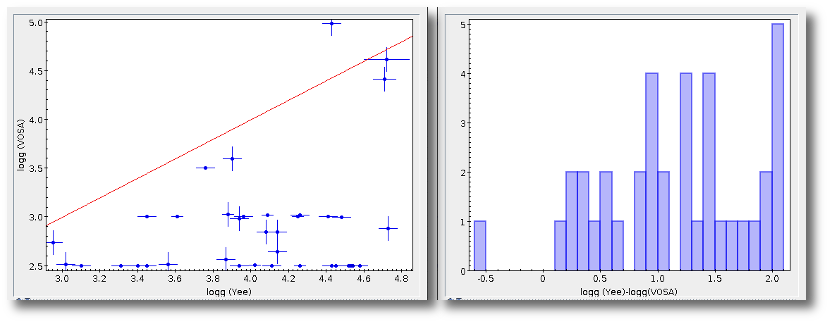

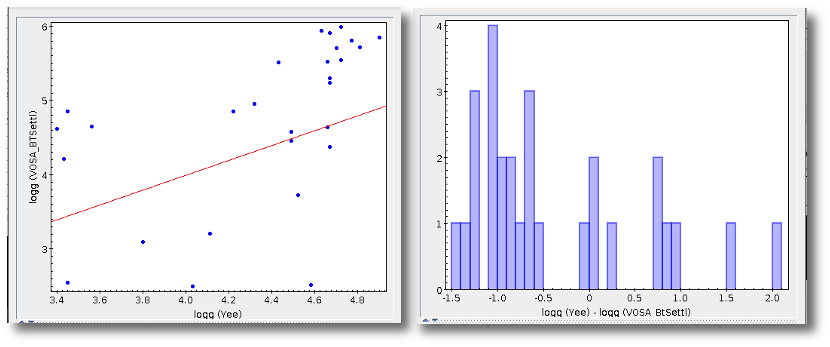

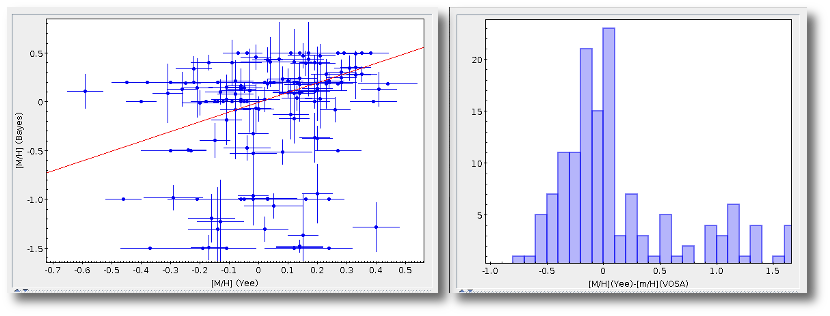

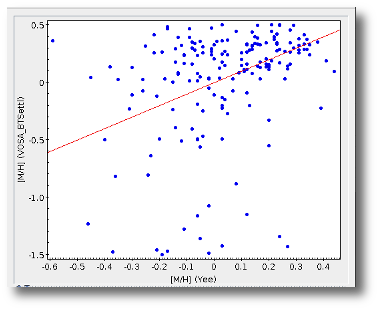

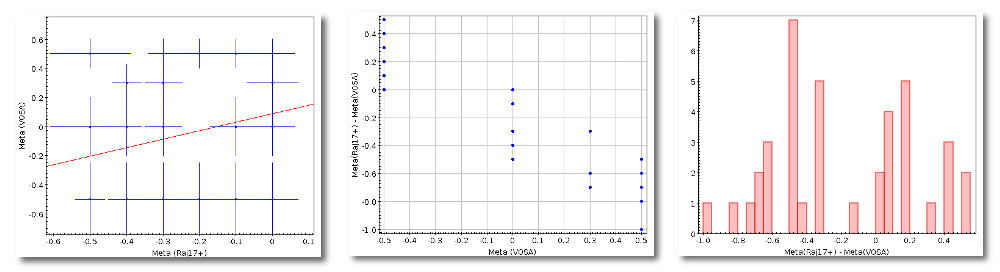

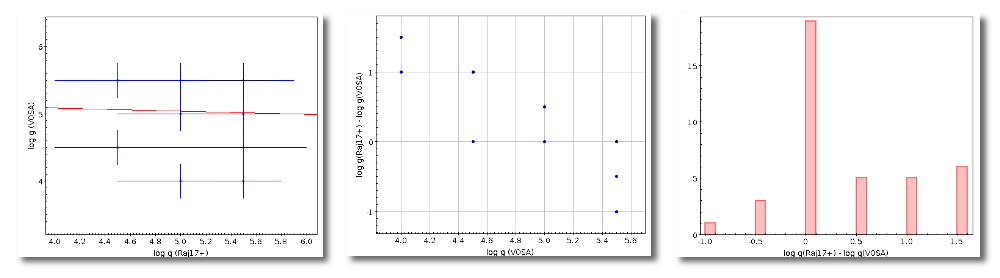

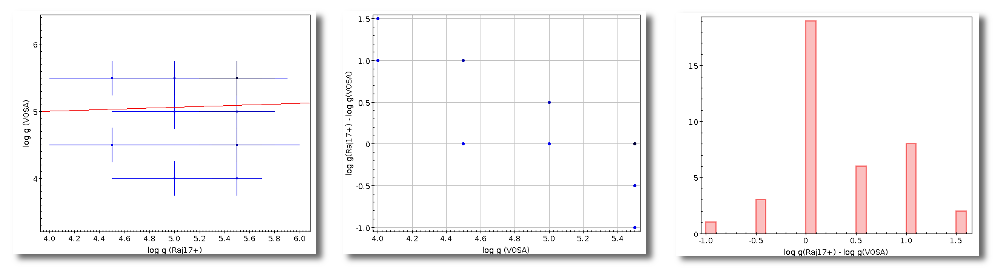

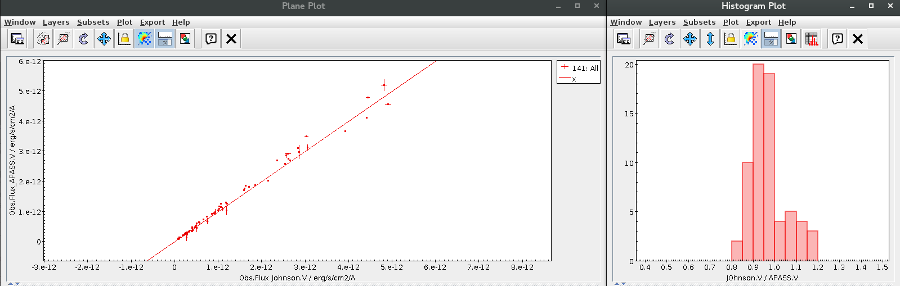

Visual goodness of fit

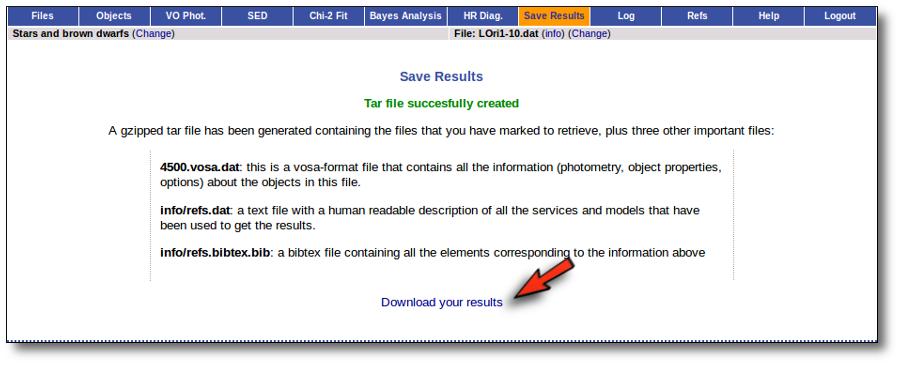

Two extra parameters, Vgf and Vgfb are also calculated as estimates of what we call the visual goodness of fit.